145170ca9bbd89aa01d8a40841e3c039d3683af8,stellargraph/layer/graph_attention.py,GraphAttention,call,#GraphAttention#Any#,211

Before Change

# Convert A to dense tensor - needed for the mask to work
# TODO: replace this dense implementation of GraphAttention layer with a sparse implementation
if K.is_sparse(A):
    A = tf.sparse_tensor_to_dense(A, validate_indices=False)

# For the GAT model to match that in the paper, we need to ensure that the graph has self-loops,
# since the neighbourhood of node i in eq. (4) includes node i itself.
# Adding self-loops to A via setting the diagonal elements of A to 1.0:
if kwargs.get("add_self_loops", False):
    # get the number of nodes from inputs[1] directly
    N = K.int_shape(inputs[1])[-1]
    if N is not None:
        # create self-loops
        A = tf.linalg.set_diag(A, K.cast(np.ones((N,)), dtype="float"))
    else:
        raise ValueError(
            "{}: need to know number of nodes to add self-loops; obtained None instead".format(
                type(self).__name__
            )
        )

outputs = []
for head in range(self.attn_heads):
    kernel = self.kernels[head]  # W in the paper (F x F')
    attention_kernel = self.attn_kernels[

After Change

dense += mask

# Apply softmax to get attention coefficients
dense = K.softmax(dense, axis=1)  # (N x N), Eq. 3 of the paper

# Apply dropout to features and attention coefficients

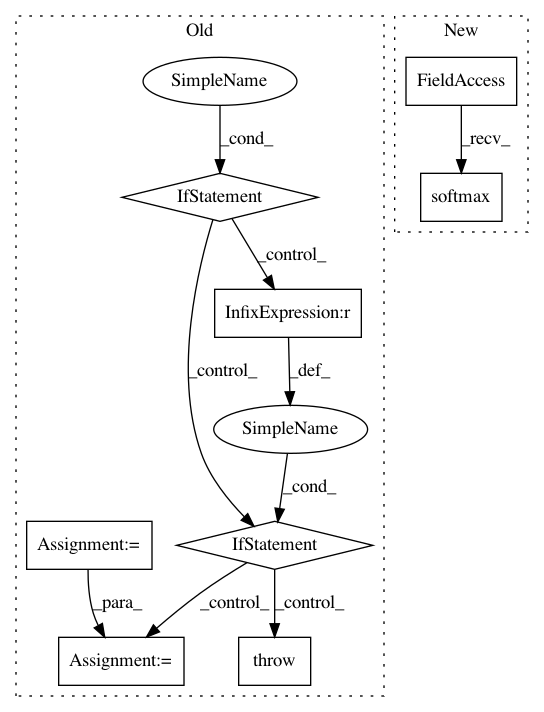

dropout_feat = Dropout(self.in_dropout_rate)(features)  # (N x F')

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances:

Project Name: stellargraph/stellargraph
Commit Name: 145170ca9bbd89aa01d8a40841e3c039d3683af8
Time: 2019-06-03
Author: andrew.docherty@data61.csiro.au
File Name: stellargraph/layer/graph_attention.py
Class Name: GraphAttention
Method Name: call

Project Name: tensorlayer/tensorlayer
Commit Name: 4c02d6d4da5ba31d7c9e6e11e6415e8bd2fa2962
Time: 2016-11-21
Author: dhsig552@163.com
File Name: tensorlayer/activation.py
Class Name:
Method Name: pixel_wise_softmax

Project Name: zsdonghao/text-to-image
Commit Name: 74796ff02e9425ca336f595978fe6e7c422c0378
Time: 2017-04-11
Author: dhsig552@163.com
File Name: tensorlayer/activation.py
Class Name:
Method Name: pixel_wise_softmax
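
The stellargraph change computes attention on a dense N x N matrix. Self-loops are added by setting the diagonal of A to 1.0, `dense += mask` pushes non-edges towards minus infinity, and `K.softmax(dense, axis=1)` then normalises each row over node i's neighbourhood (Eq. 3 of the GAT paper). The NumPy sketch below reproduces only those steps, outside Keras. The function name `masked_attention_softmax` and the `-10e9` mask constant are assumptions for illustration; the record shows `dense += mask` but not how `mask` is built.

```python
import numpy as np


def masked_attention_softmax(scores, adjacency, add_self_loops=True):
    """Row-wise softmax of attention logits, restricted to graph neighbours.

    scores:    (N, N) array of unnormalised attention logits
    adjacency: (N, N) dense 0/1 adjacency matrix A
    """
    # np.array copies, so the caller's adjacency matrix is left unchanged
    A = np.array(adjacency, dtype=float)
    if add_self_loops:
        # Set the diagonal of A to 1.0 so node i is in its own neighbourhood
        np.fill_diagonal(A, 1.0)
    # Assumed mask: 0 on edges, a large negative bias (-10e9) on non-edges
    mask = -10e9 * (1.0 - A)
    dense = scores + mask
    # Softmax along axis=1: each row i is normalised over its neighbours j.
    # Subtracting the row maximum first keeps np.exp from overflowing.
    dense = dense - dense.max(axis=1, keepdims=True)
    weights = np.exp(dense)
    return weights / weights.sum(axis=1, keepdims=True)


if __name__ == "__main__":
    # Path graph 0 - 1 - 2 with equal logits everywhere
    A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
    scores = np.zeros((3, 3))
    print(masked_attention_softmax(scores, A).round(3))
```

With equal logits, node 0 splits its attention evenly between itself and node 1, while node 1 attends to all three nodes equally. Passing `add_self_loops=False` drops node i from its own neighbourhood, which is the mismatch with the paper that the original comment describes.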
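
The other two instances point to `pixel_wise_softmax` in `tensorlayer/activation.py`, whose body this record does not show. Assuming, from the name, that it normalises class scores independently at every pixel of a `[batch, height, width, channels]` tensor, a NumPy equivalent is a softmax along the last axis. `pixel_wise_softmax_sketch` is a hypothetical name for illustration, not the tensorlayer function.

```python
import numpy as np


def pixel_wise_softmax_sketch(logits):
    """Softmax over the last (channel) axis of a (batch, height, width, channels) array.

    Hypothetical stand-in for tensorlayer's pixel_wise_softmax: every pixel's
    channel scores are normalised independently into class probabilities.
    """
    # Subtract each pixel's maximum score so np.exp cannot overflow
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = rng.normal(size=(2, 4, 4, 3))  # batch of 2, 4x4 pixels, 3 classes
    probs = pixel_wise_softmax_sketch(logits)
    print(probs.shape, probs.sum(axis=-1).min(), probs.sum(axis=-1).max())
```

Subtracting the per-pixel maximum leaves the result unchanged but avoids overflow for large logits; the same trick applies to the row-wise attention softmax in the stellargraph snippet.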