3252f2117a4b693ca001613b13c28cc2d8cd9eb7,tests/candidates/test_candidates.py,,test_ngrams,#,424

Before Change

PARALLEL = 4

max_docs = 1

session = Meta.init(CONN_STRING).Session()

docs_path = "tests/data/pure_html/lincoln_short.html"

logger.info("Parsing...")

doc_preprocessor = HTMLDocPreprocessor(docs_path, max_docs=max_docs)

corpus_parser = Parser(session, structural=True, lingual=True)

corpus_parser.apply(doc_preprocessor, parallelism=PARALLEL)

assert session.query(Document).count() == max_docs

assert session.query(Sentence).count() == 503

docs = session.query(Document).order_by(Document.name).all()

# Mention Extraction

Person = mention_subclass("Person")

person_ngrams = MentionNgrams(n_max=3)

After Change

)

doc = mention_extractor_udf.apply(doc)

assert len(doc.persons) == 118

mentions = doc.persons

assert len([x for x in mentions if x.context.get_num_words() == 1]) == 49

assert len([x for x in mentions if x.context.get_num_words() > 3]) == 0
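
The two word-count assertions above check that extraction with `n_max=3` yields some single-word spans and no span longer than three words. The sketch below illustrates that property on a plain token list. It is a standalone illustration, not Fonduer's `MentionNgrams`: the `ngrams` helper and the sample sentence are hypothetical, and the counts 118 and 49 in the test depend on the parsed document and cannot be reproduced this way.

```python
from typing import List


def ngrams(words: List[str], n_max: int = 3) -> List[List[str]]:
    """Hypothetical helper: return every contiguous span of 1..n_max words."""
    spans = []
    for start in range(len(words)):
        for length in range(1, n_max + 1):
            # Skip spans that would run past the end of the sentence.
            if start + length <= len(words):
                spans.append(words[start:start + length])
    return spans


# Sample sentence chosen for illustration only.
spans = ngrams("Abraham Lincoln was born in Kentucky".split(), n_max=3)

# Same shape of checks as the test: count spans by number of words.
unigrams = [s for s in spans if len(s) == 1]
too_long = [s for s in spans if len(s) > 3]
```

With `n_max=3`, no generated span can exceed three words, which is why the second assertion in the test expects a count of 0.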

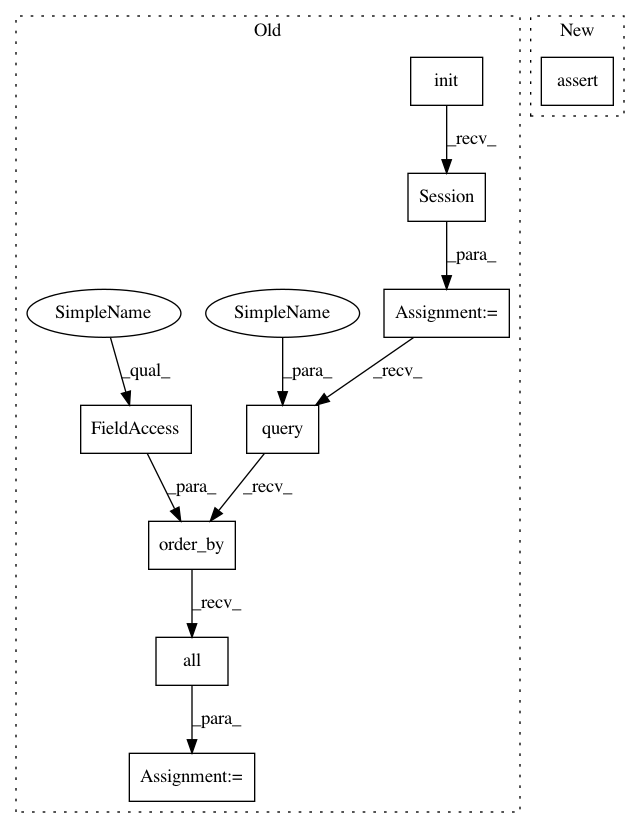

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: HazyResearch/fonduer

Commit Name: 3252f2117a4b693ca001613b13c28cc2d8cd9eb7

Time: 2020-02-14

Author: hiromu.hota@hal.hitachi.com

File Name: tests/candidates/test_candidates.py

Class Name:

Method Name: test_ngrams

Project Name: HazyResearch/fonduer

Commit Name: 3252f2117a4b693ca001613b13c28cc2d8cd9eb7

Time: 2020-02-14

Author: hiromu.hota@hal.hitachi.com

File Name: tests/candidates/test_candidates.py

Class Name:

Method Name: test_multimodal_cand

Project Name: HazyResearch/fonduer

Commit Name: 3252f2117a4b693ca001613b13c28cc2d8cd9eb7

Time: 2020-02-14

Author: hiromu.hota@hal.hitachi.com

File Name: tests/candidates/test_candidates.py

Class Name:

Method Name: test_ngrams

Project Name: HazyResearch/fonduer

Commit Name: e14c08dd732e73cbe2b3e249cba2632663abdd27

Time: 2018-08-29

Author: hiromu.hota@hal.hitachi.com

File Name: tests/parser/test_parser.py

Class Name:

Method Name: test_simple_tokenizer