7e52351ae56a5068131d873252ad4f95d134c33d,python/ray/util/collective/collective_group/nccl_collective_group.py,NCCLGroup,allreduce,#NCCLGroup#Any#Any#,157

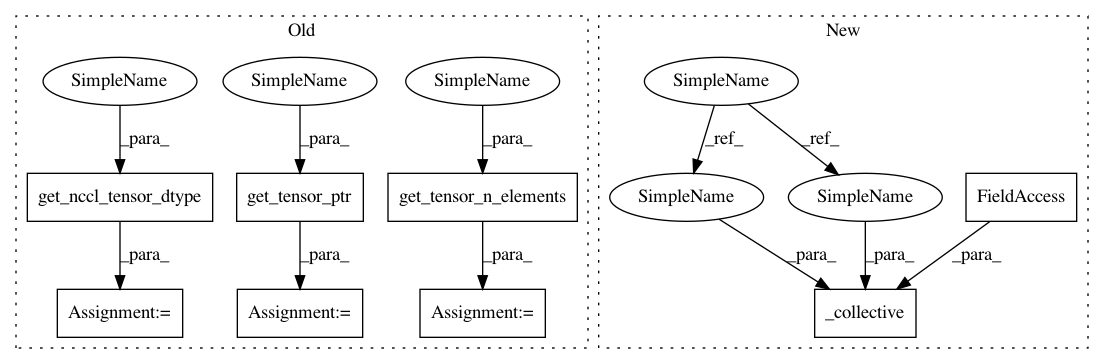

Before Change

# TODO(Hao): implement a simple stream manager here

stream = self._get_cuda_stream()

dtype = nccl_util.get_nccl_tensor_dtype(tensor)

ptr = nccl_util.get_tensor_ptr(tensor)

n_elems = nccl_util.get_tensor_n_elements(tensor)

reduce_op = nccl_util.get_nccl_reduce_op(allreduce_options.reduceOp)

# in-place allreduce

comm.allReduce(ptr, ptr, n_elems, dtype, reduce_op, stream.ptr)

After Change

nccl_util.get_nccl_reduce_op(allreduce_options.reduceOp),

stream.ptr)

self._collective(tensor, tensor, collective_fn)

def barrier(self, barrier_options=BarrierOptions()):

    """Blocks until all processes reach this barrier."""
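The docstring states only the contract: no participant returns from `barrier` until every participant has called it. NCCL has no barrier primitive of its own, and a common workaround is to allreduce a tiny tensor and wait for it to complete. Whether `NCCLGroup.barrier` does exactly that is not shown in this excerpt. The sketch below only illustrates the contract. Threads stand in for processes, a shared counter stands in for the one-element tensor, and `AllReduceBarrier` and `run_demo` are hypothetical names.

```python
import threading
from typing import List


class AllReduceBarrier:
    """Hypothetical single-use barrier modelled as a sum-allreduce of 1 per participant.

    Threads stand in for processes; the shared counter stands in for the
    one-element tensor an NCCL-based barrier would reduce.
    """

    def __init__(self, world_size: int) -> None:
        self.world_size = world_size
        self._total = 0
        self._cond = threading.Condition()

    def wait(self) -> int:
        with self._cond:
            self._total += 1  # this participant contributes 1 to the "reduction"
            self._cond.notify_all()
            # Nobody leaves until the reduced value equals the world size,
            # i.e. until every participant has arrived.
            self._cond.wait_for(lambda: self._total == self.world_size)
            return self._total


def run_demo(world_size: int = 4) -> List[str]:
    barrier = AllReduceBarrier(world_size)
    log: List[str] = []
    log_lock = threading.Lock()

    def worker(rank: int) -> None:
        with log_lock:
            log.append(f"arrive {rank}")
        barrier.wait()
        with log_lock:
            log.append(f"leave {rank}")

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(world_size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


events = run_demo()
# Every "arrive" is logged before any "leave": the barrier property.
print(all(e.startswith("arrive") for e in events[:4]))  # True
```

The ordering in `events` is guaranteed. Each thread logs its arrival before incrementing the counter, and no thread can log a departure until the counter has reached the world size.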

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 8
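The before/after change above illustrates the recorded pattern. Before the change, `allreduce` fetched the stream, computed the pointer, element count, dtype and reduce op, and then called `comm.allReduce` itself. After the change, the NCCL call sits inside a closure named `collective_fn`, which is handed to a shared helper via `self._collective(tensor, tensor, collective_fn)`. The self-contained sketch below reproduces that shape on CPU. It rests on several assumptions that the excerpt does not confirm:

- The closure signature `(input_tensors, output_tensors, comm, stream)` is assumed.
- `FakeComm`, `FakeStream` and `SketchGroup` are hypothetical stand-ins, not Ray or CuPy classes.
- Plain lists of per-rank lists replace GPU tensors.

```python
import operator
from functools import reduce as fold
from typing import Callable, List

Tensor = List[float]  # hypothetical: one rank's buffer, standing in for a GPU tensor
BinaryOp = Callable[[float, float], float]


class FakeStream:
    """Hypothetical stand-in for a CUDA stream; only exposes a ptr attribute."""

    ptr = 0


class FakeComm:
    """Hypothetical communicator that sees every simulated rank's buffer at once."""

    def all_reduce(self, inputs: List[Tensor], outputs: List[Tensor],
                   op: BinaryOp, stream_ptr: int) -> None:
        # Reduce element-wise across ranks, then give every rank the result.
        result = [fold(op, column) for column in zip(*inputs)]
        for out in outputs:
            out[:] = result

    def broadcast(self, inputs: List[Tensor], outputs: List[Tensor],
                  root: int, stream_ptr: int) -> None:
        # Copy the root rank's buffer into every rank's output buffer.
        for out in outputs:
            out[:] = inputs[root]


class SketchGroup:
    def __init__(self) -> None:
        self._comm = FakeComm()
        self._stream = FakeStream()
        self.calls = 0  # number of trips through the shared helper

    def _collective(self, input_tensors, output_tensors, collective_fn) -> None:
        # Setup that every op used to repeat (communicator and stream lookup)
        # lives here once; the op-specific call arrives as collective_fn.
        self.calls += 1
        collective_fn(input_tensors, output_tensors, self._comm, self._stream)

    def allreduce(self, tensors: List[Tensor], op: BinaryOp = operator.add) -> None:
        def collective_fn(input_tensors, output_tensors, comm, stream):
            comm.all_reduce(input_tensors, output_tensors, op, stream.ptr)

        self._collective(tensors, tensors, collective_fn)  # in-place

    def broadcast(self, tensors: List[Tensor], root: int = 0) -> None:
        def collective_fn(input_tensors, output_tensors, comm, stream):
            comm.broadcast(input_tensors, output_tensors, root, stream.ptr)

        self._collective(tensors, tensors, collective_fn)  # in-place


group = SketchGroup()
buffers = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # three simulated ranks
group.allreduce(buffers)
print(buffers)  # [[9.0, 12.0], [9.0, 12.0], [9.0, 12.0]]
```

In this sketch, adding another collective only means writing its closure. A change to the shared setup, such as the stream manager the TODO asks for, would touch `_collective` alone rather than each of the four methods listed below.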

Instances

Project Name: ray-project/ray

Commit Name: 7e52351ae56a5068131d873252ad4f95d134c33d

Time: 2021-01-05

Author: zhisbug@users.noreply.github.com

File Name: python/ray/util/collective/collective_group/nccl_collective_group.py

Class Name: NCCLGroup

Method Name: allreduce

Project Name: ray-project/ray

Commit Name: 7e52351ae56a5068131d873252ad4f95d134c33d

Time: 2021-01-05

Author: zhisbug@users.noreply.github.com

File Name: python/ray/util/collective/collective_group/nccl_collective_group.py

Class Name: NCCLGroup

Method Name: broadcast

Project Name: ray-project/ray

Commit Name: 7e52351ae56a5068131d873252ad4f95d134c33d

Time: 2021-01-05

Author: zhisbug@users.noreply.github.com

File Name: python/ray/util/collective/collective_group/nccl_collective_group.py

Class Name: NCCLGroup

Method Name: allgather

Project Name: ray-project/ray

Commit Name: 7e52351ae56a5068131d873252ad4f95d134c33d

Time: 2021-01-05

Author: zhisbug@users.noreply.github.com

File Name: python/ray/util/collective/collective_group/nccl_collective_group.py

Class Name: NCCLGroup

Method Name: reduce