30a0e7b4b572b1a48d64d2f7f3574493fd3c7d56,train.py,,train,#Any#,32

Before Change

//////////////////////////////////////////////////

iteration = infos["iter"]

epoch = infos["epoch"]

loader.iterators = infos.get("iterators", loader.iterators)

loader.split_ix = infos.get("split_ix", loader.split_ix)

if opt.load_best_score == 1:

best_val_score = infos.get("best_val_score", None)

if opt.noamopt:

optimizer._step = iteration

// Flag indicating the end of an epoch

// Always set to True at the beginning so the learning rate and related settings are initialized

epoch_done = True

// Ensure the model is in training mode

dp_lw_model.train()

// Start training

try:

while True:

if epoch_done:

if not opt.noamopt and not opt.reduce_on_plateau:

// Assign the learning rate

if epoch > opt.learning_rate_decay_start and opt.learning_rate_decay_start >= 0:

frac = (epoch - opt.learning_rate_decay_start) // opt.learning_rate_decay_every

decay_factor = opt.learning_rate_decay_rate ** frac

opt.current_lr = opt.learning_rate * decay_factor

else:

opt.current_lr = opt.learning_rate

utils.set_lr(optimizer, opt.current_lr) // set the decayed rate

// Assign the scheduled sampling prob

if epoch > opt.scheduled_sampling_start and opt.scheduled_sampling_start >= 0:

frac = (epoch - opt.scheduled_sampling_start) // opt.scheduled_sampling_increase_every

opt.ss_prob = min(opt.scheduled_sampling_increase_prob * frac, opt.scheduled_sampling_max_prob)

model.ss_prob = opt.ss_prob

// Check whether to start self-critical training

if opt.self_critical_after != -1 and epoch >= opt.self_critical_after:

sc_flag = True

init_scorer(opt.cached_tokens)

else:

sc_flag = False

// Check whether to start structure loss training

if opt.structure_after != -1 and epoch >= opt.structure_after:

struc_flag = True

init_scorer(opt.cached_tokens)

else:

struc_flag = False

epoch_done = False

start = time.time()

// Load data from train split (0)

data = loader.get_batch("train")

print("Read data:", time.time() - start)

torch.cuda.synchronize()

start = time.time()

tmp = [data["fc_feats"], data["att_feats"], data["labels"], data["masks"], data["att_masks"]]

tmp = [_ if _ is None else _.cuda() for _ in tmp]

fc_feats, att_feats, labels, masks, att_masks = tmp

optimizer.zero_grad()

model_out = dp_lw_model(fc_feats, att_feats, labels, masks, att_masks, data["gts"], torch.arange(0, len(data["gts"])), sc_flag, struc_flag)

loss = model_out["loss"].mean()

loss.backward()

utils.clip_gradient(optimizer, opt.grad_clip)

optimizer.step()

train_loss = loss.item()

torch.cuda.synchronize()

end = time.time()

if struc_flag:

print("iter {} (epoch {}), train_loss = {:.3f}, lm_loss = {:.3f}, struc_loss = {:.3f}, time/batch = {:.3f}" \

.format(iteration, epoch, train_loss, model_out["lm_loss"].mean().item(), model_out["struc_loss"].mean().item(), end - start))

elif not sc_flag:

print("iter {} (epoch {}), train_loss = {:.3f}, time/batch = {:.3f}" \

.format(iteration, epoch, train_loss, end - start))

else:

print("iter {} (epoch {}), avg_reward = {:.3f}, time/batch = {:.3f}" \

.format(iteration, epoch, model_out["reward"].mean(), end - start))

// Update the iteration and epoch

iteration += 1

if data["bounds"]["wrapped"]:

epoch += 1

epoch_done = True

// Write the training loss summary

if (iteration % opt.losses_log_every == 0):

tb_summary_writer.add_scalar("train_loss", train_loss, iteration)

if opt.noamopt:

opt.current_lr = optimizer.rate()

elif opt.reduce_on_plateau:

opt.current_lr = optimizer.current_lr

tb_summary_writer.add_scalar("learning_rate", opt.current_lr, iteration)

tb_summary_writer.add_scalar("scheduled_sampling_prob", model.ss_prob, iteration)

if sc_flag:

tb_summary_writer.add_scalar("avg_reward", model_out["reward"].mean(), iteration)

elif struc_flag:

tb_summary_writer.add_scalar("lm_loss", model_out["lm_loss"].mean().item(), iteration)

tb_summary_writer.add_scalar("struc_loss", model_out["struc_loss"].mean().item(), iteration)

tb_summary_writer.add_scalar("reward", model_out["reward"].mean().item(), iteration)

histories["loss_history"][iteration] = train_loss if not sc_flag else model_out["reward"].mean()

histories["lr_history"][iteration] = opt.current_lr

histories["ss_prob_history"][iteration] = model.ss_prob

// update infos

infos["iter"] = iteration

infos["epoch"] = epoch

infos["iterators"] = loader.iterators

infos["split_ix"] = loader.split_ix

// Evaluate on the validation set and save the model

if (iteration % opt.save_checkpoint_every == 0):
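The two per-epoch schedules in the listing above (learning rate decay and scheduled sampling probability) can be isolated as pure functions. This is a minimal sketch rather than code from the repository: the function names `step_decay_lr` and `scheduled_sampling_prob` are hypothetical, the `//` in the two `frac = ...` lines is read as Python floor division, and returning `0.0` before scheduled sampling starts is an assumption (the listing simply leaves `opt.ss_prob` unchanged in that case).

```python
def step_decay_lr(base_lr, epoch, decay_start, decay_every, decay_rate):
    """Step-wise exponential decay, mirroring the learning rate branch above.

    decay_start < 0 disables decay, as in the original condition.
    """
    if decay_start >= 0 and epoch > decay_start:
        # Number of completed decay intervals since decay_start
        frac = (epoch - decay_start) // decay_every
        return base_lr * decay_rate ** frac
    return base_lr


def scheduled_sampling_prob(epoch, start, increase_every, increase_prob, max_prob):
    """Linearly increasing sampling probability, capped at max_prob.

    Assumption: returns 0.0 while the schedule is inactive.
    """
    if start >= 0 and epoch > start:
        frac = (epoch - start) // increase_every
        return min(increase_prob * frac, max_prob)
    return 0.0
```

Keeping the schedules free of `opt` and `optimizer` makes them easy to check in isolation; the training loop would still pass the result to `utils.set_lr` and `model.ss_prob` as in the listing.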

After Change

epoch = infos["epoch"]

// For backward compatibility

if "iterators" in infos:

infos["loader_state_dict"] = {split: {"index_list": infos["split_ix"][split], "iter_counter": infos["iterators"][split]} for split in ["train", "val", "test"]}

loader.load_state_dict(infos["loader_state_dict"])

if opt.load_best_score == 1:

best_val_score = infos.get("best_val_score", None)

if opt.noamopt:
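The backward-compatibility step in the changed code converts the legacy `iterators` and `split_ix` entries into a single `loader_state_dict`. The sketch below reproduces only that conversion on plain dictionaries; the helper name `upgrade_infos` is hypothetical, and the behavior of `loader.load_state_dict` is not shown here because the record does not include it.

```python
def upgrade_infos(infos, splits=("train", "val", "test")):
    """Add a 'loader_state_dict' entry to legacy checkpoint infos, in place.

    Legacy infos store per-split positions under 'iterators' and per-split
    index orderings under 'split_ix'. Newer infos are left untouched.
    """
    if "iterators" in infos:
        infos["loader_state_dict"] = {
            split: {
                "index_list": infos["split_ix"][split],
                "iter_counter": infos["iterators"][split],
            }
            for split in splits
        }
    return infos


# Example with made-up values for a legacy checkpoint
legacy = {
    "iter": 10,
    "epoch": 1,
    "iterators": {"train": 3, "val": 0, "test": 0},
    "split_ix": {"train": [2, 0, 1], "val": [0], "test": [0]},
}
upgraded = upgrade_infos(legacy)
```

After the call, `upgraded["loader_state_dict"]["train"]` holds the train split's index list and iterator counter together, which is the shape the changed code passes to `loader.load_state_dict`.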

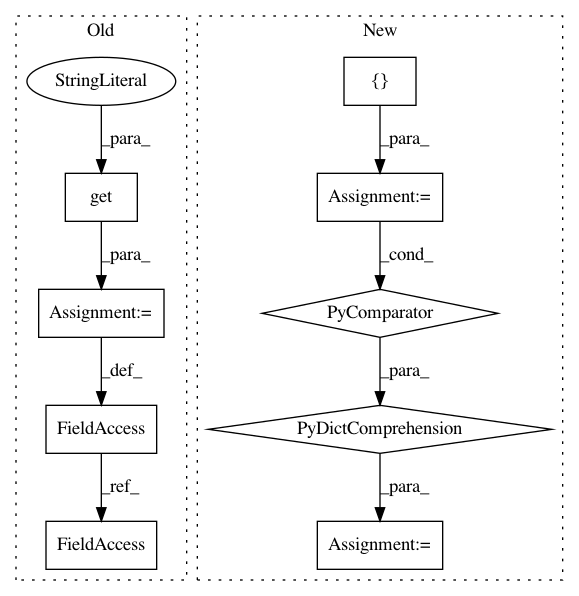

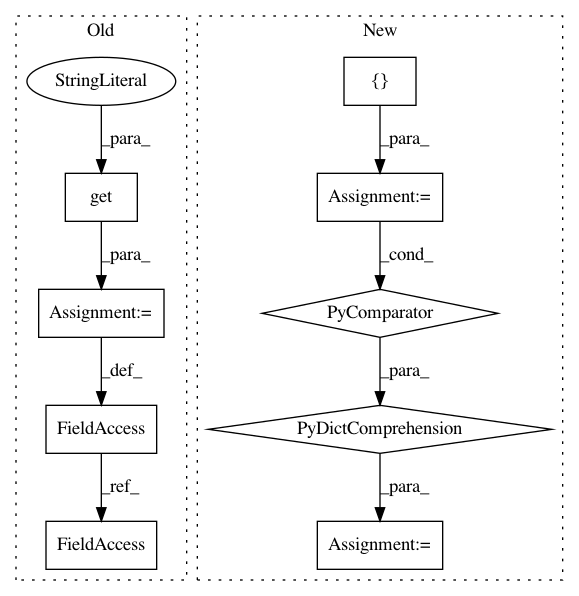

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: ruotianluo/ImageCaptioning.pytorch

Commit Name: 30a0e7b4b572b1a48d64d2f7f3574493fd3c7d56

Time: 2019-12-26

Author: rluo@ttic.edu

File Name: train.py

Class Name:

Method Name: train

Project Name: scipy/scipy

Commit Name: d430e92f1bc58b28567439a1028bc1697e7a7869

Time: 2020-08-04

Author: 44255917+swallan@users.noreply.github.com

File Name: benchmarks/benchmarks/stats.py

Class Name: ContinuousFitAnalyticalMLEOverride

Method Name: setup