0ac2b33e8c63304a50db7d2b484368299706b58b,slm_lab/agent/net/recurrent.py,RecurrentNet,training_step,#RecurrentNet#Any#Any#Any#Any#Any#,164

Before Change

if self.clip_grad:

logger.debug(f"Clipping gradient: {self.clip_grad_val}")

torch.nn.utils.clip_grad_norm_(self.parameters(), self.clip_grad_val)

if global_net is None:

self.optim.step()

else: // distributed training with global net

net_util.push_global_grad(self, global_net)

self.optim.step()

net_util.pull_global_param(self, global_net)After Change

def training_step(self, x=None, y=None, loss=None, retain_graph=False, lr_t=None):

"""Takes a single training step: one forward and one backwards pass"""

if hasattr(self, "model_tails") and x is not None:

raise ValueError("Loss computation from x,y not supported for multitails")

self.lr_scheduler.step(epoch=lr_t)

self.train()

self.optim.zero_grad()

if loss is None:

out = self(x)

loss = self.loss_fn(out, y)

assert not torch.isnan(loss).any(), loss

if net_util.to_assert_trained():

assert_trained = net_util.gen_assert_trained(self.rnn_model)

loss.backward(retain_graph=retain_graph)

if self.clip_grad_val is not No ne:

nn.utils.clip_grad_norm_(self.parameters(), self.clip_grad_val)

self.optim.step()

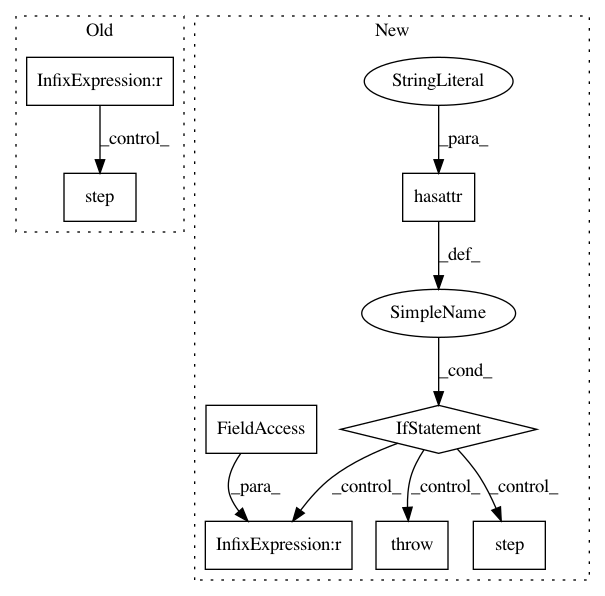

if net_util.to_assert_trained():In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances Project Name: kengz/SLM-Lab

Commit Name: 0ac2b33e8c63304a50db7d2b484368299706b58b

Time: 2018-11-14

Author: kengzwl@gmail.com

File Name: slm_lab/agent/net/recurrent.py

Class Name: RecurrentNet

Method Name: training_step

Project Name: ray-project/ray

Commit Name: 415be78cc0d1275a29d0ceda550d0d7a7a5224ea

Time: 2020-09-08

Author: amogkam@users.noreply.github.com

File Name: python/ray/util/sgd/torch/training_operator.py

Class Name: TrainingOperator

Method Name: train_epoch

Project Name: kengz/SLM-Lab

Commit Name: 0ac2b33e8c63304a50db7d2b484368299706b58b

Time: 2018-11-14

Author: kengzwl@gmail.com

File Name: slm_lab/agent/net/mlp.py

Class Name: MLPNet

Method Name: training_step