5135e42987c9a6349e3c198fabdb939774d21fbb,contents/10_A3C/A3C_continuous_action.py,ACNet,__init__,#ACNet#Any#Any#,45

Before Change

with tf.name_scope("a_loss"):

log_prob = normal_dist.log_prob(self.a_his)

exp_v = log_prob * td

entropy = normal_dist.entropy()  # encourage exploration

self.exp_v = ENTROPY_BETA * entropy + exp_v

self.a_loss = tf.reduce_mean(-self.exp_v)

with tf.name_scope("choose_a"):  # use local params to choose action

self.A = tf.clip_by_value(tf.squeeze(normal_dist.sample(1), axis=0), A_BOUND[0], A_BOUND[1])

with tf.name_scope("local_grad"):

self.a_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + "/actor")

self.c_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + "/critic")

self.a_grads = tf.gradients(self.a_loss, self.a_params)

self.c_grads = tf.gradients(self.c_loss, self.c_params)

with tf.name_scope("sync"):

with tf.name_scope("pull"):

self.pull_a_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.a_params, globalAC.a_params)]

self.pull_c_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.c_params, globalAC.c_params)]

with tf.name_scope("push"):

self.update_a_op = OPT_A.apply_gradients(zip(self.a_grads, globalAC.a_params))

self.update_c_op = OPT_C.apply_gradients(zip(self.c_grads, globalAC.c_params))

def _build_net(self ):

After Change

with tf.name_scope("a_loss"):

log_prob = normal_dist.log_prob(self.a_his)

exp_v = log_prob * td

entropy = tf.stop_gradient(normal_dist.entropy())  # encourage exploration

self.exp_v = ENTROPY_BETA * entropy + exp_v

self.a_loss = tf.reduce_mean(-self.exp_v)

with tf.name_scope("choose_a"):  # use local params to choose action

self.A = tf.clip_by_value(tf.squeeze(normal_dist.sample(1), axis=0), A_BOUND[0], A_BOUND[1])

with tf.name_scope("local_grad"):

self.a_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + "/actor")

self.c_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + "/critic")

self.a_grads = tf.gradients(self.a_loss, self.a_params)

self.c_grads = tf.gradients(self.c_loss, self.c_params)

with tf.name_scope("sync"):

with tf.name_scope("pull"):

self.pull_a_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.a_params, globalAC.a_params)]

self.pull_c_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.c_params, globalAC.c_params)]

with tf.name_scope("push"):

self.update_a_op = OPT_A.apply_gradients(zip(self.a_grads, globalAC.a_params))

self.update_c_op = OPT_C.apply_gradients(zip(self.c_grads, globalAC.c_params))

def _build_net(self):
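The only semantic difference between the two versions is the `tf.stop_gradient` wrapper around the entropy term. `tf.stop_gradient` leaves the forward value unchanged but treats its input as a constant during backpropagation, so after the change the entropy bonus still appears in `self.exp_v` yet contributes nothing to `self.a_grads`. The record does not state why the change was made. The sketch below is a plain-Python illustration of that effect for a single sample from a one-dimensional normal policy, not the repository's code: the helper names (`log_prob`, `entropy`, `a_loss`, `d_dsigma`, `frozen_sigma`), the numeric inputs, and the value of `ENTROPY_BETA` are assumptions made for the example, and gradients are estimated by finite differences instead of TensorFlow.

```python
import math

ENTROPY_BETA = 0.01  # name taken from the snippet; the value is assumed for illustration


def log_prob(a, mu, sigma):
    """Log-density of Normal(mu, sigma) evaluated at action a."""
    return -0.5 * ((a - mu) / sigma) ** 2 - math.log(sigma) - 0.5 * math.log(2 * math.pi)


def entropy(sigma):
    """Differential entropy of Normal(mu, sigma); it depends only on sigma."""
    return 0.5 * math.log(2 * math.pi * math.e) + math.log(sigma)


def a_loss(a, td, mu, sigma, frozen_sigma=None):
    """Single-sample actor loss, mirroring -(ENTROPY_BETA * entropy + log_prob * td).

    Passing frozen_sigma evaluates the entropy at a fixed copy of sigma,
    which emulates tf.stop_gradient: same forward value, no gradient path.
    """
    ent = entropy(sigma if frozen_sigma is None else frozen_sigma)
    exp_v = ENTROPY_BETA * ent + log_prob(a, mu, sigma) * td
    return -exp_v


def d_dsigma(f, sigma, eps=1e-6):
    """Central finite-difference estimate of df/dsigma."""
    return (f(sigma + eps) - f(sigma - eps)) / (2 * eps)


# Hypothetical sample: action, TD error, and policy parameters.
a, td, mu, sigma = 0.3, 1.5, 0.0, 0.8

# "Before Change": the entropy term is differentiated along with log_prob.
grad_before = d_dsigma(lambda s: a_loss(a, td, mu, s), sigma)

# "After Change": the entropy is held constant while sigma is perturbed.
grad_after = d_dsigma(lambda s: a_loss(a, td, mu, s, frozen_sigma=sigma), sigma)

# The gap is exactly the entropy term's gradient contribution, -ENTROPY_BETA / sigma.
entropy_grad = grad_before - grad_after

print("grad before:", grad_before)
print("grad after: ", grad_after)
print("entropy contribution:", entropy_grad)
```

Under these assumptions the loss value is identical in both versions, and the two gradients differ only by the entropy term's derivative. In other words, the "After Change" form keeps the entropy in the reported objective while removing its influence on the actor update.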

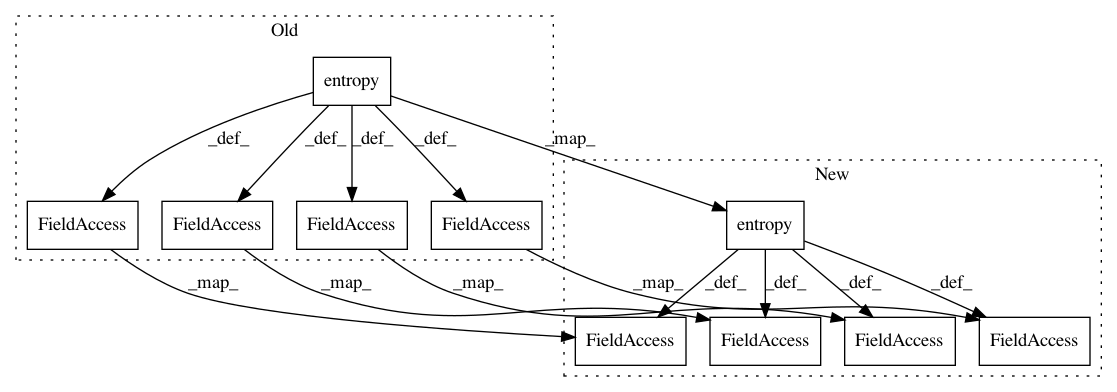

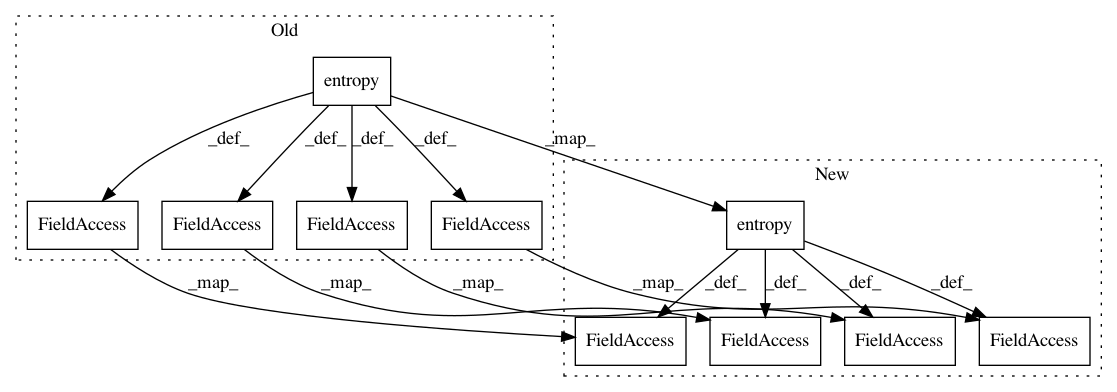

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 10

Instances

Project Name: MorvanZhou/Reinforcement-learning-with-tensorflow

Commit Name: 5135e42987c9a6349e3c198fabdb939774d21fbb

Time: 2017-08-10

Author: morvanzhou@gmail.com

File Name: contents/10_A3C/A3C_continuous_action.py

Class Name: ACNet

Method Name: __init__

Project Name: MorvanZhou/Reinforcement-learning-with-tensorflow

Commit Name: 5135e42987c9a6349e3c198fabdb939774d21fbb

Time: 2017-08-10

Author: morvanzhou@gmail.com

File Name: contents/10_A3C/A3C_RNN.py

Class Name: ACNet

Method Name: __init__

Project Name: MorvanZhou/Reinforcement-learning-with-tensorflow

Commit Name: c885f2d6ec036f86a83587793c02caa2c8cac2b0

Time: 2017-09-23

Author: morvanzhou@gmail.com

File Name: contents/10_A3C/A3C_continuous_action.py

Class Name: ACNet

Method Name: __init__

Project Name: MorvanZhou/Reinforcement-learning-with-tensorflow

Commit Name: c885f2d6ec036f86a83587793c02caa2c8cac2b0

Time: 2017-09-23

Author: morvanzhou@gmail.com

File Name: contents/10_A3C/A3C_RNN.py

Class Name: ACNet

Method Name: __init__