06c9cefed73cfd43f9453c616e1b9d3ef63f58cf,fairseq/models/speech_to_text/s2t_transformer.py,S2TTransformerEncoder,forward,#S2TTransformerEncoder#Any#Any#,297

Before Change

x += positions

x = self.dropout_module(x)

for layer in self.transformer_layers:

x = layer(x, encoder_padding_mask)

if self.layer_norm is not None:

x = self.layer_norm(x)

return {

"encoder_out": [x],  # T x B x C

"encoder_padding_mask": [encoder_padding_mask] if encoder_padding_mask.any() else [],  # B x T

"encoder_embedding": [],  # B x T x C

"encoder_states": [],  # List[T x B x C]

"src_tokens": [],

"src_lengths": [],

}

def reorder_encoder_out(self, encoder_out, new_order):

new_encoder_out = (

[] if len(encoder_out["encoder_out"]) == 0

After Change

with torch.no_grad():

x = self._forward(src_tokens, src_lengths)

else:

x = self._forward(src_tokens, src_lengths)

return x

def reorder_encoder_out(self, encoder_out, new_order):

new_encoder_out = (

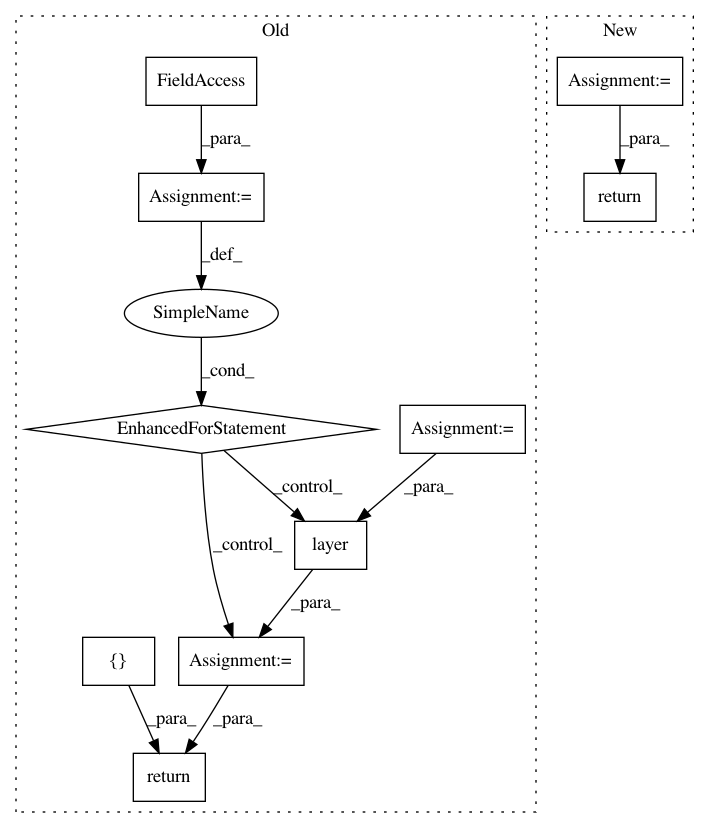

[] if len(encoder_out["encoder_out"]) == 0

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 10
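
The change recorded above moves the body of `forward` into a `_forward` helper, so that the public method only decides whether to run it with gradients disabled. A minimal sketch of that shape follows. It rests on several assumptions: the `frozen` flag is hypothetical (the excerpt does not show the condition that guards the `with` block), the `no_grad` context manager is a stand-in for `torch.no_grad()` that only flips a flag so the sketch runs without PyTorch, and `_forward` is a stub rather than the real encoder body.

```python
from contextlib import contextmanager

# Stand-in for torch.no_grad(): it only flips a module-level flag,
# so the sketch can run without PyTorch installed.
GRAD_ENABLED = True


@contextmanager
def no_grad():
    global GRAD_ENABLED
    previous = GRAD_ENABLED
    GRAD_ENABLED = False
    try:
        yield
    finally:
        # Restore the earlier state even if the wrapped code raises.
        GRAD_ENABLED = previous


class Encoder:
    def __init__(self, frozen):
        # Hypothetical flag; the excerpt does not show the real condition.
        self.frozen = frozen

    def _forward(self, src_tokens, src_lengths):
        # The original forward body would live here. This stub only reports
        # whether gradients were enabled while it ran.
        return {"encoder_out": [src_tokens], "grad_enabled": GRAD_ENABLED}

    def forward(self, src_tokens, src_lengths):
        # The public entry point only chooses the execution context.
        if self.frozen:
            with no_grad():
                x = self._forward(src_tokens, src_lengths)
        else:
            x = self._forward(src_tokens, src_lengths)
        return x
```

Calling `Encoder(frozen=True).forward(tokens, lengths)` runs the stub with the flag cleared, while `frozen=False` leaves it set. In real PyTorch code the same structure would use `torch.no_grad()` in place of the stand-in.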

Instances

Project Name: pytorch/fairseq

Commit Name: 06c9cefed73cfd43f9453c616e1b9d3ef63f58cf

Time: 2021-03-25

Author: changhan@fb.com

File Name: fairseq/models/speech_to_text/s2t_transformer.py

Class Name: S2TTransformerEncoder

Method Name: forward

Project Name: osmr/imgclsmob

Commit Name: 4ad1ebea7992d840e028ff513dc81e0073755d6e

Time: 2019-02-24

Author: osemery@gmail.com

File Name: pytorch/pytorchcv/models/others/oth_fractalnet_cifar10_2.py

Class Name: FractalNet

Method Name: forward

Project Name: tensorflow/agents

Commit Name: 150442111a9db973dcce7d20fb0cf386cebf60b0

Time: 2019-12-11

Author: ebrevdo@google.com

File Name: tf_agents/agents/ddpg/actor_network.py

Class Name: ActorNetwork

Method Name: call