144b5afdd5d9262a5a9a9fdd9afa56a14b629ba1,texar/modules/decoders/transformer_decoders.py,TransformerDecoder,_build,#TransformerDecoder#Any#Any#Any#Any#,78

Before Change

for i in range(self._hparams.num_blocks):
    with tf.variable_scope("num_blocks_{}".format(i)):
        with tf.variable_scope("self_attention"):
            dec = layers.layer_normalize(self.dec)
            dec = layers.multihead_attention(
                queries=dec,
                keys=dec,
                queries_valid_length=tgt_length,
                keys_valid_length=tgt_length,
                num_units=self._hparams.embedding.dim,
                num_heads=self._hparams.num_heads,
                dropout_rate=self._hparams.dropout,
                causality=True,
                scope="self_attention")
            dec = tf.layers.dropout(
                dec,
                rate=self._hparams.dropout,
                training=context.is_train())
            self.dec += dec
        with tf.variable_scope("encdec_attention"):
            dec = layers.layer_normalize(self.dec)
            dec = layers.multihead_attention(
                queries=dec,
                keys=encoder_output,
                queries_valid_length=tgt_length,
                keys_valid_length=src_length,
                num_units=self._hparams.embedding.dim,
                num_heads=self._hparams.num_heads,
                dropout_rate=self._hparams.dropout,
                causality=False,
                scope="multihead_attention")
            dec = tf.layers.dropout(
                dec,
                rate=self._hparams.dropout,
                training=context.is_train())
            self.dec += dec
        poswise_network = FeedForwardNetwork(hparams=self._hparams["poswise_feedforward"])
        with tf.variable_scope(poswise_network.variable_scope):
            dec = layers.layer_normalize(self.dec)
            dec = poswise_network(dec)
            dec = tf.layers.dropout(
                dec,
                rate=self._hparams.dropout,
                training=context.is_train())
            self.dec += dec
# share the projection weight with word embedding
if self._hparams.share_embed_and_transform:
    batch_size, length= tf.shape(self.dec)[0], tf.shape(self.dec)[1]
    depth = self.dec.get_shape()[2]
    self.dec = tf.reshape(self.dec, [-1, depth])
    self.logits = tf.matmul(self.dec, self._output_transform)
    self.logits = tf.reshape(self.logits, [batch_size, length, self._vocab_size])
else:

After Change

self.dec += tf.nn.embedding_lookup(self.position_dec_embedding,\
    tf.tile(tf.expand_dims(tf.range(tf.shape(inputs)[1]), 0), [tf.shape(inputs)[0], 1]))
self.dec = tf.layers.dropout(
    self.dec,
    rate=self._hparams.dropout,
    training=context.is_train()
)
for i in range(self._hparams.num_blocks):
    with tf.variable_scope("num_blocks_{}".format(i)):
        with tf.variable_scope("self_attention"):
            selfatt_output = layers.multihead_attention(
                queries=layers.layer_normalize(self.dec),
                keys=None,
                queries_valid_length=tgt_length,
                keys_valid_length=tgt_length,
                num_units=self._hparams.embedding.dim,
                num_heads=self._hparams.num_heads,
                dropout_rate=self._hparams.dropout,
                causality=True,
                scope="self_attention")
            self.dec = self.dec + tf.layers.dropout(
                selfatt_output,
                rate=self._hparams.dropout,
                training=context.is_train()
            )
        with tf.variable_scope("encdec_attention"):
            encdec_output = layers.multihead_attention(
                queries=layers.layer_normalize(self.dec),
                keys=encoder_output,
                queries_valid_length=tgt_length,
                keys_valid_length=src_length,
                num_units=self._hparams.embedding.dim,
                num_heads=self._hparams.num_heads,
                dropout_rate=self._hparams.dropout,
                causality=False,
                scope="multihead_attention")
            self.dec = self.dec + tf.layers.dropout(encdec_output, \
                rate=self._hparams.dropout,
                training=context.is_train()
            )
        poswise_network = FeedForwardNetwork(hparams=self._hparams["poswise_feedforward"])
        with tf.variable_scope(poswise_network.variable_scope):
            sub_output = tf.layers.dropout(
                poswise_network(layers.layer_normalize(self.dec)),
                rate=self._hparams.dropout,
                training=context.is_train()
            )
            self.dec = self.dec + sub_output
self.dec = layers.layer_normalize(self.dec)
# share the projection weight with word embedding
if self._hparams.share_embed_and_transform:
    batch_size, length= tf.shape(self.dec)[0], tf.shape(self.dec)[1]
    depth = self.dec.get_shape()[2]
    self.dec = tf.reshape(self.dec, [-1, depth])
    self.logits = tf.matmul(self.dec, self._output_transform)
    self.logits = tf.reshape(self.logits, [batch_size, length, self._vocab_size])
else:
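
Both listings apply each sublayer in the same way: normalize `self.dec`, run the sublayer, apply dropout, and add the result back to `self.dec`. The After Change fragment additionally normalizes the stack output once before the projection. The following is a minimal pure-Python sketch of that data flow, not the texar implementation. The names `layer_norm`, `pre_norm_residual`, and `decoder_stack` are hypothetical, dropout is omitted (as if `context.is_train()` were false), and the normalization has no learned scale or bias, which may differ from `layers.layer_normalize`.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize one feature vector to zero mean and (near) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def pre_norm_residual(x, sublayer):
    """Return x + sublayer(layer_norm(x)); only the sublayer input is normalized."""
    out = sublayer(layer_norm(x))
    return [a + b for a, b in zip(x, out)]


def decoder_stack(x, sublayers):
    """Apply each sublayer with a residual connection, then normalize once at the end."""
    for sublayer in sublayers:
        x = pre_norm_residual(x, sublayer)
    return layer_norm(x)


if __name__ == "__main__":
    # Toy sublayers standing in for attention and the feed-forward network.
    toy_sublayers = [lambda h: [2.0 * v for v in h], lambda h: [v + 1.0 for v in h]]
    print(decoder_stack([1.0, 2.0, 3.0, 4.0], toy_sublayers))
```

Because the residual stream itself is never normalized inside the loop, a single normalization after the last block keeps the input to the output projection on a consistent scale.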

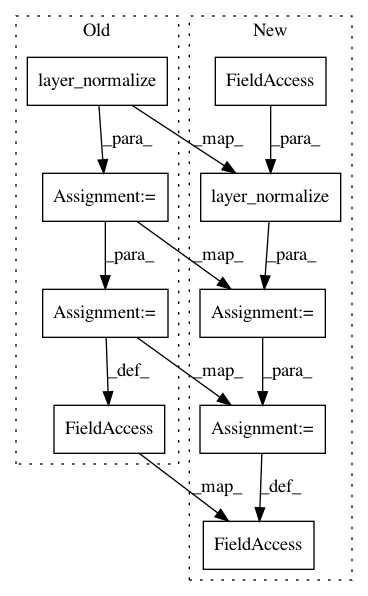

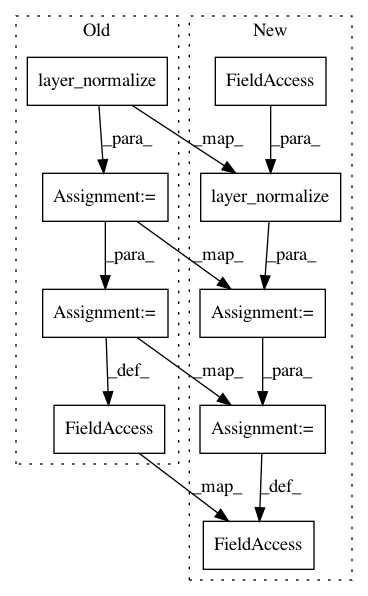
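
The first statement of the After Change listing builds a `[batch, length]` matrix of position indices with `tf.range`, `tf.expand_dims`, and `tf.tile`, then looks each index up in `self.position_dec_embedding`. The sketch below reproduces that indexing with plain Python lists so the shapes are easy to follow. The function names and the toy embedding table are hypothetical and used only for illustration.

```python
def position_ids(batch_size, length):
    """Mimic tile(expand_dims(range(length), 0), [batch_size, 1])."""
    row = list(range(length))                      # range(length)      -> [length]
    return [list(row) for _ in range(batch_size)]  # expand_dims + tile -> [batch, length]


def embedding_lookup(table, ids):
    """Replace every index with its row of the table: [batch, length, depth]."""
    return [[table[i] for i in row] for row in ids]


if __name__ == "__main__":
    # Toy position table with 3 positions and depth 2 (made-up values).
    table = [[0.0, 0.1], [1.0, 1.1], [2.0, 2.1]]
    ids = position_ids(batch_size=2, length=3)
    print(ids)
    print(embedding_lookup(table, ids))
```

Every row of the index matrix is identical, so each sequence in the batch receives the same positional vectors, which are then added to the token embeddings held in `self.dec`.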

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: asyml/texar

Commit Name: 144b5afdd5d9262a5a9a9fdd9afa56a14b629ba1

Time: 2018-03-19

Author: zhiting.hu@petuum.com

File Name: texar/modules/decoders/transformer_decoders.py

Class Name: TransformerDecoder

Method Name: _build

Project Name: asyml/texar

Commit Name: 144b5afdd5d9262a5a9a9fdd9afa56a14b629ba1

Time: 2018-03-19

Author: zhiting.hu@petuum.com

File Name: texar/modules/decoders/transformer_decoders.py

Class Name: TransformerDecoder

Method Name: _build

Project Name: asyml/texar

Commit Name: 144b5afdd5d9262a5a9a9fdd9afa56a14b629ba1

Time: 2018-03-19

Author: zhiting.hu@petuum.com

File Name: texar/modules/encoders/transformer_encoders.py

Class Name: TransformerEncoder

Method Name: _build

Project Name: asyml/texar

Commit Name: b5b06c0f262413ef62c4bfff996f3189673507b1

Time: 2018-03-23

Author: zhiting.hu@petuum.com

File Name: texar/modules/encoders/transformer_encoders.py

Class Name: TransformerEncoder

Method Name: _build