ba2af6efeb086391d286ee5d86635f723e806c15,fonduer/parser/parser.py,SimpleTokenizer,parse,#SimpleTokenizer#Any#Any#,30

Before Change

for x in words]))[:-1]

text = " ".join(words)

stable_id = construct_stable_id(document, "phrase", i, i)

yield {

"text": text,

"words": words,

"char_offsets": char_offsets,

"stable_id": stable_id

}

i += 1

class OmniParser(UDFRunner):

After Change

if not len(text.strip()):

continue

words = text.split()

char_offsets = [0] + [int(_) for _ in np.cumsum([len(x) + 1

for x in words])[:-1]]

text = " ".join(words)

stable_id = construct_stable_id(document, "phrase", i, i)

yield {

"text": text,

"words": words,

"pos_tags": [""] * len(words),

"ner_tags": [""] * len(words),

"lemmas": [""] * len(words),

"dep_parents": [0] * len(words),

"dep_labels": [""] * len(words),

"char_offsets": char_offsets,

"abs_char_offsets": char_offsets,

"stable_id": stable_id

}

i += 1

class OmniParser(UDFRunner):
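
The "After Change" fragment starts inside a loop whose header is not shown, so the following is only a minimal, self-contained sketch of what it appears to do. It skips blank segments, computes each word's start offset in the re-joined text, and pads the linguistic fields with placeholders so every record has the same keys. The names `simple_parse` and `make_stable_id`, the `delim` parameter, and the stable-id string format are assumptions made for illustration. `make_stable_id` is a hypothetical stand-in for `construct_stable_id`, and `itertools.accumulate` is swapped in for `np.cumsum` so the sketch needs no third-party imports.

```python
from itertools import accumulate


def make_stable_id(doc_name, kind, start, end):
    # Hypothetical stand-in for construct_stable_id; the real id format may differ.
    return f"{doc_name}::{kind}:{start}:{end}"


def simple_parse(doc_name, contents, delim="\n"):
    """Yield one record per non-empty segment of `contents` (assumed loop shape)."""
    i = 0
    for text in contents.split(delim):
        # Skip segments that are empty or contain only whitespace.
        if not text.strip():
            continue
        words = text.split()
        # Start offset of each word in the single-space-joined text:
        # running sum of (word length + 1 for the space), minus the last entry.
        char_offsets = [0] + list(accumulate(len(w) + 1 for w in words))[:-1]
        text = " ".join(words)
        n = len(words)
        yield {
            "text": text,
            "words": words,
            # Placeholder annotations: this tokenizer performs no linguistic analysis.
            "pos_tags": [""] * n,
            "ner_tags": [""] * n,
            "lemmas": [""] * n,
            "dep_parents": [0] * n,
            "dep_labels": [""] * n,
            "char_offsets": char_offsets,
            # As in the change above, absolute offsets reuse the per-segment offsets.
            "abs_char_offsets": char_offsets,
            "stable_id": make_stable_id(doc_name, "phrase", i, i),
        }
        i += 1


if __name__ == "__main__":
    for record in simple_parse("doc", "Hello  big world\n\n  \nfoo"):
        print(record["words"], record["char_offsets"], record["stable_id"])
```

Because every list-valued field has one entry per word, a consumer can zip the fields together without checking which tokenizer produced the record. That uniformity seems to be the point of the added keys.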

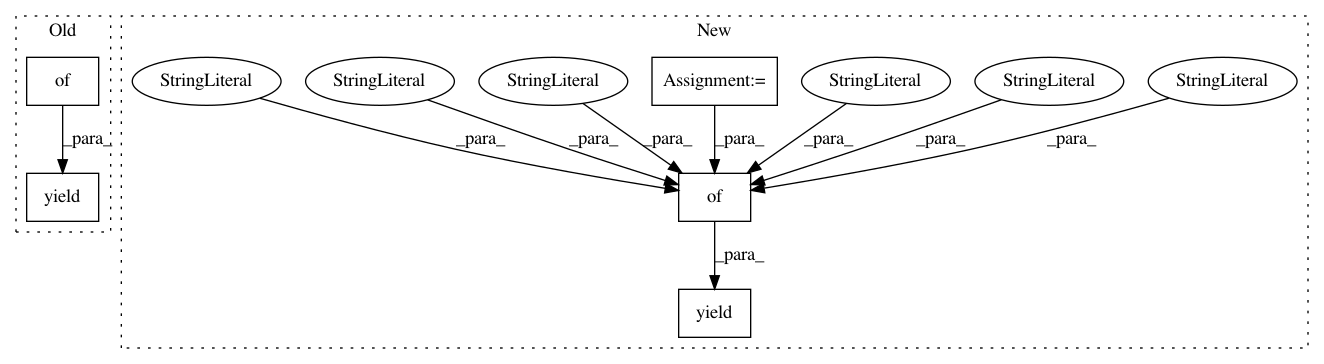

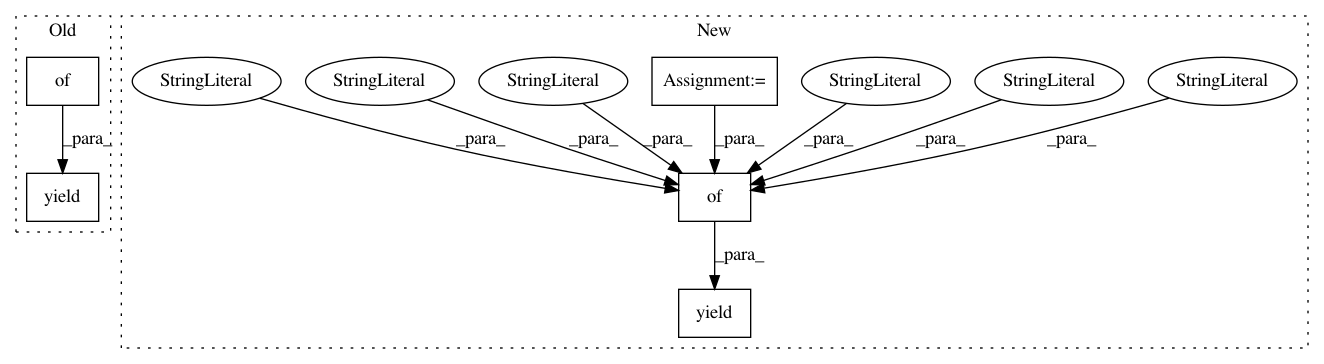

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: HazyResearch/fonduer

Commit Name: ba2af6efeb086391d286ee5d86635f723e806c15

Time: 2018-05-17

Author: senwu@cs.stanford.edu

File Name: fonduer/parser/parser.py

Class Name: SimpleTokenizer

Method Name: parse

Project Name: tensorflow/datasets

Commit Name: 52ba3c53fdeb5806b3626b873eefabf8c065d9d4

Time: 2019-05-21

Author: adarob@google.com

File Name: tensorflow_datasets/text/squad.py

Class Name: Squad

Method Name: _generate_examples

Project Name: tensorflow/datasets

Commit Name: 3be1154314111df8cd327a321fe3d68e7b661c68

Time: 2020-05-29

Author: charlespatel07@gmail.com

File Name: tensorflow_datasets/text/pg19.py

Class Name: Pg19

Method Name: _generate_examples