c21a6e29933f91b83ab417b182c328958dad0bbf,fairseq/models/fconv.py,FConvEncoder,max_positions,#FConvEncoder#,107

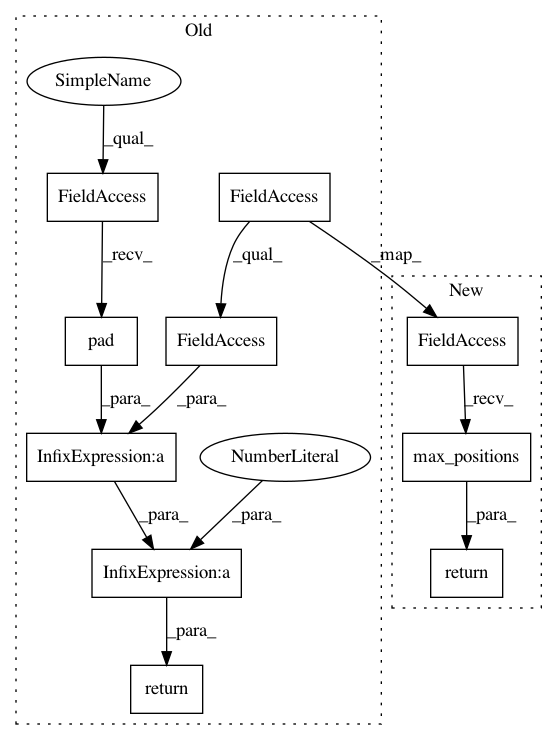

Before Change

```python
def max_positions(self):
    """Maximum input length supported by the encoder."""
    return self.embed_positions.num_embeddings - self.dictionary.pad() - 1


class AttentionLayer(nn.Module):
    def __init__(self, conv_channels, embed_dim, bmm=None):
        ...  # body not shown in this excerpt
```

After Change

```python
def max_positions(self):
    """Maximum input length supported by the encoder."""
    return self.embed_positions.max_positions()


class AttentionLayer(nn.Module):
    def __init__(self, conv_channels, embed_dim, bmm=None):
        ...  # body not shown in this excerpt
```

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 10

Instances

Project Name: elbayadm/attn2d

Commit Name: c21a6e29933f91b83ab417b182c328958dad0bbf

Time: 2018-01-22

Author: myleott@fb.com

File Name: fairseq/models/fconv.py

Class Name: FConvEncoder

Method Name: max_positions

Project Name: pytorch/fairseq

Commit Name: c21a6e29933f91b83ab417b182c328958dad0bbf

Time: 2018-01-22

Author: myleott@fb.com

File Name: fairseq/models/fconv.py

Class Name: FConvDecoder

Method Name: max_positions
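The change recorded in both instances moves the position-count arithmetic out of `FConvEncoder` and `FConvDecoder` and into the positional embedding object, which then answers `max_positions()` itself. The following is a minimal, framework-free sketch of that design, not fairseq's actual code: `PositionalEmbeddingSketch`, `EncoderSketch`, `DecoderSketch`, and `position_index` are hypothetical names. It assumes, as the removed expression `num_embeddings - pad() - 1` implies, that table rows `0..padding_idx` are reserved and real positions start at row `padding_idx + 1`.

```python
# Hypothetical sketch (not fairseq code): the positional embedding owns the
# "how many positions fit" arithmetic, and encoder and decoder delegate to it.


class PositionalEmbeddingSketch:
    """Stand-in for a learned positional embedding table (no torch needed).

    Assumption: rows 0..padding_idx are reserved, so real positions occupy
    rows padding_idx + 1 .. num_embeddings - 1.
    """

    def __init__(self, num_embeddings: int, padding_idx: int) -> None:
        self.num_embeddings = num_embeddings
        self.padding_idx = padding_idx

    def max_positions(self) -> int:
        """Count of usable rows: num_embeddings - padding_idx - 1."""
        return self.num_embeddings - self.padding_idx - 1

    def position_index(self, pos: int) -> int:
        """Map a 0-based token position to its row in the table."""
        if not 0 <= pos < self.max_positions():
            raise IndexError(f"position {pos} exceeds max_positions()")
        return self.padding_idx + 1 + pos


class EncoderSketch:
    def __init__(self, embed_positions: PositionalEmbeddingSketch) -> None:
        self.embed_positions = embed_positions

    def max_positions(self) -> int:
        """Maximum input length supported by the encoder."""
        return self.embed_positions.max_positions()


class DecoderSketch:
    def __init__(self, embed_positions: PositionalEmbeddingSketch) -> None:
        self.embed_positions = embed_positions

    def max_positions(self) -> int:
        """Maximum output length supported by the decoder."""
        return self.embed_positions.max_positions()


# Usage: a 1024-row table with an illustrative padding index of 1
# leaves 1024 - 1 - 1 = 1022 usable positions.
table = PositionalEmbeddingSketch(num_embeddings=1024, padding_idx=1)
print(EncoderSketch(table).max_positions())  # 1022
print(DecoderSketch(table).max_positions())  # 1022
print(table.position_index(0), table.position_index(1021))  # 2 1023
```

One plausible reading of the motivation: in the "before" version each model class repeated the formula and took the padding index from its dictionary rather than from the embedding table, so the same arithmetic lived in two places. With the formula on the embedding, there is a single definition that both `max_positions` methods reuse.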