d1ac7b831ad36cd0e4bdd7980819f83208345148,gpflow/expectations.py,,_expectation,#Any#Any#Any#Any#Any#,560

Before Change

if feat1 != feat2:

raise NotImplementedError("Different features are not supported")

if kern1 != kern2:

raise NotImplementedError("Calculating the expectation over two different Product kernels is not supported")

kern = kern1

feat = feat1

if not kern.on_separate_dimensions:

raise NotImplementedError("Product currently needs to be defined on separate dimensions.")  # pragma: no cover

with tf.control_dependencies([

tf.assert_equal(tf.rank(p.var), 2,

message="Product currently only supports diagonal Xcov.", name="assert_Xcov_diag"),

]):

_expectation_fn = lambda k: _expectation(p, k, feat, k, feat)

return functools.reduce(tf.multiply, [_expectation_fn(k) for k in kern.kern_list])

@dispatch(DiagonalGaussian, object, (InducingFeature, type(None)), object, (InducingFeature, type(None)))

After Change

:return: NxDxQ

with params_as_tensors_for(mean1), params_as_tensors_for(mean2):

N = tf.shape(p.mu)[0]

e_xxt = p.cov + (p.mu[:, :, None] * p.mu[:, None, :])  # NxDxD

e_xxt_A = tf.matmul(e_xxt, tf.tile(mean2.A[None, ...], (N, 1, 1)))  # NxDxQ

e_x_bt = p.mu[:, :, None] * mean2.b[None, None, :]  # NxDxQ

return e_xxt_A + e_x_bt
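
The shape comments in the After Change excerpt can be checked with a small NumPy stand-in. This is a minimal sketch, not the GPflow implementation: the function name `expectation_x_linear_mean` and the plain-array arguments `mu`, `cov`, `A`, `b` are hypothetical substitutes for `p.mu`, `p.cov`, `mean2.A` and `mean2.b`, and it assumes the excerpt computes E[x (Ax + b)^T] = E[x x^T] A + E[x] b^T for each of the N Gaussians.

```python
import numpy as np


def expectation_x_linear_mean(mu, cov, A, b):
    """NumPy sketch of the After Change computation (names are hypothetical).

    mu:  (N, D) Gaussian means
    cov: (N, D, D) Gaussian covariances
    A:   (D, Q) linear weights
    b:   (Q,) offset
    Returns an (N, D, Q) array.
    """
    # E[x x^T] = cov + mu mu^T, one DxD matrix per data point.
    e_xxt = cov + mu[:, :, None] * mu[:, None, :]  # NxDxD
    # NumPy broadcasts A over the leading axis, so no explicit tile is needed.
    e_xxt_A = e_xxt @ A  # NxDxQ
    # E[x] b^T, again one DxQ matrix per data point.
    e_x_bt = mu[:, :, None] * b[None, None, :]  # NxDxQ
    return e_xxt_A + e_x_bt


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, D, Q = 4, 3, 2
    mu = rng.normal(size=(N, D))
    cov = np.zeros((N, D, D))  # zero covariance: x equals mu exactly
    A = rng.normal(size=(D, Q))
    b = rng.normal(size=Q)
    out = expectation_x_linear_mean(mu, cov, A, b)
    print(out.shape)
```

With zero covariance the result should reduce to the outer product of `mu` with `mu @ A + b`, which gives a quick sanity check on the broadcasting.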

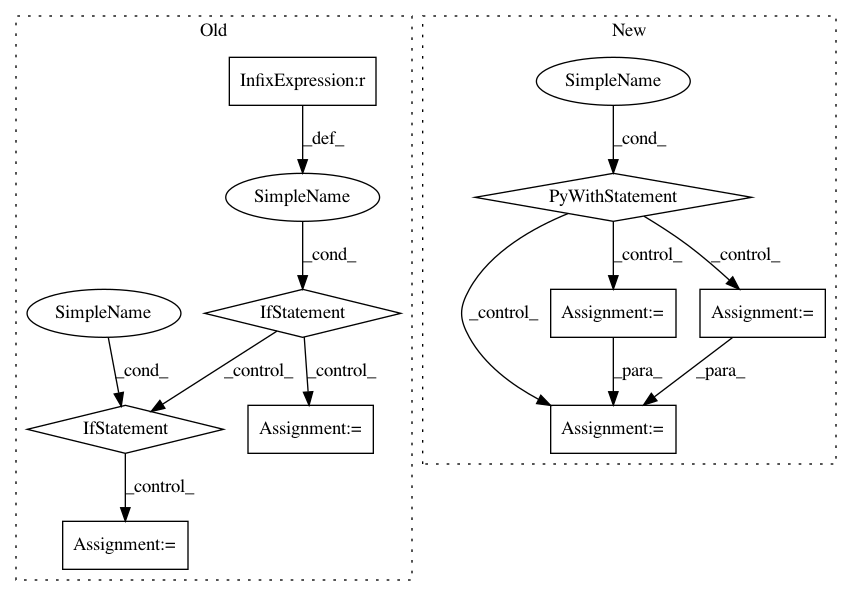

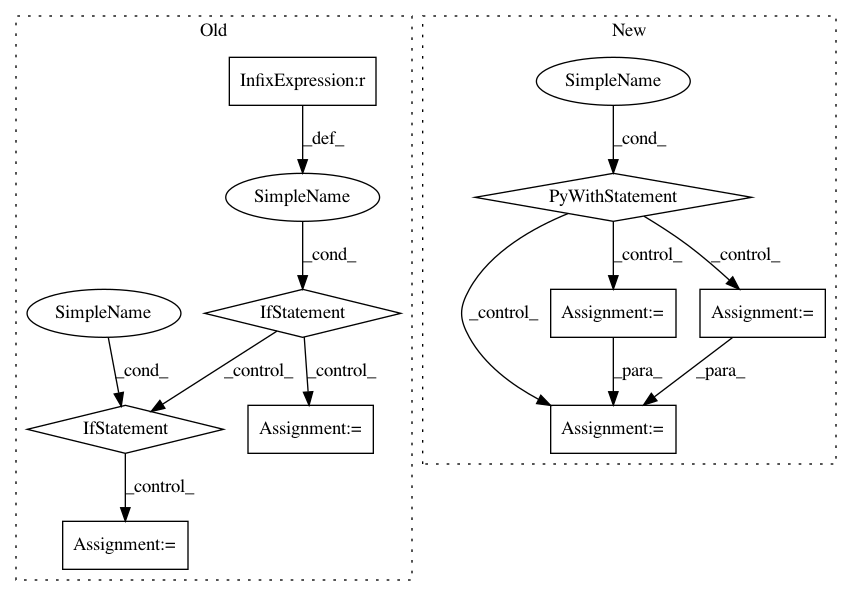

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: GPflow/GPflow

Commit Name: d1ac7b831ad36cd0e4bdd7980819f83208345148

Time: 2018-02-07

Author: alex.ialongo@gmail.com

File Name: gpflow/expectations.py

Class Name:

Method Name: _expectation

Project Name: glm-tools/pyglmnet

Commit Name: bae902f30638942d08819ec9cd4acdc5f6e33682

Time: 2019-06-03

Author: scotto@sharpleaf.org

File Name: pyglmnet/datasets.py

Class Name:

Method Name: fetch_group_lasso_datasets

Project Name: analysiscenter/batchflow

Commit Name: 11c6bf1dbd051d087519ff771b39dac600c0d96d

Time: 2019-07-29

Author: 7520522+a-arefina@users.noreply.github.com

File Name: batchflow/models/tf/encoder_decoder.py

Class Name: EncoderDecoder

Method Name: head