29b8a4deb58ca9798b61690a31de1ea57de92122,fairseq/trainer.py,Trainer,_check_grad_norms,#Trainer#Any#,840

Before Change

self._grad_norm_buf.zero_()
self._grad_norm_buf[self.data_parallel_rank] = grad_norm
distributed_utils.all_reduce(self._grad_norm_buf, group=self.data_parallel_process_group)
if not (self._grad_norm_buf == self._grad_norm_buf[0]).all():
    raise RuntimeError(
        "Fatal error: gradients are inconsistent between workers. "
        "Try --ddp-backend=no_c10d."
    )

def _reduce_and_log_stats(self, logging_outputs, sample_size, grad_norm=None):
    if grad_norm is not None:
        metrics.log_speed("ups", 1., priority=100, round=2)
        metrics.log_scalar("gnorm", grad_norm, priority=400, round=3)
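The Before Change check works because each rank zeroes a buffer with one slot per worker, writes its own gradient norm into its slot, and then all-reduces the buffer. With a SUM reduction (the default op of `torch.distributed.all_reduce`), every rank ends up holding the full vector of per-rank norms and can compare them against rank 0's. The sketch below simulates this in pure Python, replacing the real collective with a local element-wise sum over simulated workers; the helper names `simulate_all_reduce` and `check_grad_norms` are hypothetical and not part of fairseq.

```python
from typing import List


def simulate_all_reduce(buffers: List[List[float]]) -> List[float]:
    """Element-wise sum across all ranks' buffers.

    Hypothetical stand-in for a SUM all-reduce: in a real process group,
    every rank would receive this same summed vector.
    """
    return [sum(values) for values in zip(*buffers)]


def check_grad_norms(local_norms: List[float]) -> List[float]:
    """Mimic the Before Change logic for a group of simulated workers.

    local_norms[r] is the gradient norm computed on rank r.
    Returns the reduced buffer, or raises RuntimeError if the norms differ.
    """
    world_size = len(local_norms)
    buffers = []
    for rank, grad_norm in enumerate(local_norms):
        buf = [0.0] * world_size  # like self._grad_norm_buf.zero_()
        buf[rank] = grad_norm     # like self._grad_norm_buf[rank] = grad_norm
        buffers.append(buf)
    reduced = simulate_all_reduce(buffers)  # like distributed_utils.all_reduce(...)
    # Exact equality: every entry must match rank 0's norm bit for bit.
    if not all(n == reduced[0] for n in reduced):
        raise RuntimeError(
            "Fatal error: gradients are inconsistent between workers. "
            "Try --ddp-backend=no_c10d."
        )
    return reduced


print(check_grad_norms([1.5, 1.5, 1.5]))
try:
    check_grad_norms([1.5, 1.5, 1.5000001])
except RuntimeError as err:
    print("caught:", err)
```

Because the comparison is exact, even a last-bit floating-point difference on one worker is enough to raise the error.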

After Change

    group=self.data_parallel_process_group
)
if not self._is_grad_norms_consistent(self._grad_norm_buf):
    pretty_detail = "\n".join(
        "rank {:3d} = {:.8f}".format(r, n)
        for r, n in enumerate(self._grad_norm_buf.tolist())
    )
    error_detail = "grad_norm across the workers:\n{}\n".format(pretty_detail)
    raise RuntimeError(
        "Fatal error: gradients are inconsistent between workers. "
        "Try --ddp-backend=no_c10d. "
        "Or are you mixing up different generation of GPUs in training?"
        + "\n"
        + "-" * 80
        + "\n{}\n".format(error_detail)
        + "-" * 80
    )

def _reduce_and_log_stats(self, logging_outputs, sample_size, grad_norm=None):
    if grad_norm is not None:
        metrics.log_speed("ups", 1., priority=100, round=2)
        metrics.log_scalar("gnorm", grad_norm, priority=400, round=3)
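The After Change version delegates the comparison to `self._is_grad_norms_consistent`, whose body is not shown in this excerpt, and appends a per-rank dump of the gathered norms to the error message. The sketch below reproduces the message formatting using the same format strings as the diff. The consistency test is an assumption: the hypothetical `is_grad_norms_consistent` swaps the exact `==` comparison for a relative-tolerance check via `math.isclose` (the `rel_tol` default is arbitrary). This is one plausible way to tolerate tiny floating-point differences between GPU generations, not necessarily what fairseq implements.

```python
import math
from typing import List


def is_grad_norms_consistent(norms: List[float], rel_tol: float = 1e-6) -> bool:
    """Hypothetical stand-in for Trainer._is_grad_norms_consistent.

    Assumption: norms count as consistent if each one is within a relative
    tolerance of rank 0's norm, instead of requiring exact equality.
    """
    return all(math.isclose(n, norms[0], rel_tol=rel_tol) for n in norms)


def format_grad_norm_error(norms: List[float]) -> str:
    """Build the error message with the same format strings as the After Change code."""
    pretty_detail = "\n".join(
        "rank {:3d} = {:.8f}".format(r, n) for r, n in enumerate(norms)
    )
    error_detail = "grad_norm across the workers:\n{}\n".format(pretty_detail)
    return (
        "Fatal error: gradients are inconsistent between workers. "
        "Try --ddp-backend=no_c10d. "
        "Or are you mixing up different generation of GPUs in training?"
        + "\n"
        + "-" * 80
        + "\n{}\n".format(error_detail)
        + "-" * 80
    )


norms = [0.73125, 0.73125, 0.7319]
if not is_grad_norms_consistent(norms):
    print(format_grad_norm_error(norms))
```

The per-rank listing is what makes the new message actionable: a single outlier rank points to one misbehaving device, while a split into groups of identical values is consistent with the mixed-GPU-generation case the new hint mentions.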

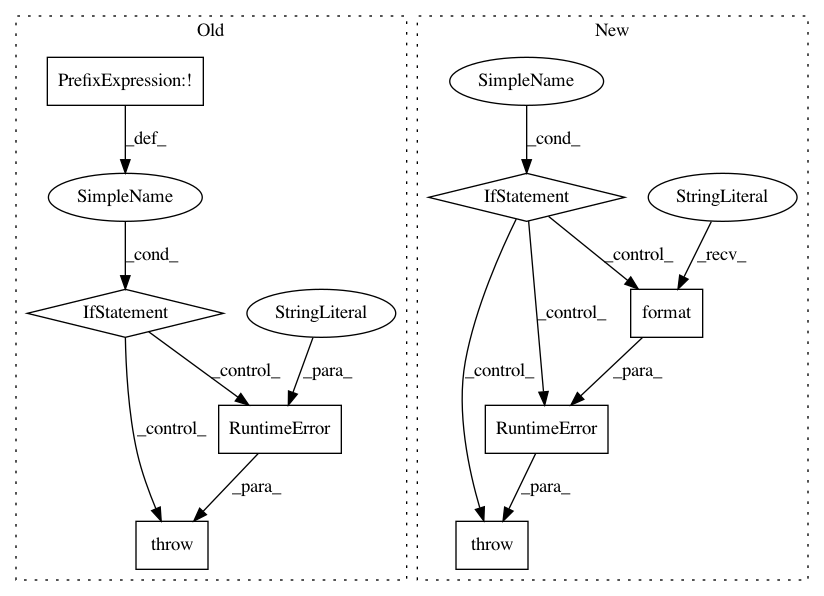

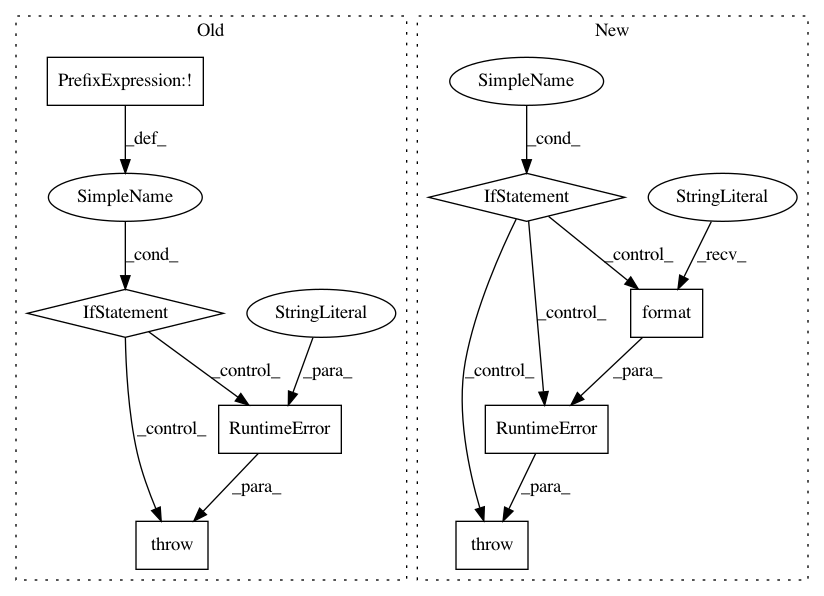

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances

Project Name: pytorch/fairseq

Commit Name: 29b8a4deb58ca9798b61690a31de1ea57de92122

Time: 2020-05-29

Author: yqw@fb.com

File Name: fairseq/trainer.py

Class Name: Trainer

Method Name: _check_grad_norms

Project Name: vatlab/SoS

Commit Name: 6ca46d807b12bb34e46cf83b83afa4abc45d797c

Time: 2016-12-11

Author: ben.bog@gmail.com

File Name: sos/sos_step.py

Class Name: Base_Step_Executor

Method Name: _run

Project Name: ray-project/ray

Commit Name: 8204717eed71923f1e14fa6bd17ca4588c140c09

Time: 2020-07-16

Author: sven@anyscale.io

File Name: rllib/evaluation/rollout_worker.py

Class Name: RolloutWorker

Method Name: __init__