16d2eb3061f1bed8ade390c5e2a2c1de9daa3509,theanolm/optimizers/basicoptimizer.py,BasicOptimizer,__init__,#BasicOptimizer#Any#Any#Any#,16

Before Change

total_sum = sum(sums)  # sum over parameter variables
norm = tensor.sqrt(total_sum)
target_norm = tensor.clip(norm, 0.0, max_norm)
gradients = [gradient * target_norm / (epsilon + norm)
             for gradient in gradients]
self._gradient_exprs = gradients
self.gradient_update_function = \
    theano.function([self.network.minibatch_input,
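The Before Change excerpt rescales all gradients by a common factor so that their joint L2 norm does not exceed `max_norm`. The sketch below reproduces that arithmetic in plain Python so the effect can be checked numerically. It assumes that `sums` (not shown in the excerpt) holds the sum of squared elements of each parameter's gradient. The helper name `clip_by_global_norm` and the list-of-floats representation are hypothetical stand-ins for the Theano tensors.

```python
import math
from typing import List


def clip_by_global_norm(gradients: List[List[float]], max_norm: float,
                        epsilon: float = 1e-6) -> List[List[float]]:
    """Hypothetical helper: rescale gradients so their joint L2 norm is at most max_norm."""
    # Assumed content of `sums`: squared elements summed per parameter variable.
    sums = [sum(x * x for x in g) for g in gradients]
    total_sum = sum(sums)  # sum over parameter variables
    norm = math.sqrt(total_sum)
    # Plain-Python equivalent of tensor.clip(norm, 0.0, max_norm).
    target_norm = min(max(norm, 0.0), max_norm)
    # epsilon keeps the division finite when every gradient is zero.
    scale = target_norm / (epsilon + norm)
    return [[x * scale for x in g] for g in gradients]
```

With gradients `[[3.0, 0.0], [4.0]]` the joint norm is 5, so `max_norm=1.0` shrinks every element by roughly a factor of 5, while `max_norm=10.0` leaves the values essentially unchanged (only the tiny `epsilon` term differs).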

After Change

for name, value in self.param_init_values.items()}

# numerical stability / smoothing term to prevent divide-by-zero
if not "epsilon" in optimization_options:
    raise ValueError("Epsilon is not given in optimization options.")
self._epsilon = optimization_options["epsilon"]

# maximum norm for parameter updates
if "max_gradient_norm" in optimization_options:
    self._max_gradient_norm = optimization_options["max_gradient_norm"]
else:
    self._max_gradient_norm = None

# Derive the symbolic expression for log probability of each word.
logprobs = tensor.log(self.network.prediction_probs)

# Set the log probability to 0, if the next input word (the one
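In the After Change excerpt, `epsilon` becomes a required entry of `optimization_options` and `max_gradient_norm` an optional one that defaults to `None`. A minimal standalone sketch of that option handling follows, assuming `optimization_options` is an ordinary mapping. The function name `read_gradient_options` is hypothetical, and reading `None` as "no clipping" is an assumption, since the excerpt ends before the value is used.

```python
from typing import Any, Mapping, Optional, Tuple


def read_gradient_options(
        optimization_options: Mapping[str, Any]) -> Tuple[float, Optional[float]]:
    """Hypothetical helper mirroring the option checks in the After Change excerpt."""
    # epsilon is mandatory: it is the smoothing term that prevents divide-by-zero.
    if "epsilon" not in optimization_options:
        raise ValueError("Epsilon is not given in optimization options.")
    epsilon = optimization_options["epsilon"]
    # max_gradient_norm is optional; it is None when the option is absent
    # (assumed here to mean that gradients are not clipped).
    max_gradient_norm = optimization_options.get("max_gradient_norm")
    return epsilon, max_gradient_norm
```

`Mapping.get` returns `None` for a missing key, so it collapses the excerpt's `if`/`else` into one line with the same result.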

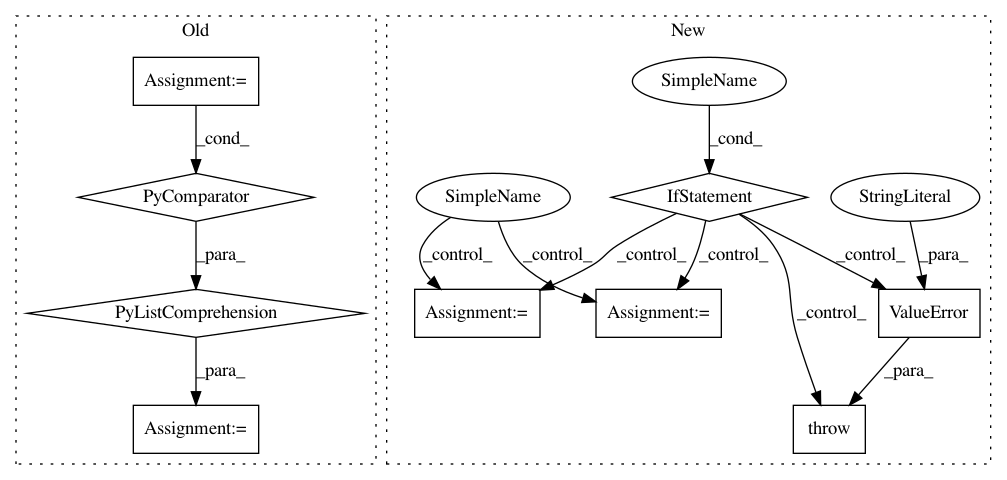

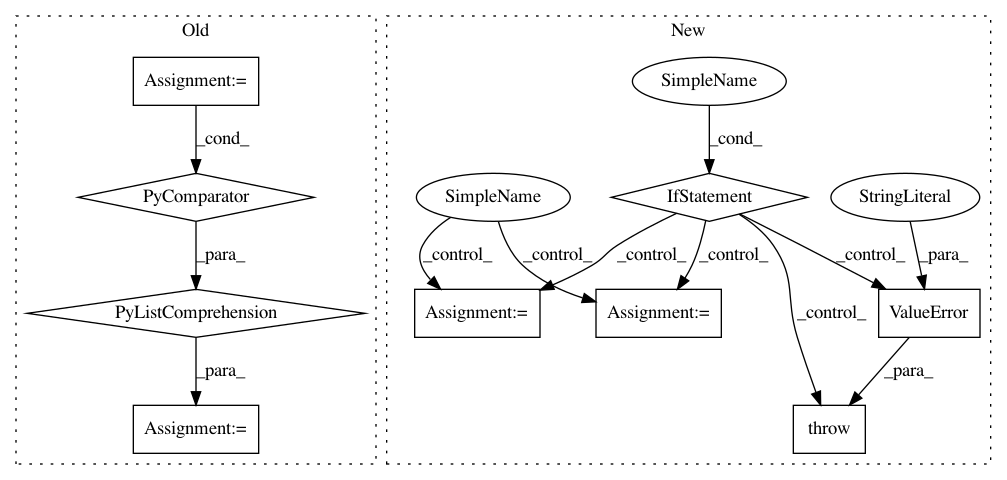

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: senarvi/theanolm

Commit Name: 16d2eb3061f1bed8ade390c5e2a2c1de9daa3509

Time: 2015-12-04

Author: seppo.git@marjaniemi.com

File Name: theanolm/optimizers/basicoptimizer.py

Class Name: BasicOptimizer

Method Name: __init__

Project Name: analysiscenter/batchflow

Commit Name: cba76d5cf38125d9dc221dbe0681a7710d5d06d3

Time: 2020-12-01

Author: rhudor@gmail.com

File Name: batchflow/batch.py

Class Name: Batch

Method Name: apply_parallel

Project Name: deepchem/deepchem

Commit Name: 528b37b6a893713994ef6335a02a642b8827cd74

Time: 2020-04-07

Author: bharath@Bharaths-MBP.zyxel.com

File Name: deepchem/models/keras_model.py

Class Name: KerasModel

Method Name: _predict