9f9c8aa7a0d0fff121e1f9f77f49a059a16825ee,examples/deep_fqi_atari/extractor.py,Extractor,_build,#Extractor#Any#,111

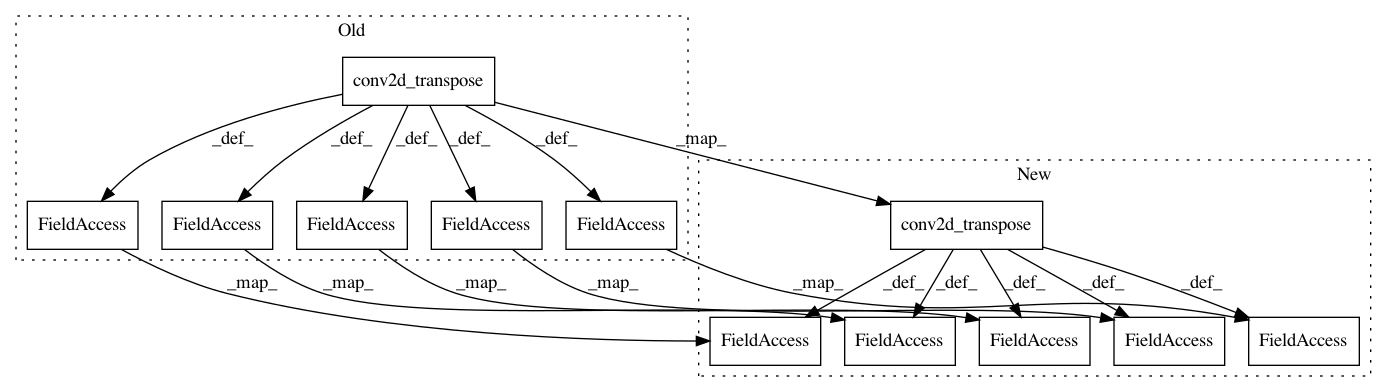

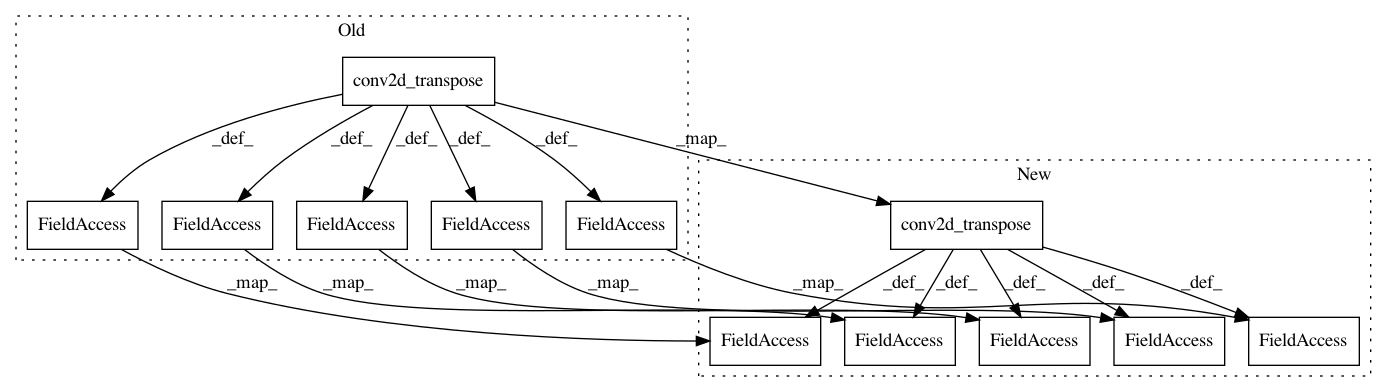

Before Change

```python
    name="hidden_6_flat")
hidden_6_conv = tf.reshape(hidden_6_flat, [-1, 5, 5, 64],
                           name="hidden_6_conv")
hidden_7 = tf.layers.conv2d_transpose(
    hidden_6_conv, 64, 3, 1, activation=tf.nn.relu,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="hidden_7"
)
hidden_8 = tf.layers.conv2d_transpose(
    hidden_7, 64, 3, 1, activation=tf.nn.relu,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="hidden_8"
)
hidden_9 = tf.layers.conv2d_transpose(
    hidden_8, 32, 4, 2, activation=tf.nn.relu,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="hidden_9"
)
if self._predict_next_frame:
    output_kernels = 1
else:
    output_kernels = 4
self._predicted_frame = tf.layers.conv2d_transpose(
    hidden_9, output_kernels, 8, 4, activation=tf.nn.sigmoid,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="predicted_frame_conv"
)
if self._predict_next_frame:
    self._predicted_frame = tf.reshape(
        self._predicted_frame,
        shape=[-1, convnet_pars["height"], convnet_pars["width"]],
        name="predicted_frame"
    )

if self._predict_next_frame:
    self._target_frame = tf.placeholder(
        "float32",
        shape=[None,
               convnet_pars["height"],
               convnet_pars["width"]],
        name="target_frame")
else:
    self._target_frame = tf.placeholder(
        "float32",
        shape=[None,
               convnet_pars["height"],
               convnet_pars["width"],
               convnet_pars["history_length"]],
        name="target_frame")

if self._predict_reward or self._predict_absorbing:
    hidden_9 = tf.layers.dense(
        self._features,
        128,
        tf.nn.relu,
        kernel_initializer=tf.glorot_uniform_initializer(),
        name="hidden_9"
    )
    hidden_10 = tf.layers.dense(
        hidden_9,
        64,
        tf.nn.relu,
        kernel_initializer=tf.glorot_uniform_initializer(),
        name="hidden_10"
    )
    if self._predict_reward:
        self._target_reward = tf.placeholder(tf.int32,
                                             shape=[None, 1],
                                             name="target_reward")
        self._target_reward_class = tf.clip_by_value(
            self._target_reward, -1, 1,
            name="target_reward_clipping") + 1
        self._predicted_reward = tf.layers.dense(
            hidden_10, 3, tf.nn.sigmoid, name="predicted_reward")
        predicted_reward = tf.clip_by_value(
            self._predicted_reward,
            1e-7,
            1 - 1e-7,
            name="predicted_reward_clipping"
        )
        predicted_reward_logits = tf.log(
            predicted_reward / (1 - predicted_reward),
            name="predicted_reward_logits"
        )
        self._xent_reward = tf.reduce_mean(
            tf.nn.sparse_softmax_cross_entropy_with_logits(
                labels=tf.squeeze(self._target_reward_class),
                logits=predicted_reward_logits,
                name="sparse_softmax_cross_entropy_reward"
            ),
            name="xent_reward"
        )
    if self._predict_absorbing:
        self._target_absorbing = tf.placeholder(
            tf.float32, shape=[None, 1], name="target_absorbing")
        self._predicted_absorbing = tf.layers.dense(
            hidden_10, 1, tf.nn.sigmoid, name="predicted_absorbing")
        predicted_absorbing = tf.clip_by_value(
            self._predicted_absorbing,
            1e-7,
            1 - 1e-7,
            name="predicted_absorbing_clipping"
        )
        predicted_absorbing_logits = tf.log(
            predicted_absorbing / (1 - predicted_absorbing),
            name="predicted_absorbing_logits")
        self._xent_absorbing = tf.reduce_mean(
            tf.nn.sigmoid_cross_entropy_with_logits(
                labels=tf.squeeze(self._target_absorbing),
                logits=predicted_absorbing_logits,
                name="sigmoid_cross_entropy_absorbing"
            ),
            name="xent_absorbing"
        )

predicted_frame = tf.clip_by_value(self._predicted_frame,
                                   1e-7,
                                   1 - 1e-7,
                                   name="predicted_frame_clipping")
predicted_frame_logits = tf.log(
    predicted_frame / (1 - predicted_frame),
    name="predicted_frame_logits"
)
self._xent_frame = tf.reduce_mean(
    tf.nn.sigmoid_cross_entropy_with_logits(
        labels=self._target_frame,
        logits=predicted_frame_logits,
        name="sigmoid_cross_entropy_frame"
    ),
    name="xent_frame"
)

self._xent = self._xent_frame
if self._predict_reward:
    self._xent += self._xent_reward
if self._predict_absorbing:
    self._xent += self._xent_absorbing
if self._contractive:
    raise NotImplementedError
else:
    self._reg = tf.reduce_mean(tf.norm(self._features, 1, axis=1),
                               name="reg")
self._loss = tf.add(self._xent, self._reg_coeff * self._reg,
                    name="loss")

tf.summary.scalar("xent_frame", self._xent_frame)
if self._predict_reward:
    accuracy_reward = tf.equal(
        tf.squeeze(self._target_reward_class),
        tf.cast(tf.argmax(self._predicted_reward, 1), tf.int32)
    )
    self._accuracy_reward = tf.reduce_mean(
        tf.cast(accuracy_reward, tf.float32))
    tf.summary.scalar("accuracy_reward", self._accuracy_reward)
    tf.summary.scalar("xent_reward", self._xent_reward)
if self._predict_absorbing:
    accuracy_absorbing = tf.equal(
        tf.squeeze(tf.cast(self._target_absorbing, tf.int32)),
        tf.cast(tf.argmax(self._predicted_absorbing, 1), tf.int32)
    )
    self._accuracy_absorbing = tf.reduce_mean(
        tf.cast(accuracy_absorbing, tf.float32))
    tf.summary.scalar("accuracy_absorbing",
                      self._accuracy_absorbing)
    tf.summary.scalar("xent_absorbing", self._xent_absorbing)
tf.summary.scalar("xent", self._xent)
tf.summary.scalar("reg", self._reg)
tf.summary.scalar("loss", self._loss)
self._merged = tf.summary.merge(
    tf.get_collection(tf.GraphKeys.SUMMARIES,
                      scope=self._scope_name)
)

optimizer = convnet_pars["optimizer"]
if optimizer["name"] == "rmspropcentered":
    opt = tf.train.RMSPropOptimizer(learning_rate=optimizer["lr"],
                                    decay=optimizer["decay"],
                                    centered=True)
elif optimizer["name"] == "rmsprop":
    opt = tf.train.RMSPropOptimizer(learning_rate=optimizer["lr"],
                                    decay=optimizer["decay"])
elif optimizer["name"] == "adam":
    opt = tf.train.AdamOptimizer()
elif optimizer["name"] == "adadelta":
    opt = tf.train.AdadeltaOptimizer()
else:
    raise ValueError("Unavailable optimizer selected.")
self._train_step = opt.minimize(loss=self._loss)

initializer = tf.variables_initializer(
    tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES,
```
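The decoder in the listing above (and, with renamed layers, in the After Change listing) starts from a `[-1, 5, 5, 64]` tensor and applies four `tf.layers.conv2d_transpose` layers with (kernel, stride) = (3, 1), (3, 1), (4, 2), (8, 4). No `padding` argument is passed, so the default `'valid'` padding applies, for which each layer's output side length is (n − 1)·s + k. A small sketch of that arithmetic follows; the helper name `conv_transpose_valid` is hypothetical and only reproduces the size formula, not the layer itself.

```python
def conv_transpose_valid(size: int, kernel: int, stride: int) -> int:
    """Output side length of a 'valid'-padded transposed convolution."""
    return (size - 1) * stride + kernel


# (kernel, stride) of each decoder layer, in the order used in the listings.
DECODER = [(3, 1), (3, 1), (4, 2), (8, 4)]

size = 5  # side of the reshaped [-1, 5, 5, 64] tensor
sizes = [size]
for kernel, stride in DECODER:
    size = conv_transpose_valid(size, kernel, stride)
    sizes.append(size)

print(sizes)  # [5, 7, 9, 20, 84]
```

The decoder therefore produces 84×84 maps. The frame loss compares `predicted_frame_logits` with `target_frame` element-wise, so this only works if `convnet_pars["height"]` and `convnet_pars["width"]` are 84 (and, in the multi-frame branch, `convnet_pars["history_length"]` equals `output_kernels = 4`); those values are not shown in the excerpt and are an assumption here.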

After Change

```python
    name="hidden_5_flat")
hidden_5_conv = tf.reshape(hidden_5_flat, [-1, 5, 5, 64],
                           name="hidden_5_conv")
hidden_5 = tf.layers.conv2d_transpose(
    hidden_5_conv, 64, 3, 1, activation=tf.nn.relu,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="hidden_5"
)
hidden_6 = tf.layers.conv2d_transpose(
    hidden_5, 64, 3, 1, activation=tf.nn.relu,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="hidden_6"
)
hidden_7 = tf.layers.conv2d_transpose(
    hidden_6, 32, 4, 2, activation=tf.nn.relu,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="hidden_7"
)
if self._predict_next_frame:
    output_kernels = 1
else:
    output_kernels = 4
self._predicted_frame = tf.layers.conv2d_transpose(
    hidden_7, output_kernels, 8, 4, activation=tf.nn.sigmoid,
    kernel_initializer=tf.glorot_uniform_initializer(),
    name="predicted_frame_conv"
)
if self._predict_next_frame:
    self._predicted_frame = tf.reshape(
        self._predicted_frame,
        shape=[-1, convnet_pars["height"], convnet_pars["width"]],
        name="predicted_frame"
    )

if self._predict_next_frame:
    self._target_frame = tf.placeholder(
        "float32",
        shape=[None,
               convnet_pars["height"],
               convnet_pars["width"]],
        name="target_frame")
else:
    self._target_frame = tf.placeholder(
        "float32",
        shape=[None,
               convnet_pars["height"],
               convnet_pars["width"],
               convnet_pars["history_length"]],
        name="target_frame")

if self._predict_reward or self._predict_absorbing:
    hidden_9 = tf.layers.dense(
        self._features,
        128,
        tf.nn.relu,
        kernel_initializer=tf.glorot_uniform_initializer(),
        name="hidden_9"
    )
    hidden_10 = tf.layers.dense(
        hidden_9,
        64,
        tf.nn.relu,
        kernel_initializer=tf.glorot_uniform_initializer(),
        name="hidden_10"
    )
    if self._predict_reward:
        self._target_reward = tf.placeholder(tf.int32,
                                             shape=[None, 1],
                                             name="target_reward")
        self._target_reward_class = tf.clip_by_value(
            self._target_reward, -1, 1,
            name="target_reward_clipping") + 1
        self._predicted_reward = tf.layers.dense(
            hidden_10, 3, tf.nn.sigmoid, name="predicted_reward")
        predicted_reward = tf.clip_by_value(
            self._predicted_reward,
            1e-7,
            1 - 1e-7,
            name="predicted_reward_clipping"
        )
        predicted_reward_logits = tf.log(
            predicted_reward / (1 - predicted_reward),
            name="predicted_reward_logits"
        )
        self._xent_reward = tf.reduce_mean(
            tf.nn.sparse_softmax_cross_entropy_with_logits(
                labels=tf.squeeze(self._target_reward_class),
                logits=predicted_reward_logits,
                name="sparse_softmax_cross_entropy_reward"
            ),
            name="xent_reward"
        )
    if self._predict_absorbing:
        self._target_absorbing = tf.placeholder(
            tf.float32, shape=[None, 1], name="target_absorbing")
        self._predicted_absorbing = tf.layers.dense(
            hidden_10, 1, tf.nn.sigmoid, name="predicted_absorbing")
        predicted_absorbing = tf.clip_by_value(
            self._predicted_absorbing,
            1e-7,
            1 - 1e-7,
            name="predicted_absorbing_clipping"
        )
        predicted_absorbing_logits = tf.log(
            predicted_absorbing / (1 - predicted_absorbing),
            name="predicted_absorbing_logits")
        self._xent_absorbing = tf.reduce_mean(
            tf.nn.sigmoid_cross_entropy_with_logits(
                labels=tf.squeeze(self._target_absorbing),
                logits=predicted_absorbing_logits,
                name="sigmoid_cross_entropy_absorbing"
            ),
            name="xent_absorbing"
        )

predicted_frame = tf.clip_by_value(self._predicted_frame,
                                   1e-7,
                                   1 - 1e-7,
                                   name="predicted_frame_clipping")
predicted_frame_logits = tf.log(
    predicted_frame / (1 - predicted_frame),
    name="predicted_frame_logits"
)
self._xent_frame = tf.reduce_mean(
    tf.nn.sigmoid_cross_entropy_with_logits(
        labels=self._target_frame,
        logits=predicted_frame_logits,
        name="sigmoid_cross_entropy_frame"
    ),
    name="xent_frame"
)

self._xent = self._xent_frame
if self._predict_reward:
    self._xent += self._xent_reward
if self._predict_absorbing:
    self._xent += self._xent_absorbing
if self._contractive:
    raise NotImplementedError
else:
    self._reg = tf.reduce_mean(tf.norm(self._features, 1, axis=1),
                               name="reg")
self._loss = tf.add(self._xent, self._reg_coeff * self._reg,
                    name="loss")

tf.summary.scalar("xent_frame", self._xent_frame)
if self._predict_reward:
    accuracy_reward = tf.equal(
        tf.squeeze(self._target_reward_class),
        tf.cast(tf.argmax(self._predicted_reward, 1), tf.int32)
    )
    self._accuracy_reward = tf.reduce_mean(
        tf.cast(accuracy_reward, tf.float32))
    tf.summary.scalar("accuracy_reward", self._accuracy_reward)
    tf.summary.scalar("xent_reward", self._xent_reward)
if self._predict_absorbing:
    accuracy_absorbing = tf.equal(
        tf.squeeze(tf.cast(self._target_absorbing, tf.int32)),
        tf.cast(tf.argmax(self._predicted_absorbing, 1), tf.int32)
    )
    self._accuracy_absorbing = tf.reduce_mean(
        tf.cast(accuracy_absorbing, tf.float32))
    tf.summary.scalar("accuracy_absorbing",
                      self._accuracy_absorbing)
    tf.summary.scalar("xent_absorbing", self._xent_absorbing)
tf.summary.scalar("xent", self._xent)
tf.summary.scalar("reg", self._reg)
tf.summary.scalar("loss", self._loss)
self._merged = tf.summary.merge(
    tf.get_collection(tf.GraphKeys.SUMMARIES,
                      scope=self._scope_name)
)

optimizer = convnet_pars["optimizer"]
if optimizer["name"] == "rmspropcentered":
    opt = tf.train.RMSPropOptimizer(learning_rate=optimizer["lr"],
                                    decay=optimizer["decay"],
                                    centered=True)
elif optimizer["name"] == "rmsprop":
    opt = tf.train.RMSPropOptimizer(learning_rate=optimizer["lr"],
                                    decay=optimizer["decay"])
elif optimizer["name"] == "adam":
    opt = tf.train.AdamOptimizer()
elif optimizer["name"] == "adadelta":
    opt = tf.train.AdadeltaOptimizer()
else:
    raise ValueError("Unavailable optimizer selected.")
self._train_step = opt.minimize(loss=self._loss)

initializer = tf.variables_initializer(
    tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES,
```
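The visible effect of this change is a renaming of the decoder layers: in the Before Change listing `name="hidden_9"` is passed both to the third `conv2d_transpose` layer and to the first `dense` layer of the reward/absorbing head, while in the After Change listing the decoder uses `hidden_5`, `hidden_6` and `hidden_7`, so `hidden_9` is left to the dense layer alone. In TensorFlow 1.x layer functions an explicit `name` generally determines the variable scope of the layer's weights, so such a duplicate is likely to clash when both branches are built (the exact behaviour may depend on the TF version). The sketch below is a hypothetical helper, `find_duplicate_names`, that scans source text for repeated `name="..."` arguments; it is not part of mushroom or TensorFlow, and the two inputs are abbreviated excerpts of the listings.

```python
import re
from collections import Counter
from typing import List

# Matches keyword arguments name="..." but not e.g. scope_name="...".
NAME_ARG = re.compile(r'\bname="([^"]+)"')


def find_duplicate_names(source: str) -> List[str]:
    """Return the names passed via name="..." more than once, sorted."""
    counts = Counter(NAME_ARG.findall(source))
    return sorted(name for name, n in counts.items() if n > 1)


# Abbreviated "Before Change" lines: decoder layer and dense layer share a name.
before = '''
hidden_9 = tf.layers.conv2d_transpose(hidden_8, 32, 4, 2, name="hidden_9")
hidden_9 = tf.layers.dense(self._features, 128, name="hidden_9")
hidden_10 = tf.layers.dense(hidden_9, 64, name="hidden_10")
'''

# Abbreviated "After Change" lines: the decoder layer was renamed.
after = '''
hidden_7 = tf.layers.conv2d_transpose(hidden_6, 32, 4, 2, name="hidden_7")
hidden_9 = tf.layers.dense(self._features, 128, name="hidden_9")
hidden_10 = tf.layers.dense(hidden_9, 64, name="hidden_10")
'''

print(find_duplicate_names(before))  # ['hidden_9']
print(find_duplicate_names(after))   # []
```

A plain text scan cannot tell mutually exclusive branches apart: run over the full listings it would also report `target_frame`, which appears in both arms of an `if`/`else` and is therefore only created once per build.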

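Every output head in both listings applies a sigmoid, clips the result to [1e-7, 1 − 1e-7], and recovers logits with log(p / (1 − p)) before calling a `*_with_logits` loss. Because log(p / (1 − p)) is the inverse of the sigmoid, this round trip returns the pre-activation (clamped to roughly ±16.1 by the clipping), and the clipping is what keeps p = 1 from dividing by zero. The pure-Python sketch below, an illustration rather than TensorFlow code, implements the numerically stable formula documented for `tf.nn.sigmoid_cross_entropy_with_logits`, max(x, 0) − x·z + log(1 + exp(−|x|)), and compares it with the direct binary cross-entropy −[z log p + (1 − z) log(1 − p)].

```python
import math

EPS = 1e-7  # same clipping bound as in the listings


def clip(p: float) -> float:
    """Clip a probability to [EPS, 1 - EPS]."""
    return min(max(p, EPS), 1 - EPS)


def logit(p: float) -> float:
    """Inverse of the sigmoid: log(p / (1 - p))."""
    return math.log(p / (1 - p))


def sigmoid_xent_with_logits(x: float, z: float) -> float:
    """Stable sigmoid cross-entropy for logit x and label z."""
    return max(x, 0.0) - x * z + math.log1p(math.exp(-abs(x)))


def binary_xent(p: float, z: float) -> float:
    """Direct binary cross-entropy for probability p and label z."""
    return -(z * math.log(p) + (1 - z) * math.log(1 - p))


# (sigmoid output, label) pairs; p = 1.0 would break logit() without clip().
cases = [(0.9, 1.0), (0.2, 1.0), (0.7, 0.0), (1.0, 0.0)]
for p, z in cases:
    q = clip(p)
    stable = sigmoid_xent_with_logits(logit(q), z)
    direct = binary_xent(q, z)
    print(f"p={p}, z={z}: stable={stable:.6f}, direct={direct:.6f}")
```

Both columns agree for each case, which suggests the sigmoid-then-logit detour in the listings is equivalent (up to clipping) to applying the `*_with_logits` loss to the dense layer's pre-activation directly.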
In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 12

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| AIRLab-POLIMI/mushroom | 9f9c8aa7a0d0fff121e1f9f77f49a059a16825ee | 2017-09-26 | carloderamo@gmail.com | examples/deep_fqi_atari/extractor.py | Extractor | _build |
| AIRLab-POLIMI/mushroom | f53509b67c9ca37f40331a51e4f39228186383d8 | 2017-09-16 | carlo.deramo@gmail.com | examples/deep_fqi_atari/extractor.py | Extractor | _build |
| AIRLab-POLIMI/mushroom | f75eaafe8077c6443276503206600faa24218526 | 2017-09-16 | carlo.deramo@gmail.com | examples/deep_fqi_atari/extractor.py | Extractor | _build |
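In both listings of `Extractor._build`, the integer reward target is clipped to [−1, 1] and shifted by 1, turning negative, zero and positive rewards into class indices 0, 1 and 2, which are then scored with `tf.nn.sparse_softmax_cross_entropy_with_logits`. The sketch below mirrors those two steps in plain Python; `reward_class` and `sparse_softmax_xent` are hypothetical helper names, and the loss is the standard −log softmax(logits)[label] computed with the usual max-shift for stability.

```python
import math
from typing import List


def reward_class(reward: int) -> int:
    """Clip the reward to [-1, 1] and shift by 1: -1 -> 0, 0 -> 1, 1 -> 2."""
    return min(max(reward, -1), 1) + 1


def sparse_softmax_xent(logits: List[float], label: int) -> float:
    """-log(softmax(logits)[label]), using the max-shift trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[label]


rewards = [-5, -1, 0, 1, 3]
print([reward_class(r) for r in rewards])  # [0, 0, 1, 2, 2]

# With equal logits every class has probability 1/3, so the loss is log(3).
print(sparse_softmax_xent([0.0, 0.0, 0.0], reward_class(0)))
```

Note that in the listings the three reward outputs come from independent sigmoids; after the log(p / (1 − p)) conversion, the softmax is effectively taken over the dense layer's pre-sigmoid activations.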