cc80175c2704c0dbbfe908f6b678c84ef1741a56,onmt/Models.py,Encoder,forward,#Encoder#Any#Any#,28

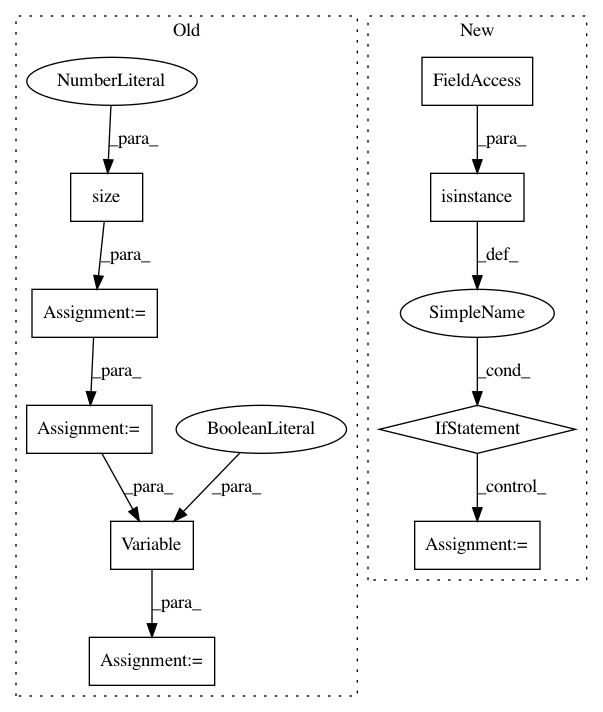

Before Change

emb = self.word_lut(input)

if hidden is None:

    batch_size = emb.size(1)

    h_size = (self.layers * self.num_directions, batch_size, self.hidden_size)

    h_0 = Variable(emb.data.new(*h_size).zero_(), requires_grad=False)

    c_0 = Variable(emb.data.new(*h_size).zero_(), requires_grad=False)

    hidden = (h_0, c_0)

outputs, hidden_t = self.rnn(emb, hidden)

return hidden_t, outputs

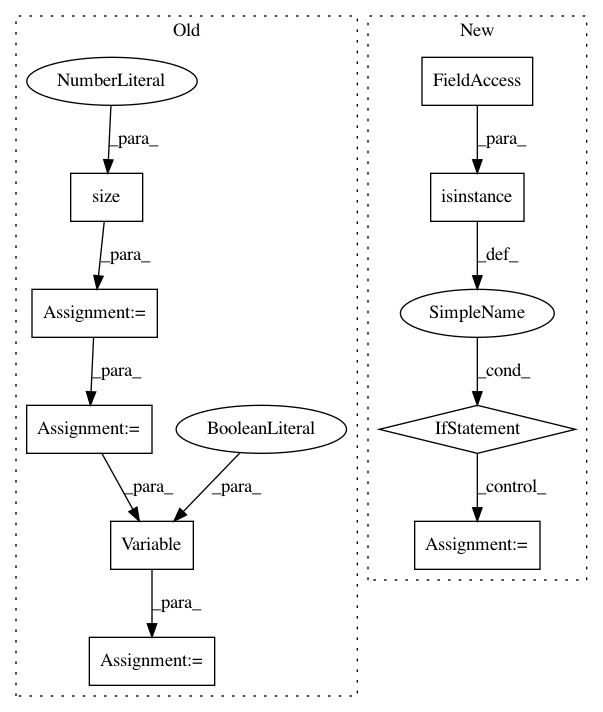

After Change

else:

    emb = self.word_lut(input)

outputs, hidden_t = self.rnn(emb, hidden)

if isinstance(input, tuple):

    outputs = unpack(outputs)[0]

return hidden_t, outputs

class StackedLSTM(nn.Module):
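The change above drops the hand-built zero state and, judging by the `unpack` call, lets `forward` accept a packed batch. The sketch below illustrates both points under stated assumptions: `TinyEncoder`, its sizes, and the `(token_ids, lengths)` tuple layout are hypothetical, and the `if` branch that precedes the `else:` is not shown in the snippet, so the packing step here is a guess at it rather than the commit's code. `pack` and `unpack` are assumed to alias `pack_padded_sequence` and `pad_packed_sequence` from `torch.nn.utils.rnn`.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence as pack
from torch.nn.utils.rnn import pad_packed_sequence as unpack


class TinyEncoder(nn.Module):
    """Hypothetical stand-in for the Encoder in the snippet above."""

    def __init__(self, vocab_size=20, emb_size=8, hidden_size=16, layers=2):
        super().__init__()
        self.word_lut = nn.Embedding(vocab_size, emb_size, padding_idx=0)
        self.rnn = nn.LSTM(emb_size, hidden_size, num_layers=layers)

    def forward(self, input, hidden=None):
        # Assumption: a tuple input is (token_ids, lengths), with lengths
        # sorted in decreasing order as pack_padded_sequence expects by default.
        if isinstance(input, tuple):
            emb = pack(self.word_lut(input[0]), input[1])
        else:
            emb = self.word_lut(input)
        # hidden=None lets nn.LSTM fall back to zero initial states.
        outputs, hidden_t = self.rnn(emb, hidden)
        if isinstance(input, tuple):
            # pad_packed_sequence returns (padded_outputs, lengths).
            outputs = unpack(outputs)[0]
        return hidden_t, outputs


torch.manual_seed(0)
enc = TinyEncoder()

# Shape (seq_len=3, batch=2); the second sequence is padded with index 0.
tokens = torch.tensor([[1, 2], [3, 4], [5, 0]])
lengths = [3, 2]

# Omitting `hidden` versus passing explicit zeros, as the old code did.
h_size = (2, 2, 16)  # (layers * num_directions, batch_size, hidden_size)
zeros = (torch.zeros(h_size), torch.zeros(h_size))
_, out_default = enc(tokens)
_, out_zeros = enc(tokens, zeros)
same_as_zero_init = torch.allclose(out_default, out_zeros)

# Packed path: outputs come back padded to the longest sequence.
_, out_packed = enc((tokens, lengths))
```

Passing `hidden=None` leaves zero initialization to `nn.LSTM`, which is why the explicit `h_0`/`c_0` construction can be removed without changing the outputs. On the packed path, positions beyond a sequence's length come back as zeros after unpacking.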

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: cc80175c2704c0dbbfe908f6b678c84ef1741a56

Time: 2017-03-22

Author: bryan.mccann.is@gmail.com

File Name: onmt/Models.py

Class Name: Encoder

Method Name: forward

Project Name: pytorch/fairseq

Commit Name: 6e4b7e22eeb79f7e1c39d862f10ec3e61e51c979

Time: 2017-11-08

Author: myleott@fb.com

File Name: fairseq/sequence_generator.py

Class Name: SequenceGenerator

Method Name: _decode