2573c649518391ada6214cfc72d20421dfac4072,src/preprocess.py,,get_embeddings,#Any#Any#Any#,194

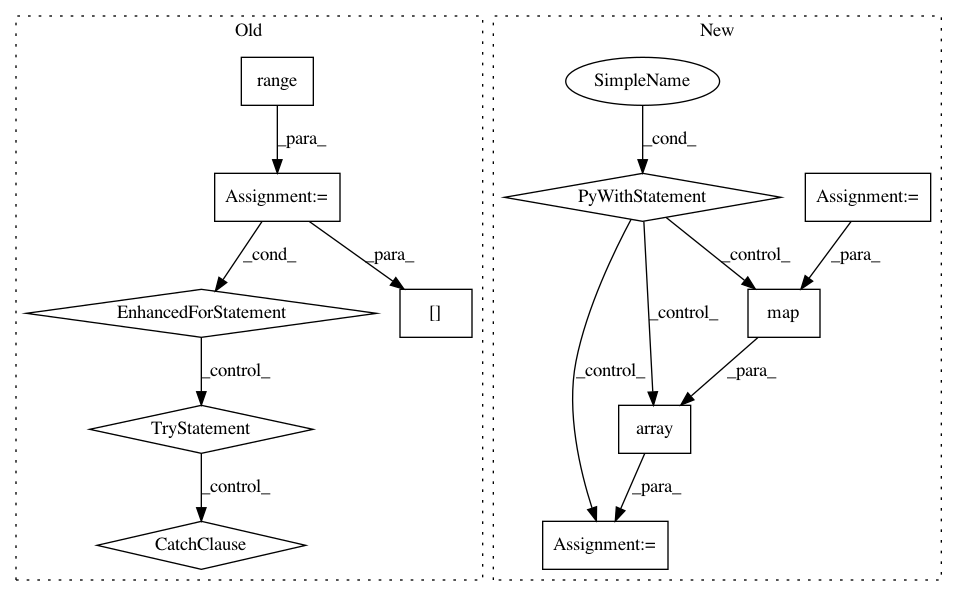

Before Change

    """Get embeddings for the words in vocab"""
    word_v_size = vocab.get_vocab_size("tokens")
    embeddings = np.zeros((word_v_size, d_word))
    for idx in range(word_v_size):  # kind of hacky
        word = vocab.get_token_from_index(idx)
        if word == "@@PADDING@@" or word == "@@UNKNOWN@@":
            continue
        try:
            assert word in word2vec
        except AssertionError as error:
            log.debug(error)
            pdb.set_trace()
        embeddings[idx] = word2vec[word]
    embeddings[vocab.get_token_index("@@PADDING@@")] = 0.
    embeddings = torch.FloatTensor(embeddings)
    log.info("\tFinished loading embeddings")
    return embeddings

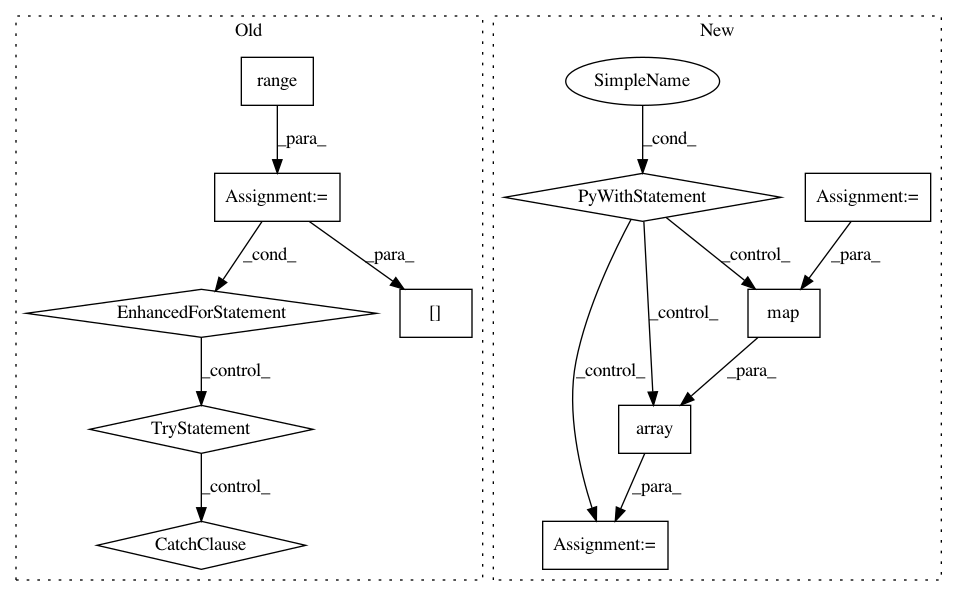

After Change

    """Get embeddings for the words in vocab"""
    word_v_size, unk_idx = vocab.get_vocab_size("tokens"), vocab.get_token_index(vocab._oov_token)
    embeddings = np.random.randn(word_v_size, d_word)  # np.zeros((word_v_size, d_word))
    with open(vec_file) as vec_fh:
        for line in vec_fh:
            word, vec = line.split(" ", 1)
            idx = vocab.get_token_index(word)
            if idx != unk_idx:
                idx = vocab.get_token_index(word)
                embeddings[idx] = np.array(list(map(float, vec.split())))
    embeddings[vocab.get_token_index("@@PADDING@@")] = 0.
    embeddings = torch.FloatTensor(embeddings)
    log.info("\tFinished loading embeddings")
    return embeddings
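
The After Change body depends on objects defined elsewhere in the project (`vocab`, `vec_file`, `log`, `torch`), so it cannot be run in isolation. The following is a minimal, self-contained sketch of the same streaming approach, not the project's actual code. It assumes a plain dict (`token_to_index`) in place of the vocabulary object, an in-memory `io.StringIO` in place of the vector file, and it omits the `torch.FloatTensor` conversion. The names `load_embeddings`, `token_to_index`, and `seed` are hypothetical.

```python
import io

import numpy as np


def load_embeddings(vec_fh, token_to_index, d_word,
                    pad_token="@@PADDING@@", unk_token="@@UNKNOWN@@", seed=0):
    """Build a (vocab_size, d_word) matrix from a 'word v1 v2 ...' text stream.

    Hypothetical helper: `token_to_index` is a plain dict standing in for
    the vocabulary object used in the original function.
    """
    rng = np.random.default_rng(seed)
    # Random initialisation, so words absent from the vector file keep a
    # non-zero row instead of an all-zero one.
    embeddings = rng.standard_normal((len(token_to_index), d_word))
    unk_idx = token_to_index[unk_token]

    for line in vec_fh:
        word, vec = line.rstrip().split(" ", 1)
        # Words outside the vocabulary resolve to unk_idx and are skipped.
        idx = token_to_index.get(word, unk_idx)
        if idx != unk_idx:
            embeddings[idx] = np.array([float(x) for x in vec.split()])

    # The padding row is always zeroed, as in the original function.
    embeddings[token_to_index[pad_token]] = 0.0
    return embeddings


# Toy data: "bird" is not in the vocabulary, "dog" is not in the vector file.
vocab = {"@@PADDING@@": 0, "@@UNKNOWN@@": 1, "cat": 2, "dog": 3}
vec_file = io.StringIO("cat 1.0 2.0\nbird 9.0 9.0\n")
emb = load_embeddings(vec_file, vocab, d_word=2)
```

Iterating over the vector file rather than over the vocabulary means the full `word2vec` mapping never has to be held in memory, and a vocabulary word with no pretrained vector keeps its random row rather than triggering the assertion and debugger of the Before Change version.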

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 11

Instances

Project Name: jsalt18-sentence-repl/jiant

Commit Name: 2573c649518391ada6214cfc72d20421dfac4072

Time: 2018-03-16

Author: wang.alex.c@gmail.com

File Name: src/preprocess.py

Class Name:

Method Name: get_embeddings

Project Name: uber/ludwig

Commit Name: 5667af96dade79ef77194d519182d4989494b3a4

Time: 2019-08-25

Author: smiryala@uber.com

File Name: ludwig/features/image_feature.py

Class Name: ImageBaseFeature

Method Name: add_feature_data

Project Name: uber/ludwig

Commit Name: 7d9db23a389499c2764fb850cd19f853cc3e8565

Time: 2019-08-08

Author: smiryala@uber.com

File Name: ludwig/features/image_feature.py

Class Name: ImageBaseFeature

Method Name: add_feature_data