5b48f9a9c097d26d395873044ceaa1a0b886682a,solutionbox/code_free_ml/mltoolbox/code_free_ml/analyze.py,,run_local_analysis,#Any#Any#Any#Any#,291

Before Change

float(parsed_line[col_name])))

numerical_results[col_name]["count"] += 1

numerical_results[col_name]["sum"] += float(parsed_line[col_name])

elif transform == constant.IMAGE_TRANSFORM:

pass

elif transform == constant.KEY_TRANSFORM:

pass

else:

raise ValueError("Unknown transform %s" % transform)

# Write the vocab files. Each label is on its own line.

vocab_sizes = {}
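For comparison with the After Change below, the single-transform dispatch visible in the Before Change fragment can be sketched as follows. This is a hypothetical reconstruction, not pydatalab's code. The function names, the transform-name values, and the assumption that `features` maps each column to exactly one transform name are all illustrative. The sketch covers only the branches the fragment shows (numeric statistics, image and key pass-through, and the `ValueError` for anything else).

```python
import collections

# Illustrative stand-ins for the transform names in the `constant` module.
NUMERIC_TRANSFORMS = {"identity", "scale"}
IMAGE_TRANSFORM = "image_to_vec"
KEY_TRANSFORM = "key"


def make_numeric_results():
    """Per-column running min/max/count/sum, created on first access."""
    return collections.defaultdict(
        lambda: {"min": float("inf"), "max": float("-inf"), "count": 0, "sum": 0.0})


def analyze_row_before(parsed_line, features, numerical_results):
    """Hypothetical Before Change dispatch: one transform name per column."""
    for col_name, transform in features.items():
        if transform in NUMERIC_TRANSFORMS:
            value = float(parsed_line[col_name])
            stats = numerical_results[col_name]
            stats["min"] = min(stats["min"], value)
            stats["max"] = max(stats["max"], value)
            stats["count"] += 1
            stats["sum"] += value
        elif transform == IMAGE_TRANSFORM:
            pass  # Image columns need no statistics.
        elif transform == KEY_TRANSFORM:
            pass  # Key columns are passed through without analysis.
        else:
            raise ValueError("Unknown transform %s" % transform)
```

Because each column carries a single transform here, any name outside the handled set is treated as an error rather than silently skipped.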

After Change

parsed_line = dict(zip(header, line))

num_examples += 1

for col_name, transform_set in six.iteritems(inverted_features_target):

# All transforms in transform_set require the same analysis, so look

# at the first transform.

transform_name = next(iter(transform_set))

if transform_name in constant.TEXT_TRANSFORMS:

split_strings = parsed_line[col_name].split(" ")

# If a label is in the row N times, increase its vocab count by 1.

# This is needed for TFIDF, but it's also an interesting stat.

for one_label in set(split_strings):

# Filter out empty strings.

if one_label:

vocabs[col_name][one_label] += 1

elif transform_name in constant.CATEGORICAL_TRANSFORMS:

if parsed_line[col_name]:

vocabs[col_name][parsed_line[col_name]] += 1

elif transform_name in constant.NUMERIC_TRANSFORMS:

if not parsed_line[col_name].strip():

continue

numerical_results[col_name]["min"] = (

min(numerical_results[col_name]["min"],

float(parsed_line[col_name])))

numerical_results[col_name]["max"] = (

max(numerical_results[col_name]["max"],

float(parsed_line[col_name])))

numerical_results[col_name]["count"] += 1

numerical_results[col_name]["sum"] += float(parsed_line[col_name])

# Write the vocab files. Each label is on its own line.

vocab_sizes = {}

for name, label_count in six.iteritems(vocabs):

# Labels is now the string:

# label1,count
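The After Change per-row analysis and the vocab-file step can be sketched end to end as below. This is a minimal sketch under stated assumptions, not pydatalab's implementation: `analyze_rows` and `write_vocab` are hypothetical names, the transform-name sets are illustrative stand-ins for the `constant` module, and `inverted_features` is assumed to map each column to a set of transform names. Only the `label,count` line shape comes from the snippet above (which is truncated there); the most-frequent-first ordering and the CSV quoting of labels are choices made for this sketch.

```python
import collections
import csv
import io

# Illustrative stand-ins for the transform-name groups in the `constant` module.
TEXT_TRANSFORMS = {"bag_of_words", "tfidf"}
CATEGORICAL_TRANSFORMS = {"one_hot", "embedding"}
NUMERIC_TRANSFORMS = {"identity", "scale"}


def analyze_rows(header, rows, inverted_features):
    """Hypothetical After Change analysis.

    `inverted_features` maps each column name to a set of transform names
    that all need the same analysis, so only one of them is inspected.
    """
    vocabs = collections.defaultdict(collections.Counter)
    numerical_results = collections.defaultdict(
        lambda: {"min": float("inf"), "max": float("-inf"), "count": 0, "sum": 0.0})
    num_examples = 0
    for line in rows:
        parsed_line = dict(zip(header, line))
        num_examples += 1
        for col_name, transform_set in inverted_features.items():
            transform_name = next(iter(transform_set))
            value = parsed_line[col_name]
            if transform_name in TEXT_TRANSFORMS:
                # Count each distinct token once per row (document frequency).
                for token in set(value.split(" ")):
                    if token:  # Drop empty strings left by repeated spaces.
                        vocabs[col_name][token] += 1
            elif transform_name in CATEGORICAL_TRANSFORMS:
                if value:  # Empty categorical values are not counted.
                    vocabs[col_name][value] += 1
            elif transform_name in NUMERIC_TRANSFORMS:
                if not value.strip():
                    continue  # Missing numeric value: move on to the next column.
                number = float(value)
                stats = numerical_results[col_name]
                stats["min"] = min(stats["min"], number)
                stats["max"] = max(stats["max"], number)
                stats["count"] += 1
                stats["sum"] += number
    return num_examples, vocabs, numerical_results


def write_vocab(label_count, stream):
    """Write one `label,count` line per label, most frequent first.

    Returns the vocab size. csv quoting protects labels that contain commas.
    """
    writer = csv.writer(stream, lineterminator="\n")
    for label, count in label_count.most_common():
        writer.writerow([label, count])
    return len(label_count)


# Example: analyze two rows and write the text column's vocab to memory.
_, demo_vocabs, _ = analyze_rows(["text"], [["a b a"], ["b"]], {"text": {"tfidf"}})
demo_buf = io.StringIO()
write_vocab(demo_vocabs["text"], demo_buf)
print(demo_buf.getvalue(), end="")  # prints "b,2" then "a,1"
```

Counting the `set` of split tokens records how many rows contain each token (its document frequency), which is the quantity the inverse-document-frequency part of TF-IDF is built from; counting every occurrence would over-weight words repeated within a single row.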

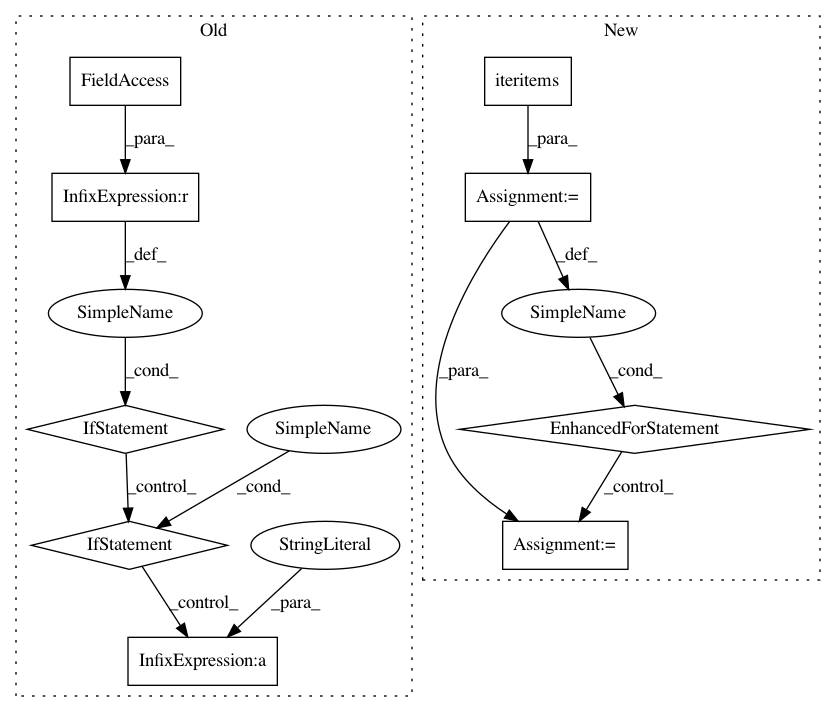

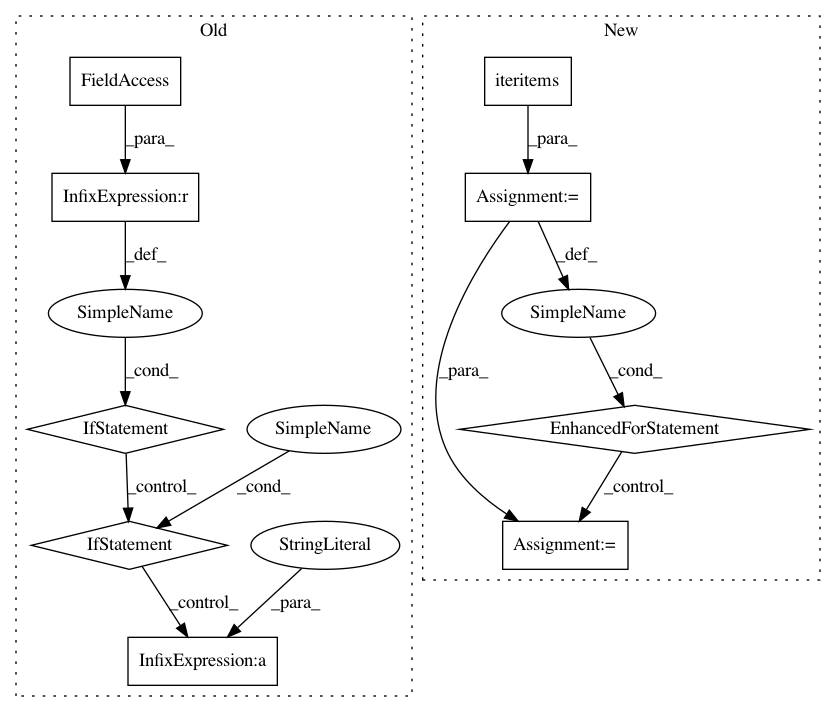

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: googledatalab/pydatalab

Commit Name: 5b48f9a9c097d26d395873044ceaa1a0b886682a

Time: 2017-06-14

Author: brandondutra@google.com

File Name: solutionbox/code_free_ml/mltoolbox/code_free_ml/analyze.py

Class Name:

Method Name: run_local_analysis

Project Name: googledatalab/pydatalab

Commit Name: 5b48f9a9c097d26d395873044ceaa1a0b886682a

Time: 2017-06-14

Author: brandondutra@google.com

File Name: solutionbox/code_free_ml/mltoolbox/code_free_ml/analyze.py

Class Name:

Method Name: run_cloud_analysis

Project Name: brian-team/brian2

Commit Name: ee3356fb661d37f06ef09691e8888b97cbcf9209

Time: 2015-03-16

Author: marcel.stimberg@inserm.fr

File Name: brian2/codegen/generators/cpp_generator.py

Class Name: CPPCodeGenerator

Method Name: determine_keywords