1b5a498c2c0283a27428861255743ba7023ca0a3,fairseq/models/fconv.py,,fconv_wmt_en_de,#Any#,490

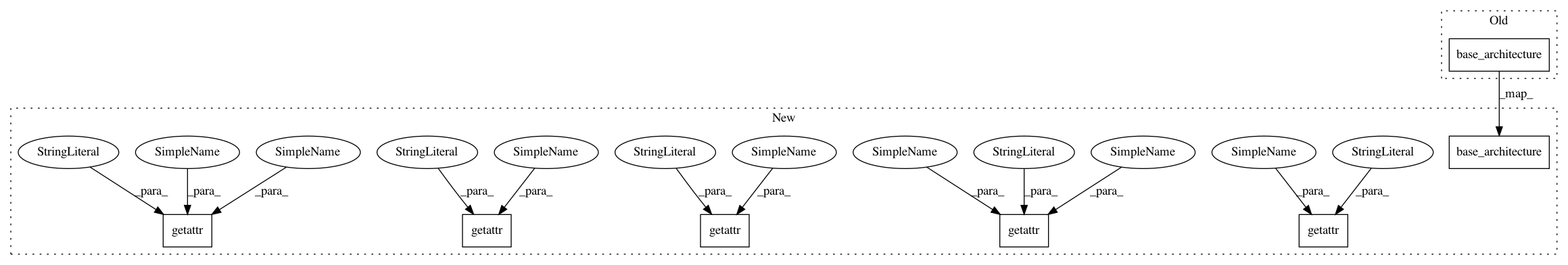

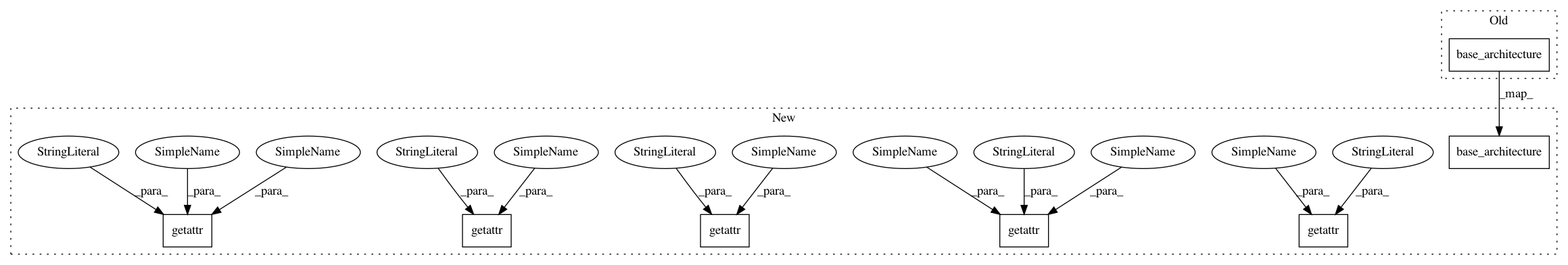

Before Change

@register_model_architecture("fconv", "fconv_wmt_en_de")
def fconv_wmt_en_de(args):
    base_architecture(args)
    convs = "[(512, 3)] * 9"       # first 9 layers have 512 units
    convs += " + [(1024, 3)] * 4"  # next 4 layers have 1024 units
    convs += " + [(2048, 1)] * 2"  # final 2 layers use 1x1 convolutions
    args.encoder_embed_dim = 768
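The sketch below shows why the before-change ordering is a problem. `base_architecture(args)` runs first, and `args.encoder_embed_dim = 768` is then assigned unconditionally, so any value the user supplied is overwritten. The sketch makes a few assumptions. `base_architecture` here is a hypothetical, simplified stand-in, and its 512 default is illustrative, not taken from fairseq. A plain `argparse.Namespace` stands in for parsed command-line arguments.

```python
from argparse import Namespace


def base_architecture(args):
    # Hypothetical, simplified stand-in: fills a default only if the
    # attribute is missing (the 512 value is illustrative).
    args.encoder_embed_dim = getattr(args, "encoder_embed_dim", 512)


def fconv_wmt_en_de_before(args):
    # Hypothetical name; mirrors the "Before Change" ordering:
    # generic defaults first, then an unconditional assignment.
    base_architecture(args)
    args.encoder_embed_dim = 768  # overwrites whatever the user passed


# Pretend the user asked for a 1024-dimensional encoder embedding.
user_args = Namespace(encoder_embed_dim=1024)
fconv_wmt_en_de_before(user_args)
print(user_args.encoder_embed_dim)  # 768: the user's 1024 is lost
```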

After Change

    convs += " + [(1024, 3)] * 4"  # next 4 layers have 1024 units
    convs += " + [(2048, 1)] * 2"  # final 2 layers use 1x1 convolutions
    args.encoder_embed_dim = getattr(args, "encoder_embed_dim", 768)
    args.encoder_layers = getattr(args, "encoder_layers", convs)
    args.decoder_embed_dim = getattr(args, "decoder_embed_dim", 768)
    args.decoder_layers = getattr(args, "decoder_layers", convs)
    args.decoder_out_embed_dim = getattr(args, "decoder_out_embed_dim", 512)
    base_architecture(args)

@register_model_architecture("fconv", "fconv_wmt_en_fr")
def fconv_wmt_en_fr(args):
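The sketch below illustrates the after-change pattern. Each architecture-specific value is set with `getattr(args, name, default)`, so it only applies when the user did not provide one. `base_architecture(args)` is called last to fill whatever is still missing.

It rests on a few assumptions:
- `base_architecture` is a hypothetical, simplified stand-in.
- `fconv_wmt_en_de_after` is a hypothetical name.
- Options the user did not pass must be absent from the namespace. Here that is arranged with the standard-library `argparse.ArgumentParser(argument_default=argparse.SUPPRESS)`. Whether fairseq configures its parser exactly this way is not stated in the source.

```python
import argparse


def base_architecture(args):
    # Hypothetical, simplified stand-in: generic defaults, applied only
    # to attributes that are still missing (values are illustrative).
    args.encoder_embed_dim = getattr(args, "encoder_embed_dim", 512)
    args.decoder_embed_dim = getattr(args, "decoder_embed_dim", 512)


def fconv_wmt_en_de_after(args):
    # Hypothetical name; mirrors the "After Change" ordering.
    convs = "[(512, 3)] * 9"       # first 9 layers have 512 units
    convs += " + [(1024, 3)] * 4"  # next 4 layers have 1024 units
    convs += " + [(2048, 1)] * 2"  # final 2 layers use 1x1 convolutions
    args.encoder_embed_dim = getattr(args, "encoder_embed_dim", 768)
    args.encoder_layers = getattr(args, "encoder_layers", convs)
    args.decoder_embed_dim = getattr(args, "decoder_embed_dim", 768)
    args.decoder_layers = getattr(args, "decoder_layers", convs)
    args.decoder_out_embed_dim = getattr(args, "decoder_out_embed_dim", 512)
    base_architecture(args)  # generic defaults last


# SUPPRESS keeps unset options out of the namespace, so getattr treats
# them as missing instead of seeing None.
parser = argparse.ArgumentParser(argument_default=argparse.SUPPRESS)
parser.add_argument("--encoder-embed-dim", type=int)
parser.add_argument("--decoder-embed-dim", type=int)

args = parser.parse_args(["--encoder-embed-dim", "1024"])
fconv_wmt_en_de_after(args)
print(args.encoder_embed_dim, args.decoder_embed_dim)  # 1024 768
```

Defaults are layered from most specific to most generic. The user's value wins, then the architecture's `getattr` default, then `base_architecture`. Each layer only fills attributes that are still unset.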

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: pytorch/fairseq

Commit Name: 1b5a498c2c0283a27428861255743ba7023ca0a3

Time: 2018-06-15

Author: alexei.b@gmail.com

File Name: fairseq/models/fconv.py

Class Name:

Method Name: fconv_wmt_en_de

Project Name: pytorch/fairseq

Commit Name: 1b5a498c2c0283a27428861255743ba7023ca0a3

Time: 2018-06-15

Author: alexei.b@gmail.com

File Name: fairseq/models/fconv.py

Class Name:

Method Name: fconv_wmt_en_de

Project Name: elbayadm/attn2d

Commit Name: 1b5a498c2c0283a27428861255743ba7023ca0a3

Time: 2018-06-15

Author: alexei.b@gmail.com

File Name: fairseq/models/fconv.py

Class Name:

Method Name: fconv_wmt_en_fr