
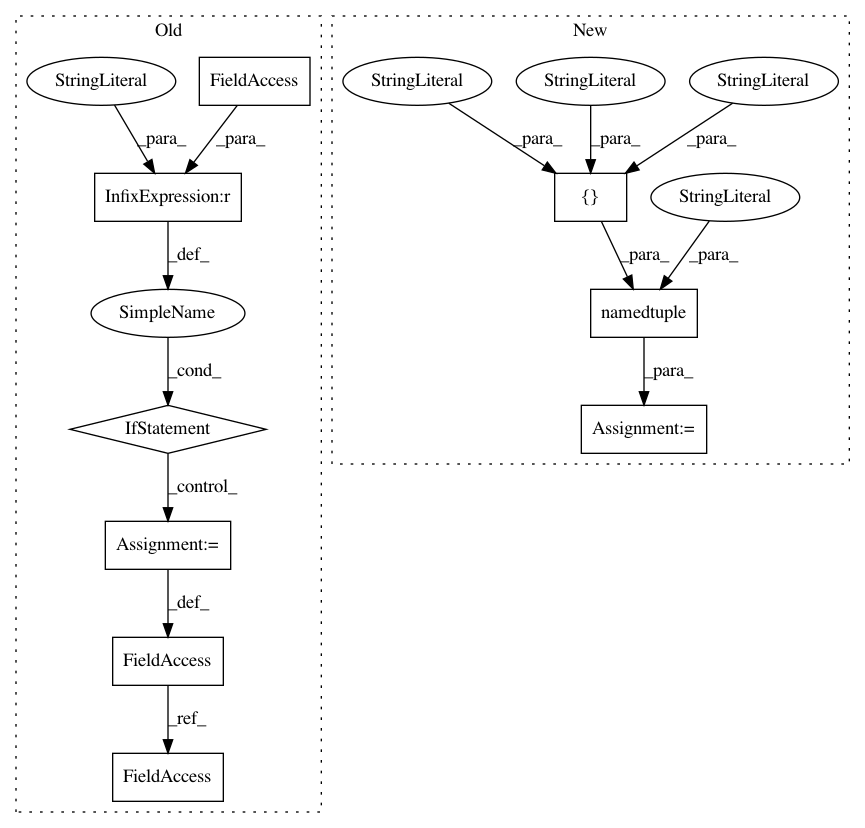

Before Change

```python
        return self.fc(conv_out)


if __name__ == "__main__":
    env = BufferWrapper(ImageWrapper(gym.make("Pong-v4")), n_steps=4)
    net = DQN(env.observation_space.shape, env.action_space.n)
    print(net)
    out = net(Variable(torch.FloatTensor([env.reset()])))
    print(out.size())
    pass
```

After Change

```python
        return self.fc(conv_out)


Experience = collections.namedtuple("Experience", field_names=["state", "action", "reward", "done", "new_state"])


class ExperienceBuffer:
    def __init__(self, capacity):
```
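The After Change excerpt stops at `ExperienceBuffer.__init__(self, capacity)`, so the buffer the commit actually adds is not shown here. As a rough illustration only, a fixed-capacity experience replay buffer is often built on `collections.deque(maxlen=...)` with uniform random sampling. The class name `ReplayBufferSketch` and its `append`/`sample` methods are hypothetical and are not the commit's API; only the `Experience` field layout comes from the excerpt.

```python
import collections
import random

# Same field layout as the Experience namedtuple in the After Change excerpt.
Experience = collections.namedtuple(
    "Experience", field_names=["state", "action", "reward", "done", "new_state"])


class ReplayBufferSketch:
    """Hypothetical fixed-capacity replay buffer (not the commit's ExperienceBuffer)."""

    def __init__(self, capacity):
        # A deque with maxlen silently drops the oldest transition once full.
        self.buffer = collections.deque(maxlen=capacity)

    def __len__(self):
        return len(self.buffer)

    def append(self, experience):
        self.buffer.append(experience)

    def sample(self, batch_size):
        # Uniform sampling without replacement; requires batch_size <= len(self).
        indices = random.sample(range(len(self.buffer)), batch_size)
        batch = [self.buffer[i] for i in indices]
        # Transpose a list of Experience tuples into one list per field.
        states, actions, rewards, dones, new_states = zip(*batch)
        return list(states), list(actions), list(rewards), list(dones), list(new_states)
```

For example, after appending five transitions to `ReplayBufferSketch(capacity=3)`, only the three most recent remain, and `sample(2)` draws two of them at random. Sampling uniformly from a bounded window of past transitions is what breaks up the correlation between consecutive frames in DQN training.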

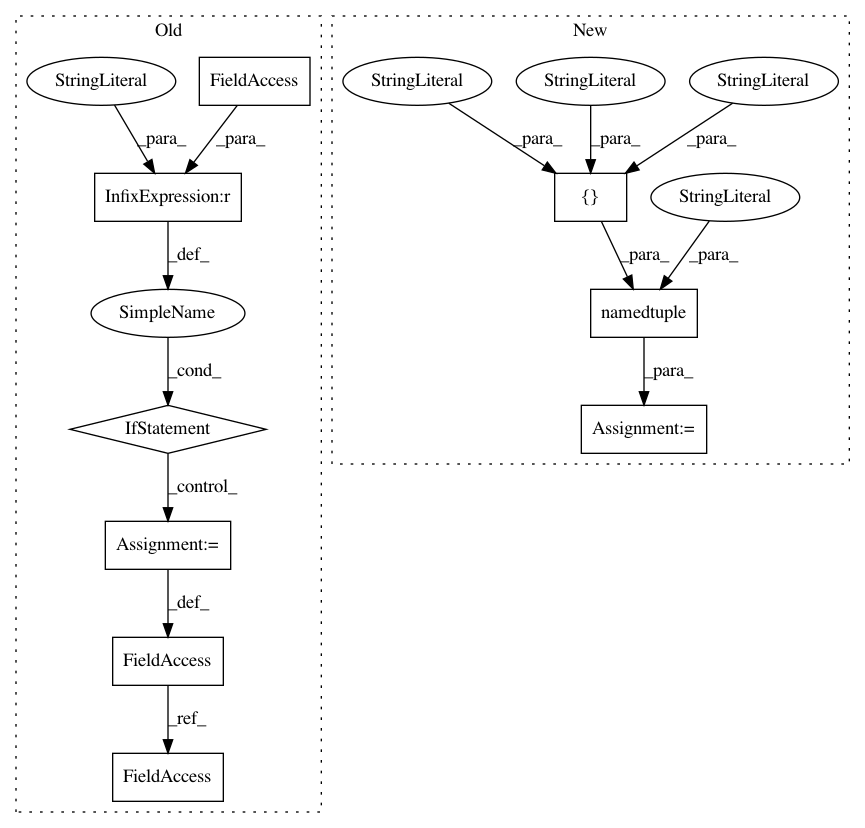

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 3bb0fd78af4f9dbddf7c01dde71844a283099650

Time: 2017-10-16

Author: max.lapan@gmail.com

File Name: ch06/01_dqn_pong.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: a0113631d7e9d6ec65531457ce03e06c902a4d0b

Time: 2017-10-17

Author: max.lapan@gmail.com

File Name: ch06/01_dqn_pong.py

Class Name:

Method Name:

Project Name: biolab/orange3

Commit Name: 7ebf1257d3667e1245048ff5c6cbb7f9a86c4d9a

Time: 2015-09-14

Author: ales.erjavec@fri.uni-lj.si

File Name: Orange/widgets/data/owfile.py

Class Name:

Method Name: