e4b6611cb73ef7658f028831be1aa6bd85ecbed0,src/garage/tf/policies/discrete_qf_derived_policy.py,DiscreteQfDerivedPolicy,get_action,#DiscreteQfDerivedPolicy#Any#,36

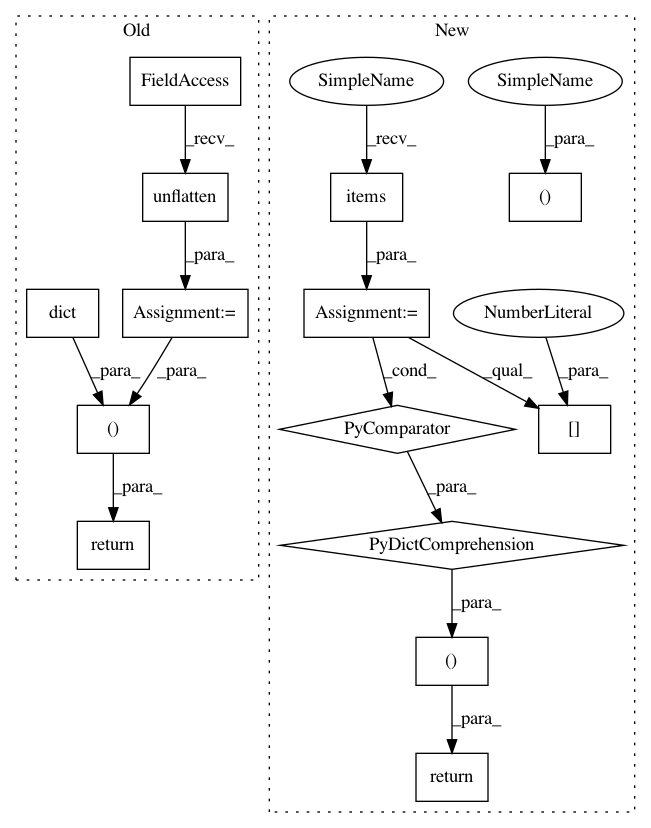

Before Change

if isinstance(self.env_spec.observation_space, akro.Image) and \

len(observation.shape) < \

len(self.env_spec.observation_space.shape):

observation = self.env_spec.observation_space.unflatten(

observation)

q_vals = self._f_qval([observation])

opt_action = np.argmax(q_vals)

return opt_action, dict()

def get_actions(self, observations):

Get actions from this policy for the input observations.

After Change

dict since there is no parameterization.

opt_actions , agent_infos = self.get_actions([observation])

return opt_actions[0], {k: v[0] for k, v in agent_infos.items()}

def get_actions(self, observations):

Get actions from this policy for the input observations.

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 14

Instances Project Name: rlworkgroup/garage

Commit Name: e4b6611cb73ef7658f028831be1aa6bd85ecbed0

Time: 2020-08-14

Author: 38871737+avnishn@users.noreply.github.com

File Name: src/garage/tf/policies/discrete_qf_derived_policy.py

Class Name: DiscreteQfDerivedPolicy

Method Name: get_action

Project Name: rlworkgroup/garage

Commit Name: e4b6611cb73ef7658f028831be1aa6bd85ecbed0

Time: 2020-08-14

Author: 38871737+avnishn@users.noreply.github.com

File Name: src/garage/tf/policies/gaussian_mlp_policy.py

Class Name: GaussianMLPPolicy

Method Name: get_action

Project Name: rlworkgroup/garage

Commit Name: e4b6611cb73ef7658f028831be1aa6bd85ecbed0

Time: 2020-08-14

Author: 38871737+avnishn@users.noreply.github.com

File Name: src/garage/tf/policies/continuous_mlp_policy.py

Class Name: ContinuousMLPPolicy

Method Name: get_action

Project Name: rlworkgroup/garage

Commit Name: e4b6611cb73ef7658f028831be1aa6bd85ecbed0

Time: 2020-08-14

Author: 38871737+avnishn@users.noreply.github.com

File Name: src/garage/tf/policies/discrete_qf_derived_policy.py

Class Name: DiscreteQfDerivedPolicy

Method Name: get_action