
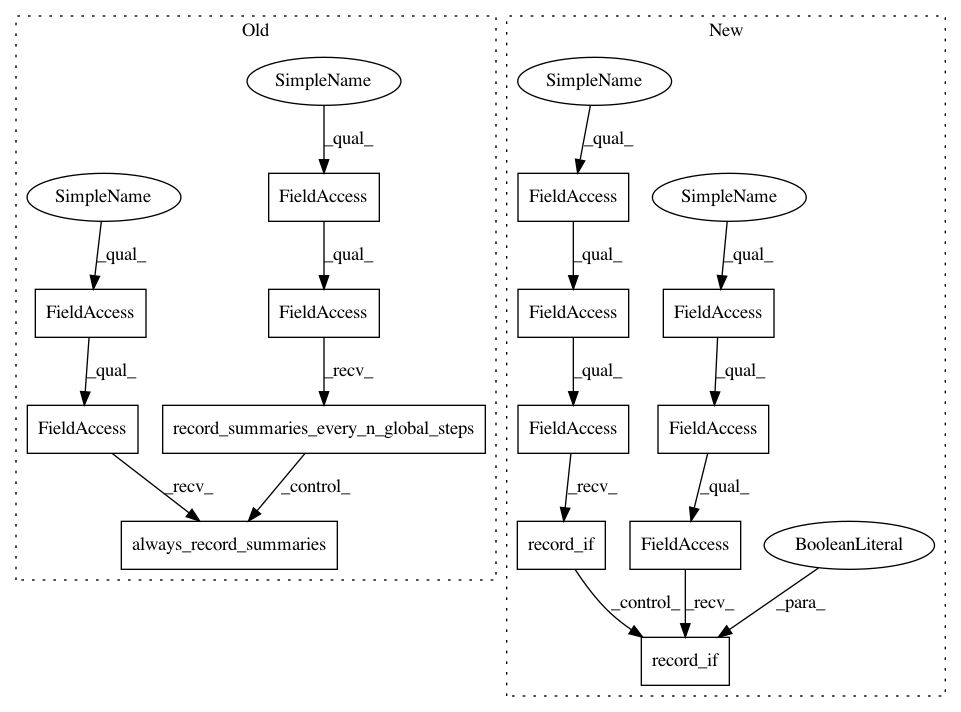

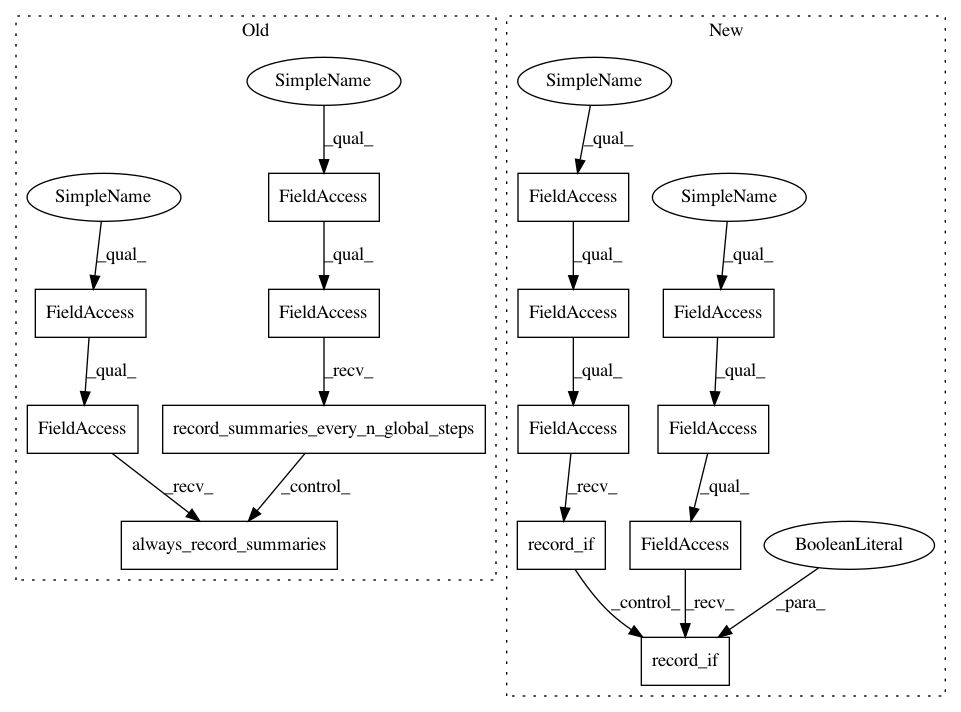

Before Change

    py_metrics.AverageEpisodeLengthMetric(buffer_size=num_eval_episodes),
]

with tf.contrib.summary.record_summaries_every_n_global_steps(
    summary_interval):
  # Create the environment.
  tf_env = tf_py_environment.TFPyEnvironment(suite_mujoco.load(env_name))
  eval_py_env = suite_mujoco.load(env_name)

  # Get the data specs from the environment
  time_step_spec = tf_env.time_step_spec()
  observation_spec = time_step_spec.observation
  action_spec = tf_env.action_spec()

  actor_net = actor_distribution_network.ActorDistributionNetwork(
      observation_spec,
      action_spec,
      fc_layer_params=actor_fc_layers,
      continuous_projection_net=normal_projection_net)
  critic_net = critic_network.CriticNetwork(
      (observation_spec, action_spec),
      observation_fc_layer_params=critic_obs_fc_layers,
      action_fc_layer_params=critic_action_fc_layers,
      joint_fc_layer_params=critic_joint_fc_layers)

  global_step = tf.compat.v1.train.get_or_create_global_step()
  tf_agent = sac_agent.SacAgent(
      time_step_spec,
      action_spec,
      actor_network=actor_net,
      critic_network=critic_net,
      actor_optimizer=tf.compat.v1.train.AdamOptimizer(
          learning_rate=actor_learning_rate),
      critic_optimizer=tf.compat.v1.train.AdamOptimizer(
          learning_rate=critic_learning_rate),
      alpha_optimizer=tf.compat.v1.train.AdamOptimizer(
          learning_rate=alpha_learning_rate),
      target_update_tau=target_update_tau,
      target_update_period=target_update_period,
      td_errors_loss_fn=td_errors_loss_fn,
      gamma=gamma,
      reward_scale_factor=reward_scale_factor,
      gradient_clipping=gradient_clipping,
      debug_summaries=debug_summaries,
      summarize_grads_and_vars=summarize_grads_and_vars,
      train_step_counter=global_step)

  # Make the replay buffer.
  replay_buffer = tf_uniform_replay_buffer.TFUniformReplayBuffer(
      data_spec=tf_agent.collect_data_spec,
      batch_size=1,
      max_length=replay_buffer_capacity)
  replay_observer = [replay_buffer.add_batch]

  eval_py_policy = py_tf_policy.PyTFPolicy(tf_agent.policy)

  train_metrics = [
      tf_metrics.NumberOfEpisodes(),
      tf_metrics.EnvironmentSteps(),
      tf_py_metric.TFPyMetric(py_metrics.AverageReturnMetric()),
      tf_py_metric.TFPyMetric(py_metrics.AverageEpisodeLengthMetric()),
  ]

  collect_policy = tf_agent.collect_policy
  initial_collect_op = dynamic_step_driver.DynamicStepDriver(
      tf_env,
      collect_policy,
      observers=replay_observer + train_metrics,
      num_steps=initial_collect_steps).run()

  collect_op = dynamic_step_driver.DynamicStepDriver(
      tf_env,
      collect_policy,
      observers=replay_observer + train_metrics,
      num_steps=collect_steps_per_iteration).run()

  # Prepare replay buffer as dataset with invalid transitions filtered.
  def _filter_invalid_transition(trajectories, unused_arg1):
    return ~trajectories.is_boundary()[0]

  dataset = replay_buffer.as_dataset(
      sample_batch_size=5 * batch_size,
      num_steps=2).apply(tf.data.experimental.unbatch()).filter(
          _filter_invalid_transition).batch(batch_size).prefetch(
              batch_size * 5)
  dataset_iterator = tf.compat.v1.data.make_initializable_iterator(dataset)
  trajectories, unused_info = dataset_iterator.get_next()
  train_op = tf_agent.train(trajectories)

  for train_metric in train_metrics:
    train_metric.tf_summaries(step_metrics=train_metrics[:2])
  summary_op = tf.contrib.summary.all_summary_ops()

  with eval_summary_writer.as_default(), \
       tf.contrib.summary.always_record_summaries():
    for eval_metric in eval_metrics:
      eval_metric.tf_summaries()

After Change

]

global_step = tf.compat.v1.train.get_or_create_global_step()
with tf.compat.v2.summary.record_if(
    lambda: tf.math.equal(global_step % summary_interval, 0)):
  # Create the environment.
  tf_env = tf_py_environment.TFPyEnvironment(suite_mujoco.load(env_name))
  eval_py_env = suite_mujoco.load(env_name)

  # Get the data specs from the environment
  time_step_spec = tf_env.time_step_spec()
  observation_spec = time_step_spec.observation
  action_spec = tf_env.action_spec()

  actor_net = actor_distribution_network.ActorDistributionNetwork(
      observation_spec,
      action_spec,
      fc_layer_params=actor_fc_layers,
      continuous_projection_net=normal_projection_net)
  critic_net = critic_network.CriticNetwork(
      (observation_spec, action_spec),
      observation_fc_layer_params=critic_obs_fc_layers,
      action_fc_layer_params=critic_action_fc_layers,
      joint_fc_layer_params=critic_joint_fc_layers)

  tf_agent = sac_agent.SacAgent(
      time_step_spec,
      action_spec,
      actor_network=actor_net,
      critic_network=critic_net,
      actor_optimizer=tf.compat.v1.train.AdamOptimizer(
          learning_rate=actor_learning_rate),
      critic_optimizer=tf.compat.v1.train.AdamOptimizer(
          learning_rate=critic_learning_rate),
      alpha_optimizer=tf.compat.v1.train.AdamOptimizer(
          learning_rate=alpha_learning_rate),
      target_update_tau=target_update_tau,
      target_update_period=target_update_period,
      td_errors_loss_fn=td_errors_loss_fn,
      gamma=gamma,
      reward_scale_factor=reward_scale_factor,
      gradient_clipping=gradient_clipping,
      debug_summaries=debug_summaries,
      summarize_grads_and_vars=summarize_grads_and_vars,
      train_step_counter=global_step)

  # Make the replay buffer.
  replay_buffer = tf_uniform_replay_buffer.TFUniformReplayBuffer(
      data_spec=tf_agent.collect_data_spec,
      batch_size=1,
      max_length=replay_buffer_capacity)
  replay_observer = [replay_buffer.add_batch]

  eval_py_policy = py_tf_policy.PyTFPolicy(tf_agent.policy)

  train_metrics = [
      tf_metrics.NumberOfEpisodes(),
      tf_metrics.EnvironmentSteps(),
      tf_py_metric.TFPyMetric(py_metrics.AverageReturnMetric()),
      tf_py_metric.TFPyMetric(py_metrics.AverageEpisodeLengthMetric()),
  ]

  collect_policy = tf_agent.collect_policy
  initial_collect_op = dynamic_step_driver.DynamicStepDriver(
      tf_env,
      collect_policy,
      observers=replay_observer + train_metrics,
      num_steps=initial_collect_steps).run()

  collect_op = dynamic_step_driver.DynamicStepDriver(
      tf_env,
      collect_policy,
      observers=replay_observer + train_metrics,
      num_steps=collect_steps_per_iteration).run()

  # Prepare replay buffer as dataset with invalid transitions filtered.
  def _filter_invalid_transition(trajectories, unused_arg1):
    return ~trajectories.is_boundary()[0]

  dataset = replay_buffer.as_dataset(
      sample_batch_size=5 * batch_size,
      num_steps=2).apply(tf.data.experimental.unbatch()).filter(
          _filter_invalid_transition).batch(batch_size).prefetch(
              batch_size * 5)
  dataset_iterator = tf.compat.v1.data.make_initializable_iterator(dataset)
  trajectories, unused_info = dataset_iterator.get_next()
  train_op = tf_agent.train(trajectories)

  for train_metric in train_metrics:
    train_metric.tf_summaries(step_metrics=train_metrics[:2])
  summary_op = tf.contrib.summary.all_summary_ops()

  with eval_summary_writer.as_default(), \
       tf.compat.v2.summary.record_if(True):
    for eval_metric in eval_metrics:
      eval_metric.tf_summaries()

In pattern: SUPERPATTERN

Frequency: 10

Non-data size: 14

Instances

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/sac/examples/train_eval_mujoco.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/dqn/examples/train_eval_rnn_gym.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/sac/examples/train_eval_mujoco.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/td3/examples/train_eval_mujoco.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/ddpg/examples/train_eval_mujoco.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/ddpg/examples/train_eval_rnn_dm.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/td3/examples/train_eval_rnn_dm.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/dqn/examples/train_eval_gym.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/reinforce/examples/train_eval_gym.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/ppo/examples/train_eval.py

Class Name:

Method Name: train_eval

Project Name: tensorflow/agents

Commit Name: f72a9854c23f9101e75cf7c13fbf8232128a896a

Time: 2019-02-27

Author: gjn@google.com

File Name: tf_agents/agents/dqn/examples/train_eval_atari.py

Class Name: TrainEval

Method Name: __init__