d10e1736bef8954722d0d19631fe42f6b83e8ce6,examples/quickstart.py,,,#,24

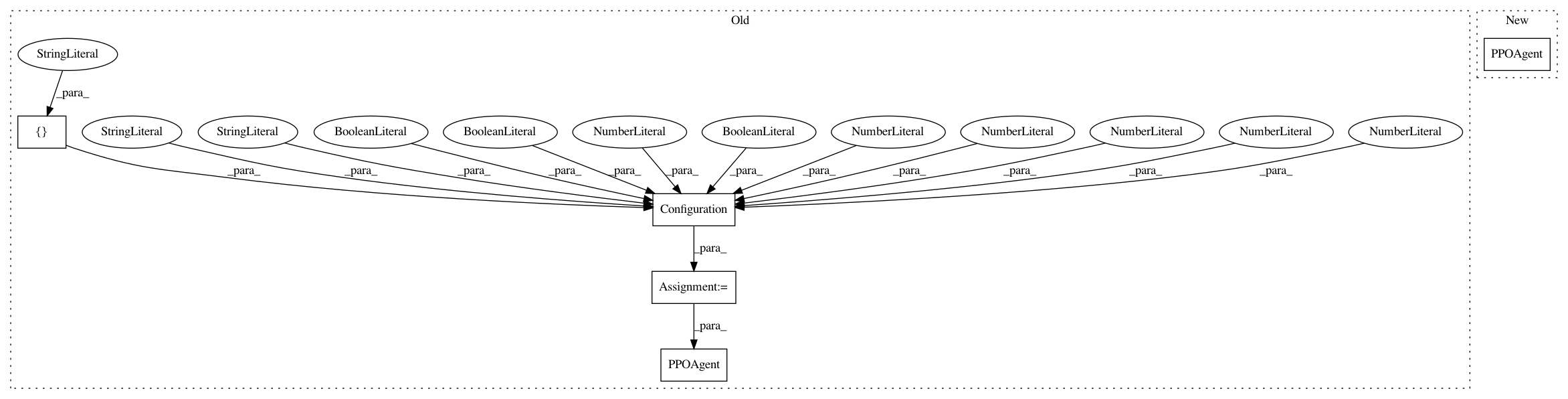

Before Change

    env = OpenAIGym("CartPole-v0")

    config = Configuration(
        batch_size=4096,
        # Agent
        preprocessing=None,
        exploration=None,
        reward_preprocessing=None,
        # BatchAgent
        keep_last_timestep=True,
        # PPOAgent
        step_optimizer=dict(
            type="adam",
            learning_rate=1e-3
        ),
        optimization_steps=10,
        # Model
        scope="ppo",
        discount=0.99,
        # DistributionModel
        distributions=None,  # not documented!!!
        entropy_regularization=0.01,
        # PGModel
        baseline_mode=None,
        baseline=None,
        baseline_optimizer=None,
        gae_lambda=None,
        normalize_rewards=False,
        # PGLRModel
        likelihood_ratio_clipping=0.2,
        # Logging
        log_level="info",
        # TensorFlow Summaries
        summary_logdir=None,
        summary_labels=["total-loss"],
        summary_frequency=1,
        # Distributed
        # TensorFlow distributed configuration
        cluster_spec=None,
        parameter_server=False,
        task_index=0,
        device=None,
        local_model=False,
        replica_model=False,
    )

    # Network as list of layers
    network_spec = [
        dict(type="dense", size=32, activation="tanh"),
        dict(type="dense", size=32, activation="tanh")
    ]

    agent = PPOAgent(
        states_spec=env.states,
        actions_spec=env.actions,
        network_spec=network_spec,
        config=config
    )

    # Create the runner
    runner = Runner(agent=agent, environment=env)

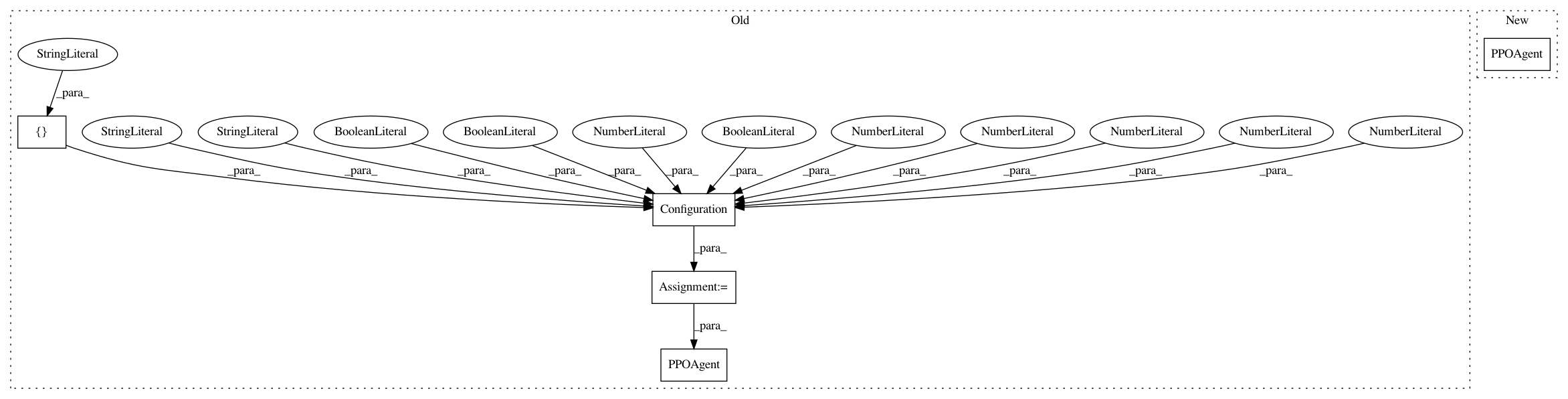

After Change

    # Network as list of layers
    network_spec = [
        dict(type="dense", size=32, activation="tanh"),
        dict(type="dense", size=32, activation="tanh")
    ]

    agent = PPOAgent(
        states_spec=env.states,
        actions_spec=env.actions,
        network_spec=network_spec,
        batch_size=4096,
        # Agent
        preprocessing=None,
        exploration=None,
        reward_preprocessing=None,
        # BatchAgent
        keep_last_timestep=True,
        # PPOAgent
        step_optimizer=dict(
            type="adam",
            learning_rate=1e-3
        ),
        optimization_steps=10,
        # Model
        scope="ppo",
        discount=0.99,
        # DistributionModel
        distributions_spec=None,
        entropy_regularization=0.01,
        # PGModel
        baseline_mode=None,
        baseline=None,
        baseline_optimizer=None,
        gae_lambda=None,
        normalize_rewards=False,
        # PGLRModel
        likelihood_ratio_clipping=0.2,
        summary_spec=None,
        distributed_spec=None
    )

    # Create the runner
    runner = Runner(agent=agent, environment=env)
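
The After Change hunk drops the separate `Configuration` object and passes every setting directly as a keyword argument to `PPOAgent`; it also renames `distributions` to `distributions_spec` and no longer passes `log_level` or the individual summary and distributed options, passing `summary_spec=None` and `distributed_spec=None` instead. A minimal sketch of that migration as a helper, assuming the old settings are available as a plain dict: the function `config_to_agent_kwargs`, the rule for collapsing a group to `None`, and the key names kept inside the two spec dicts are all hypothetical and are not TensorForce's API.

```python
from typing import Any, Dict

# Hypothetical grouping, inferred only from which keys disappear between the
# Before and After snippets; TensorForce's real spec dict keys may differ.
SUMMARY_KEYS = ("summary_logdir", "summary_labels", "summary_frequency")
DISTRIBUTED_KEYS = ("cluster_spec", "parameter_server", "task_index",
                    "device", "local_model", "replica_model")
RENAMED = {"distributions": "distributions_spec"}
DROPPED = ("log_level",)  # not passed in the After snippet


def config_to_agent_kwargs(config: Dict[str, Any]) -> Dict[str, Any]:
    """Turn a flat, old-style config dict into agent keyword arguments
    (hypothetical migration helper)."""
    kwargs: Dict[str, Any] = {}
    summary: Dict[str, Any] = {}
    distributed: Dict[str, Any] = {}
    for key, value in config.items():
        if key in SUMMARY_KEYS:
            summary[key] = value
        elif key in DISTRIBUTED_KEYS:
            distributed[key] = value
        elif key in DROPPED:
            continue
        else:
            kwargs[RENAMED.get(key, key)] = value
    # Assumption: a group is "off" (None) when its key setting is unset,
    # which reproduces summary_spec=None / distributed_spec=None above.
    kwargs["summary_spec"] = summary if summary.get("summary_logdir") else None
    kwargs["distributed_spec"] = (
        distributed if distributed.get("cluster_spec") else None
    )
    return kwargs


# Usage with a subset of the Before Change settings.
old_config = dict(
    batch_size=4096, distributions=None, log_level="info",
    summary_logdir=None, summary_labels=["total-loss"], summary_frequency=1,
    cluster_spec=None, parameter_server=False, task_index=0,
    device=None, local_model=False, replica_model=False,
)
kwargs = config_to_agent_kwargs(old_config)
print(sorted(kwargs))
# ['batch_size', 'distributed_spec', 'distributions_spec', 'summary_spec']
```

The resulting dict could then be unpacked as `PPOAgent(states_spec=env.states, actions_spec=env.actions, network_spec=network_spec, **kwargs)`. Collapsing each group to `None` mirrors the values in the After Change snippet; whether TensorForce accepts enabled spec dicts in this exact shape is an assumption.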

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| reinforceio/tensorforce | d10e1736bef8954722d0d19631fe42f6b83e8ce6 | 2017-11-11 | mi.schaarschmidt@gmail.com | examples/quickstart.py | | |
| reinforceio/tensorforce | 863b8dee69df21ff479b0f28422f2bf2b14f05bd | 2017-10-15 | mi.schaarschmidt@gmail.com | examples/quickstart.py | | |
| reinforceio/tensorforce | ac04dcf9ced65fdd2cafc5c967400cabf32d3c6a | 2017-10-15 | mi.schaarschmidt@gmail.com | tensorforce/tests/test_quickstart_example.py | TestQuickstartExample | test_example |