a214f4e64d9e1f09a6ffdcad5e9595d0534e08f0,keras/layers/recurrent.py,LSTM,build,#LSTM#Any#,880

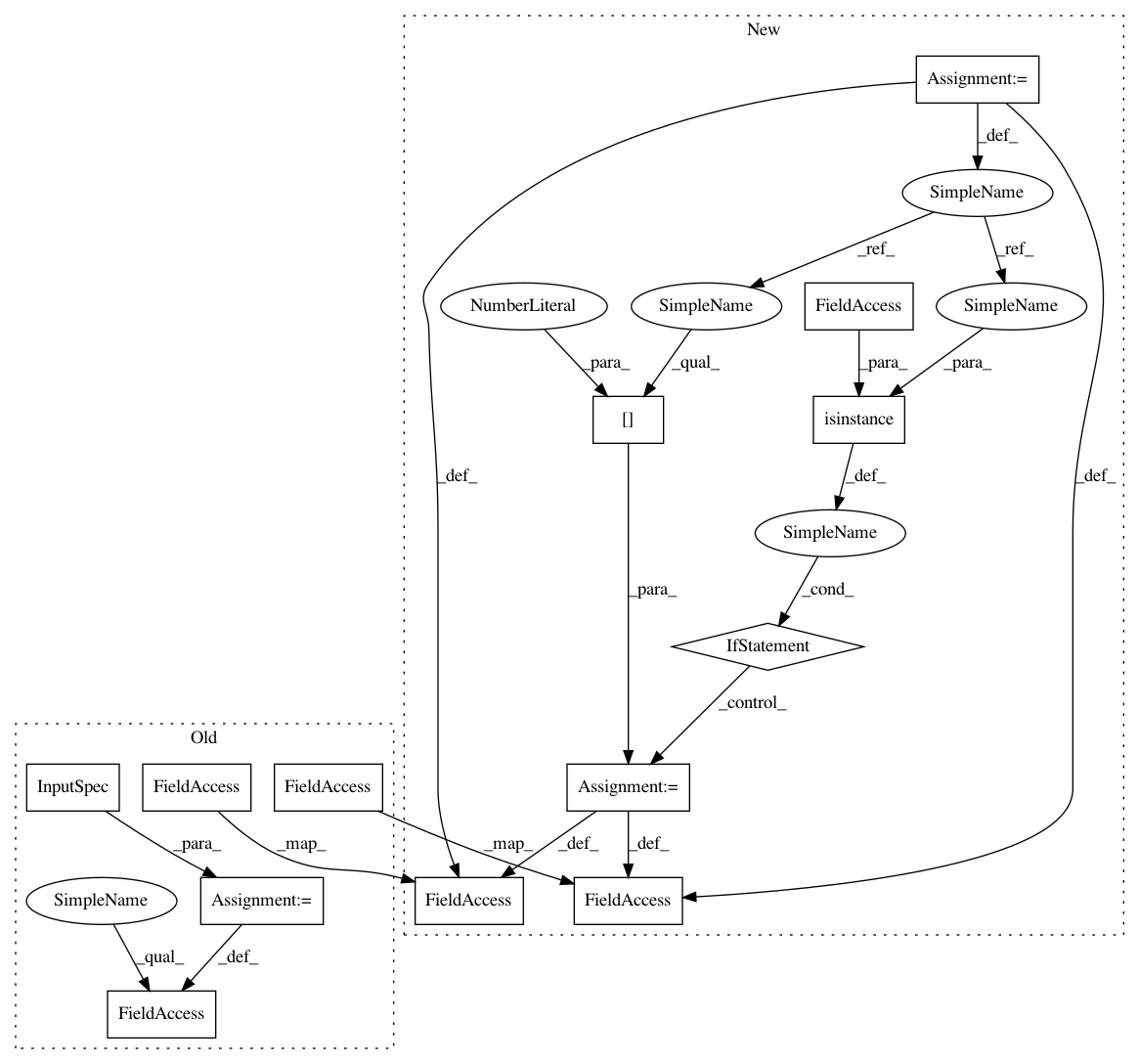

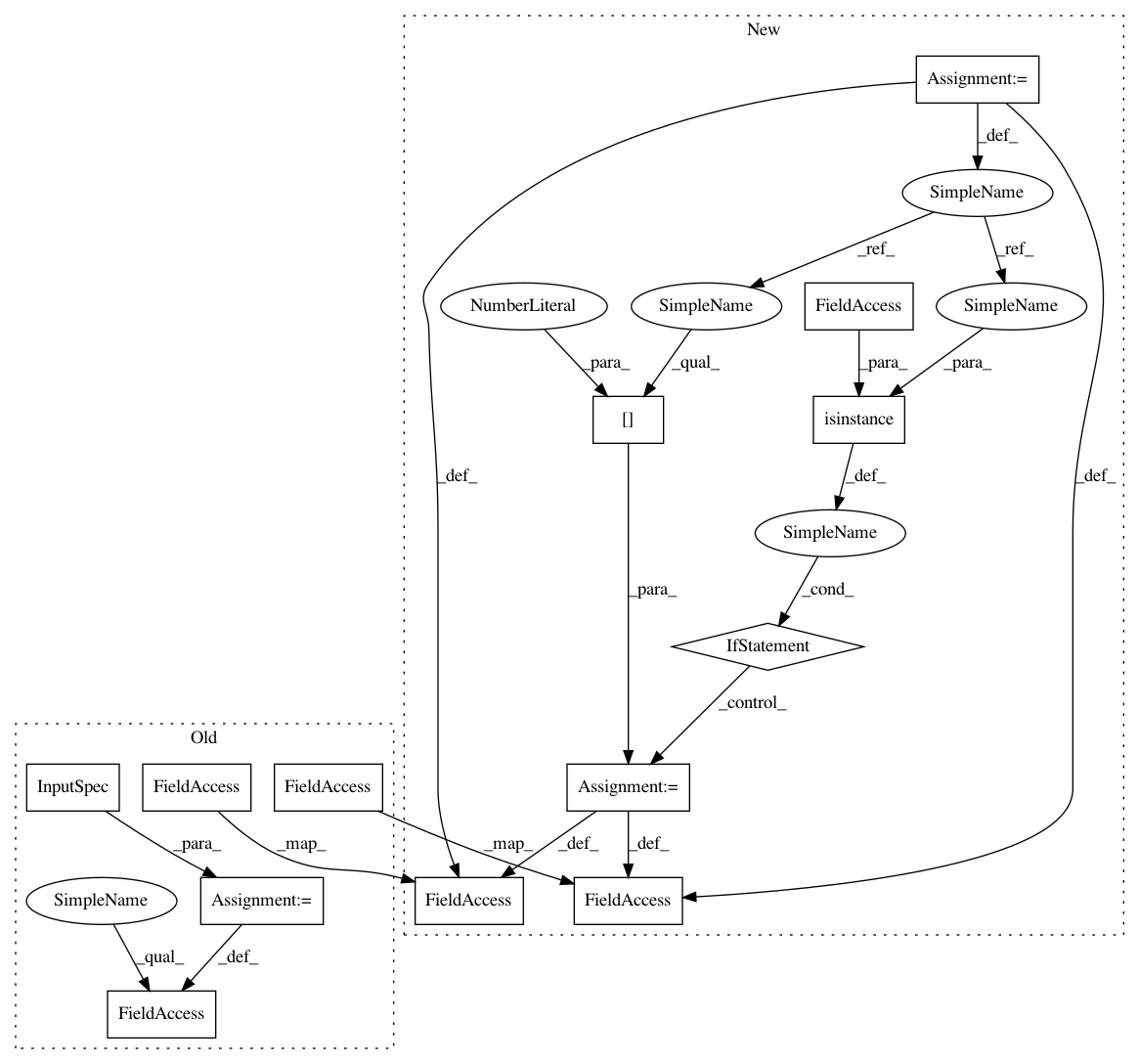

Before Change

        self.recurrent_dropout = min(1., max(0., recurrent_dropout))

    def build(self, input_shape):
        self.input_spec = InputSpec(shape=input_shape)
        self.input_dim = input_shape[2]

        if self.stateful:
            self.reset_states()
        else:
            # initial states: 2 all-zero tensors of shape (output_dim)
            self.states = [None, None]

        self.kernel = self.add_weight((self.input_dim, self.units * 4),
                                      name="kernel",
                                      initializer=self.kernel_initializer,
                                      regularizer=self.kernel_regularizer,
                                      constraint=self.kernel_constraint)
        self.recurrent_kernel = self.add_weight(
            (self.units, self.units * 4),
            name="recurrent_kernel",
            initializer=self.recurrent_initializer,
            regularizer=self.recurrent_regularizer,
            constraint=self.recurrent_constraint)

        if self.use_bias:
            self.bias = self.add_weight((self.units * 4,),
                                        name="bias",
                                        initializer=self.bias_initializer,
                                        regularizer=self.bias_regularizer,
                                        constraint=self.bias_constraint)
            if self.unit_forget_bias:
                self.bias += K.concatenate([K.zeros((self.units,)),
                                            K.ones((self.units,)),
                                            K.zeros((self.units * 2,))])
        else:
            self.bias = None

        self.kernel_i = self.kernel[:, :self.units]
        self.recurrent_kernel_i = self.recurrent_kernel[:, :self.units]
        self.kernel_f = self.kernel[:, self.units: self.units * 2]
        self.recurrent_kernel_f = self.recurrent_kernel[:, self.units: self.units * 2]
        self.kernel_c = self.kernel[:, self.units * 2: self.units * 3]
        self.recurrent_kernel_c = self.recurrent_kernel[:, self.units * 2: self.units * 3]
        self.kernel_o = self.kernel[:, self.units * 3:]
        self.recurrent_kernel_o = self.recurrent_kernel[:, self.units * 3:]

        if self.use_bias:
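
The snippet stores all four gates in one fused weight of width `self.units * 4` and then slices it into the `_i`, `_f`, `_c`, and `_o` parts. A minimal pure-Python sketch of that layout follows. The helper `gate_slices` and the value `units = 3` are illustrative assumptions, not part of the source. Applying the same column ranges to the `unit_forget_bias` offset shows that the block of ones lines up with the `_f` range.

```python
units = 3  # illustrative size, not taken from the source

# Offset added to the bias when unit_forget_bias is set, written with plain
# lists instead of K.zeros / K.ones / K.concatenate.
offset = [0.0] * units + [1.0] * units + [0.0] * (units * 2)


def gate_slices(units):
    """Hypothetical helper: column ranges matching the slicing in build()."""
    return {
        "i": slice(0, units),
        "f": slice(units, units * 2),
        "c": slice(units * 2, units * 3),
        "o": slice(units * 3, units * 4),
    }


# Split the offset vector with the same ranges used for the kernels.
per_gate = {name: offset[s] for name, s in gate_slices(units).items()}
```

Under these assumptions only `per_gate["f"]` contains ones. The source writes the last range open-ended (`self.units * 3:`), which selects the same columns for a weight of width `4 * units`.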

After Change

        self.recurrent_dropout = min(1., max(0., recurrent_dropout))

    def build(self, input_shape):
        if isinstance(input_shape, list):
            input_shape = input_shape[0]
        self.input_dim = input_shape[2]

        if self.stateful:
            self.reset_states()
        else:
            # initial states: 2 all-zero tensors of shape (output_dim)
            self.states = [None, None]

        self.kernel = self.add_weight((self.input_dim, self.units * 4),
                                      name="kernel",
                                      initializer=self.kernel_initializer,
                                      regularizer=self.kernel_regularizer,
                                      constraint=self.kernel_constraint)
        self.recurrent_kernel = self.add_weight(
            (self.units, self.units * 4),
            name="recurrent_kernel",
            initializer=self.recurrent_initializer,
            regularizer=self.recurrent_regularizer,
            constraint=self.recurrent_constraint)

        if self.use_bias:
            self.bias = self.add_weight((self.units * 4,),
                                        name="bias",
                                        initializer=self.bias_initializer,
                                        regularizer=self.bias_regularizer,
                                        constraint=self.bias_constraint)
            if self.unit_forget_bias:
                self.bias += K.concatenate([K.zeros((self.units,)),
                                            K.ones((self.units,)),
                                            K.zeros((self.units * 2,))])
        else:
            self.bias = None

        self.kernel_i = self.kernel[:, :self.units]
        self.recurrent_kernel_i = self.recurrent_kernel[:, :self.units]
        self.kernel_f = self.kernel[:, self.units: self.units * 2]
        self.recurrent_kernel_f = self.recurrent_kernel[:, self.units: self.units * 2]
        self.kernel_c = self.kernel[:, self.units * 2: self.units * 3]
        self.recurrent_kernel_c = self.recurrent_kernel[:, self.units * 2: self.units * 3]
        self.kernel_o = self.kernel[:, self.units * 3:]
        self.recurrent_kernel_o = self.recurrent_kernel[:, self.units * 3:]

        if self.use_bias:
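
The two versions differ only at the top of `build`: the `InputSpec` assignment is replaced by a check that unwraps `input_shape` when it is a list. The sketch below isolates that check. The helper name `normalize_input_shape` and the example shapes are hypothetical. The source does not state the motivation, so reading the list as "sequence input plus extra tensors such as initial states" is an assumption.

```python
def normalize_input_shape(input_shape):
    """Hypothetical helper mirroring the first lines of the updated build().

    When a list of shapes is passed, only the first entry is kept.
    """
    if isinstance(input_shape, list):
        input_shape = input_shape[0]
    return input_shape


# A single (batch, timesteps, features) shape passes through unchanged.
single = normalize_input_shape((None, 10, 32))

# A list of shapes (illustrative values): the first entry is selected.
multi = normalize_input_shape([(None, 10, 32), (None, 64), (None, 64)])

# Same indexing as `self.input_dim = input_shape[2]` in the snippet.
input_dim = multi[2]
```

Without the unwrapping step, `input_shape[2]` on such a list would return the third shape tuple rather than the feature dimension.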

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 13

Instances

Project Name: keras-team/keras

Commit Name: a214f4e64d9e1f09a6ffdcad5e9595d0534e08f0

Time: 2017-03-03

Author: joshuarchin@gmail.com

File Name: keras/layers/recurrent.py

Class Name: LSTM

Method Name: build

Project Name: keras-team/keras

Commit Name: a214f4e64d9e1f09a6ffdcad5e9595d0534e08f0

Time: 2017-03-03

Author: joshuarchin@gmail.com

File Name: keras/layers/recurrent.py

Class Name: GRU

Method Name: build

Project Name: keras-team/keras

Commit Name: a214f4e64d9e1f09a6ffdcad5e9595d0534e08f0

Time: 2017-03-03

Author: joshuarchin@gmail.com

File Name: keras/layers/recurrent.py

Class Name: SimpleRNN

Method Name: build
