6a0d879dd254dcf5ccac86ac9958e203ddb4e1c9,agents/reinforce.py,REINFORCEContinuous,build_network_normal,#REINFORCEContinuous#,183

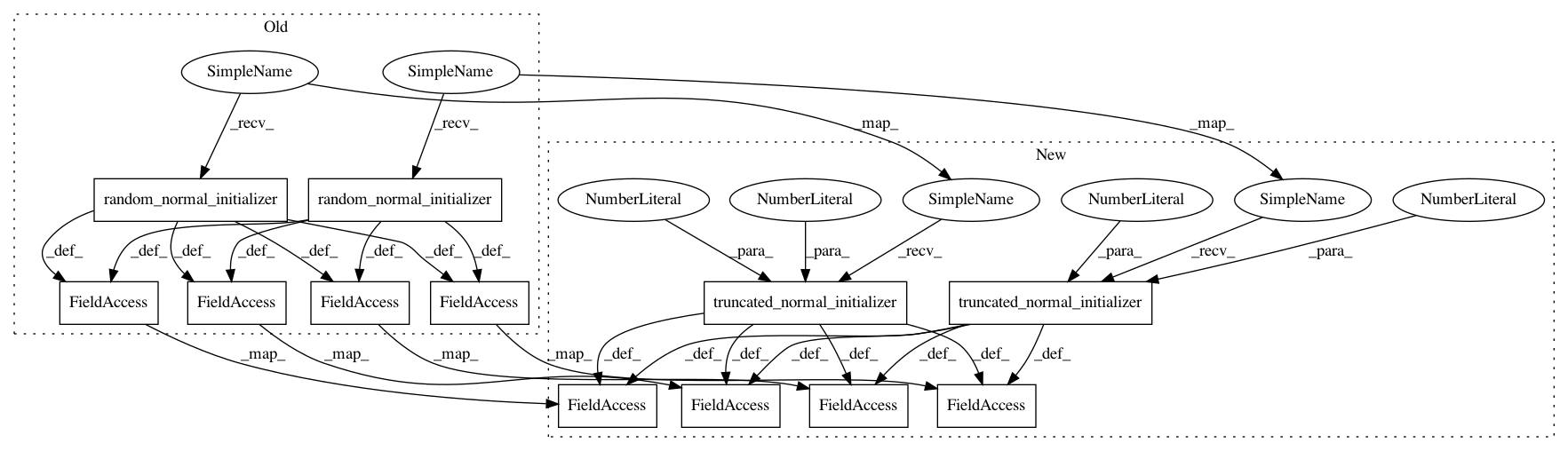

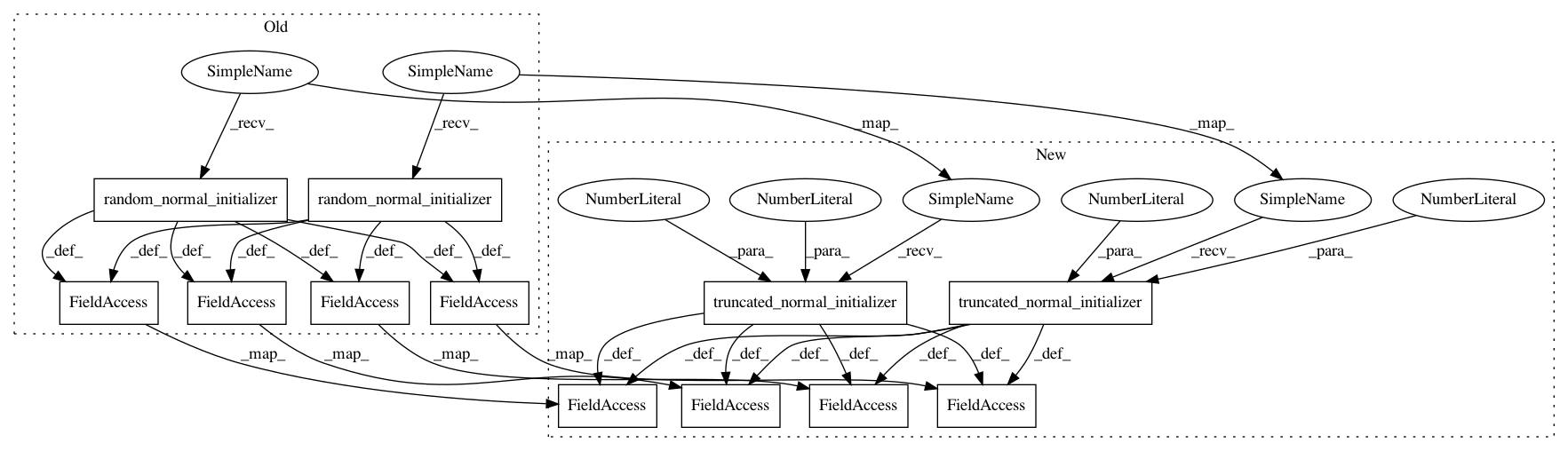

Before Change

inputs=self.states,

num_outputs=self.config["n_hidden_units"],

activation_fn=tf.tanh,

weights_initializer=tf.random_normal_initializer(),

biases_initializer=tf.zeros_initializer())

mu = tf.contrib.layers.fully_connected(

inputs=L1,

num_outputs=1,

activation_fn=None,

weights_initializer=tf.random_normal_initializer(),

biases_initializer=tf.zeros_initializer())

mu = tf.squeeze(mu, name="mu")

sigma_L1 = tf.contrib.layers.fully_connected(

inputs=self.states,

num_outputs=self.config["n_hidden_units"],

activation_fn=tf.tanh,

weights_initializer=tf.random_normal_initializer(),

biases_initializer=tf.zeros_initializer())

sigma = tf.contrib.layers.fully_connected(

inputs=sigma_L1,

num_outputs=1,

activation_fn=None,

weights_initializer=tf.random_normal_initializer(),

biases_initializer=tf.zeros_initializer())

sigma = tf.squeeze(sigma)

sigma = tf.nn.softplus(sigma) + 1e-5

self.normal_dist = tf.contrib.distributions.Normal(mu, sigma)

self.action = self.normal_dist.sample(1)

self.action = tf.clip_by_value(self.action, self.env.action_space.low[0], self.env.action_space.high[0])

loss = -self.normal_dist.log_prob(self.a_n) * self.adv_n

// Add cross entropy cost to encourage exploration

loss -= 1e-1 * self.normal_dist.entropy()

loss = tf.clip_by_value(loss, -1e10, 1e10)

self.summary_loss = tf.reduce_mean(loss)

// optimizer = tf.train.RMSPropOptimizer(learning_rate=self.config["learning_rate"], decay=0.9, epsilon=1e-9)

optimizer = tf.train.AdamOptimizer(learning_rate=self.config["learning_rate"])

self.train = optimizer.minimize(loss, global_step=tf.contrib.framework.get_global_step())

After Change

inputs=self.states,

num_outputs=self.config["n_hidden_units"],

activation_fn=tf.tanh,

weights_initializer=tf.truncated_normal_initializer(mean=0.0, stddev=0.02),

biases_initializer=tf.zeros_initializer())

mu = tf.contrib.layers.fully_connected(

inputs=L1,

num_outputs=1,

activation_fn=None,

weights_initializer=tf.truncated_normal_initializer(mean=0.0, stddev=0.02),

biases_initializer=tf.zeros_initializer())

mu = tf.squeeze(mu, name="mu")

sigma_L1 = tf.contrib.layers.fully_connected(

inputs=self.states,

num_outputs=self.config["n_hidden_units"],

activation_fn=tf.tanh,

weights_initializer=tf.truncated_normal_initializer(mean=0.0, stddev=0.02),

biases_initializer=tf.zeros_initializer())

sigma = tf.contrib.layers.fully_connected(

inputs=sigma_L1,

num_outputs=1,

activation_fn=None,

weights_initializer=tf.truncated_normal_initializer(mean=0.0, stddev=0.02),

biases_initializer=tf.zeros_initializer())

sigma = tf.squeeze(sigma)

sigma = tf.nn.softplus(sigma) + 1e-5

self.normal_dist = tf.contrib.distributions.Normal(mu, sigma)

self.action = self.normal_dist.sample(1)

self.action = tf.clip_by_value(self.action, self.env.action_space.low[0], self.env.action_space.high[0])

loss = -self.normal_dist.log_prob(self.a_n) * self.adv_n

// Add cross entropy cost to encourage exploration

loss -= 1e-1 * self.normal_dist.entropy()

loss = tf.clip_by_value(loss, -1e10, 1e10)

self.summary_loss = tf.reduce_mean(loss)

// optimizer = tf.train.RMSPropOptimizer(learning_rate=self.config["learning_rate"], decay=0.9, epsilon=1e-9)

optimizer = tf.train.AdamOptimizer(learning_rate=self.config["learning_rate"])

self.train = optimizer.minimize(loss, global_step=tf.contrib.framework.get_global_step())

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 12

Instances

Project Name: arnomoonens/yarll

Commit Name: 6a0d879dd254dcf5ccac86ac9958e203ddb4e1c9

Time: 2017-06-02

Author: x-006@hotmail.com

File Name: agents/reinforce.py

Class Name: REINFORCEContinuous

Method Name: build_network_normal

Project Name: arnomoonens/yarll

Commit Name: 6a0d879dd254dcf5ccac86ac9958e203ddb4e1c9

Time: 2017-06-02

Author: x-006@hotmail.com

File Name: agents/reinforce.py

Class Name: REINFORCEContinuous

Method Name: build_network_rnn

Project Name: arnomoonens/yarll

Commit Name: 6a0d879dd254dcf5ccac86ac9958e203ddb4e1c9

Time: 2017-06-02

Author: x-006@hotmail.com

File Name: agents/reinforce.py

Class Name: REINFORCEContinuous

Method Name: build_network_normal

Project Name: arnomoonens/yarll

Commit Name: 6a0d879dd254dcf5ccac86ac9958e203ddb4e1c9

Time: 2017-06-02

Author: x-006@hotmail.com

File Name: agents/a2c.py

Class Name: A2CDiscrete

Method Name: build_networks