eee9f1bbdaa88fa4fde7c3c8096a656804baf9e1,tests/test_tokenizers.py,TestTokenizer,test_tokenize_sentence,#TestTokenizer#,19

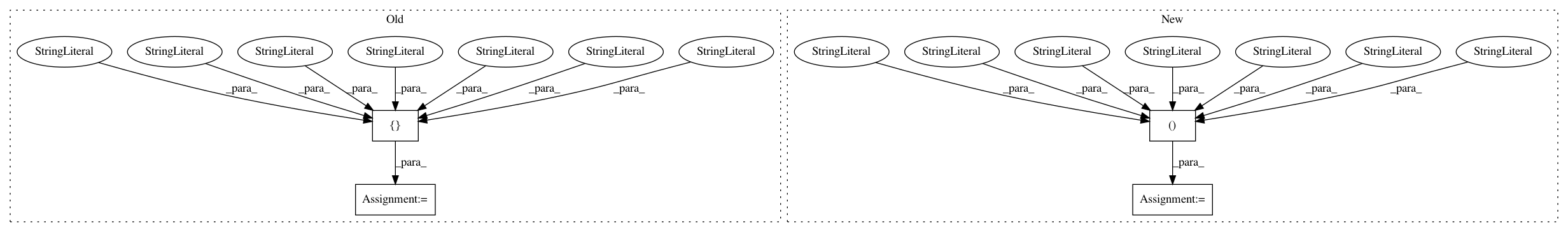

Before Change

        tokenizer = Tokenizer("english")
        words = tokenizer.to_words("I am a very nice sentence with comma, but..")

        expected = [
            "I", "am", "a", "very", "nice", "sentence",
            "with", "comma", ",", "but.."
        ]
        self.assertEqual(expected, words)

    def test_tokenize_paragraph(self):
        tokenizer = Tokenizer("english")

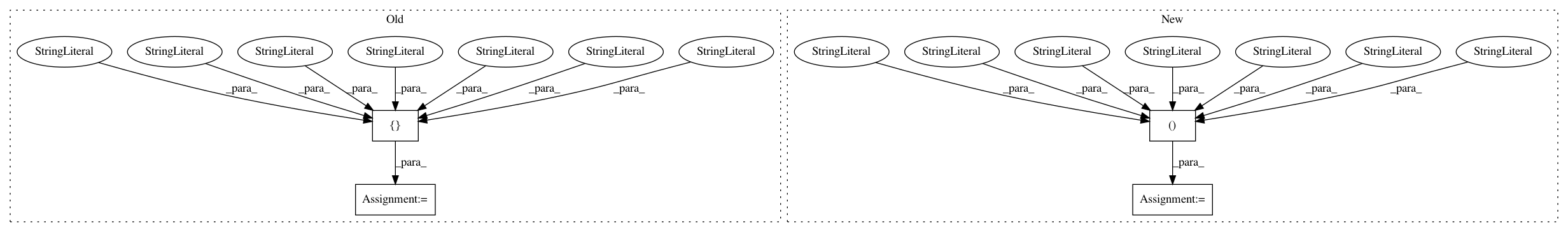

After Change

        tokenizer = Tokenizer("english")
        words = tokenizer.to_words("I am a very nice sentence with comma, but..")

        expected = (
            "I", "am", "a", "very", "nice", "sentence",
            "with", "comma",
        )
        self.assertEqual(expected, words)

    def test_tokenize_paragraph(self):
        tokenizer = Tokenizer("english")
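The After Change expectation differs from the Before Change one in two ways: it is a tuple rather than a list, and the tokens `","` and `"but.."` are gone. The record does not show the tokenizer itself, so the following is only a minimal sketch of behaviour that would satisfy the new assertion, assuming that tokens containing anything other than letters are dropped. The names `WORD_PATTERN` and `keep_word_tokens` are hypothetical and are not taken from the project.

```python
import re

# Hypothetical rule (an assumption, not the project's code):
# a token counts as a word only if it consists of letters alone.
WORD_PATTERN = re.compile(r"[^\W\d_]+")


def keep_word_tokens(tokens):
    """Return the letter-only tokens as a tuple, preserving their order."""
    return tuple(t for t in tokens if WORD_PATTERN.fullmatch(t))


# The token list expected by the Before Change version of the test.
before = ["I", "am", "a", "very", "nice", "sentence", "with", "comma", ",", "but.."]

after = keep_word_tokens(before)
print(after)
```

Under this assumption the result equals the tuple in the After Change test. The expected value has to be a tuple as well, because in Python a tuple never compares equal to a list, so `assertEqual` would fail on a type mismatch even with identical elements.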

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

Project Name: miso-belica/sumy

Commit Name: eee9f1bbdaa88fa4fde7c3c8096a656804baf9e1

Time: 2013-05-21

Author: miso.belica@gmail.com

File Name: tests/test_tokenizers.py

Class Name: TestTokenizer

Method Name: test_tokenize_sentence

Project Name: okfn-brasil/serenata-de-amor

Commit Name: cef99445ab551931fed507518b85034b97c946ee

Time: 2016-11-09

Author: cuducos@gmail.com

File Name: src/search_suspect_places.py

Class Name:

Method Name: write_csv

Project Name: google-research/google-research

Commit Name: 0511917400844ea3182db8c4741efc1049a72b15

Time: 2021-04-02

Author: 57083372+ofirgeri@users.noreply.github.com

File Name: private_sampling/experiments.py

Class Name:

Method Name:

Project Name: IDSIA/sacred

Commit Name: 15c7e996c8b228713becc69cb678885d56e37e7a

Time: 2015-03-01

Author: qwlouse@gmail.com

File Name: sacred/config/__init__.py

Class Name:

Method Name: