171e9e18a10f2daea090bc6f4815db41072d66b6,ch10/03_pong_a2c_rollouts.py,,,#,115

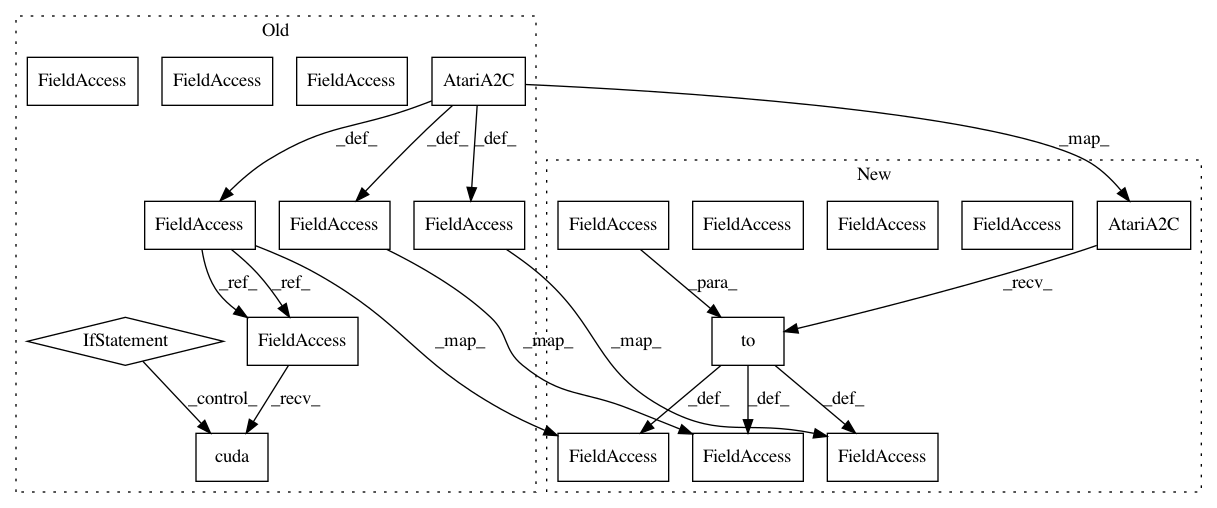

Before Change

writer = SummaryWriter(comment="-pong-a2c-rollouts_" + args.name)

set_seed(20, envs, cuda=args.cuda)

net = AtariA2C(envs[0].observation_space.shape, envs[0].action_space.n)

if args.cuda:

net.cuda()

print(net)

agent = ptan.agent.ActorCriticAgent(net, apply_softmax=True, cuda=args.cuda)

exp_source = ptan.experience.ExperienceSourceRollouts(envs, agent, gamma=GAMMA, steps_count=REWARD_STEPS)

optimizer = optim.RMSprop(net.parameters(), lr=LEARNING_RATE, eps=1e-5)

step_idx = 0

with common.RewardTracker(writer, stop_reward=18) as tracker:

with ptan.common.utils.TBMeanTracker(writer, batch_size=10) as tb_tracker:

for mb_states, mb_rewards, mb_actions, mb_values in exp_source:

// handle new rewards

new_rewards = exp_source.pop_total_rewards()

if new_rewards:

if tracker.reward(np.mean(new_rewards), step_idx):

break

optimizer.zero_grad()

states_v = Variable(torch.from_numpy(mb_states))

mb_adv = mb_rewards - mb_values

adv_v = Variable(torch.from_numpy(mb_adv))

actions_t = torch.from_numpy(mb_actions)

vals_ref_v = Variable(torch.from_numpy(mb_rewards))

if args.cuda:

states_v = states_v.cuda()

adv_v = adv_v.cuda()

actions_t = actions_t.cuda()

vals_ref_v = vals_ref_v.cuda()

logits_v, value_v = net(states_v)

loss_value_v = F.mse_loss(value_v, vals_ref_v)

log_prob_v = F.log_softmax(logits_v, dim=1)

log_prob_actions_v = adv_v * log_prob_v[range(len(mb_states)), actions_t]

loss_policy_v = -log_prob_actions_v.mean()

prob_v = F.softmax(logits_v, dim=1)

entropy_loss_v = (prob_v * log_prob_v).sum(dim=1).mean()

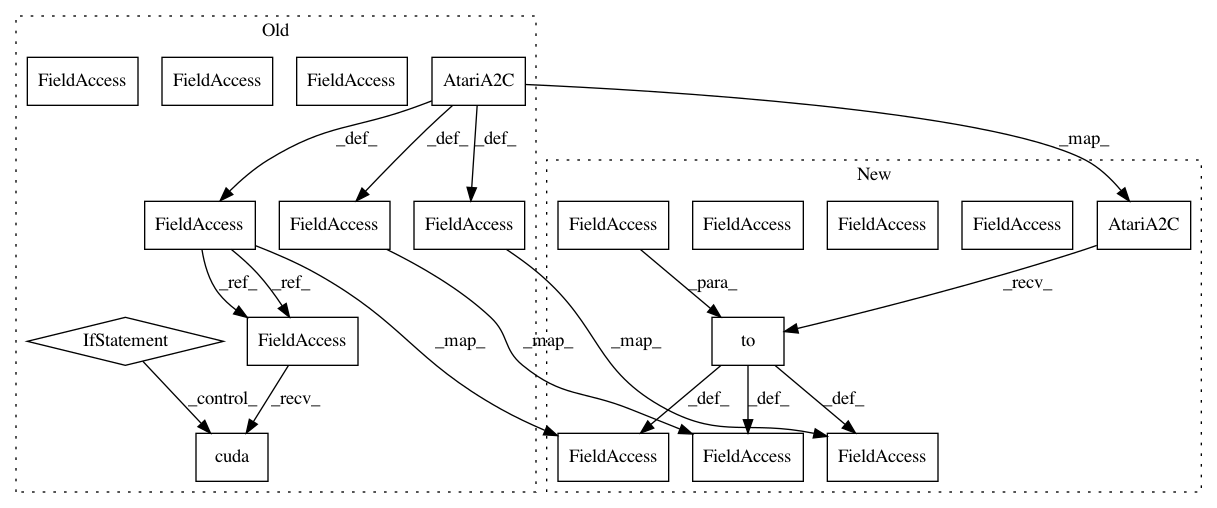

After Change

writer = SummaryWriter(comment="-pong-a2c-rollouts_" + args.name)

set_seed(20, envs, cuda=args.cuda)

net = AtariA2C(envs[0].observation_space.shape, envs[0].action_space.n).to(device)

print(net)

agent = ptan.agent.ActorCriticAgent(net, apply_softmax=True, device=device)

exp_source = ptan.experience.ExperienceSourceRollouts(envs, agent, gamma=GAMMA, steps_count=REWARD_STEPS)

optimizer = optim.RMSprop(net.parameters(), lr=LEARNING_RATE, eps=1e-5)

step_idx = 0

with common.RewardTracker(writer, stop_reward=18) as tracker:

with ptan.common.utils.TBMeanTracker(writer, batch_size=10) as tb_tracker:

for mb_states, mb_rewards, mb_actions, mb_values in exp_source:

// handle new rewards

new_rewards = exp_source.pop_total_rewards()

if new_rewards:

if tracker.reward(np.mean(new_rewards), step_idx):

break

optimizer.zero_grad()

states_v = torch.FloatTensor(mb_states).to(device)

mb_adv = mb_rewards - mb_values

adv_v = torch.FloatTensor(mb_adv).to(device)

actions_t = torch.LongTensor(mb_actions).to(device)

vals_ref_v = torch.FloatTensor(mb_rewards).to(device)

logits_v, value_v = net(states_v)

loss_value_v = F.mse_loss(value_v.squeeze(-1), vals_ref_v)

log_prob_v = F.log_softmax(logits_v, dim=1)

log_prob_actions_v = adv_v * log_prob_v[range(len(mb_states)), actions_t]

loss_policy_v = -log_prob_actions_v.mean()

prob_v = F.softmax(logits_v, dim=1)

entropy_loss_v = (prob_v * log_prob_v).sum(dim=1).mean()

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 19

Instances

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 171e9e18a10f2daea090bc6f4815db41072d66b6

Time: 2018-04-27

Author: max.lapan@gmail.com

File Name: ch10/03_pong_a2c_rollouts.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 171e9e18a10f2daea090bc6f4815db41072d66b6

Time: 2018-04-27

Author: max.lapan@gmail.com

File Name: ch10/02_pong_a2c.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 9e80c11073af48db2876fc943df9264a7ab0488e

Time: 2018-04-29

Author: max.lapan@gmail.com

File Name: ch11/01_a3c_data.py

Class Name:

Method Name: