ca907342507c1139696f542de0a3351d7a382eee,reinforcement_learning/actor_critic.py,,,#,86

Before Change

running_reward = 10
for i_episode in count(1):
    state = env.reset()
    for t in range(10000):  # Don't infinite loop while learning
        action = select_action(state)
        state, reward, done, _ = env.step(action)
        if args.render:
            env.render()
        model.rewards.append(reward)
        if done:
            break

    running_reward = running_reward * 0.99 + t * 0.01
    finish_episode()
    if i_episode % args.log_interval == 0:
        print("Episode {}\tLast length: {:5d}\tAverage length: {:.2f}".format(

After Change

break

if __name__ == "__main__":
    main()
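The change appears to move the module-level training loop into a `main()` function that runs only behind the `if __name__ == "__main__":` guard. The following is a minimal sketch of that pattern, not the actual code from the commit: `run_episode`, `max_steps`, `max_episodes`, and the 20-step episode length are hypothetical stand-ins for the environment, model, and argument parsing, which are not shown in this record.

```python
from itertools import count


def run_episode(max_steps=10000):
    """Hypothetical stand-in for one rollout; returns the index of the last step."""
    for t in range(max_steps):  # bounded so the loop cannot run forever
        done = t >= 19  # assumption: pretend every episode ends after 20 steps
        if done:
            break
    return t


def main(log_interval=10, max_episodes=50):
    """Training loop wrapped in a function instead of running at import time."""
    running_reward = 10
    for i_episode in count(1):
        t = run_episode()
        # Exponential moving average of episode length, as in the snippet above.
        running_reward = running_reward * 0.99 + t * 0.01
        if i_episode % log_interval == 0:
            print("Episode {}\tLast length: {:5d}\tAverage length: {:.2f}".format(
                i_episode, t, running_reward))
        if i_episode >= max_episodes:  # hypothetical stop condition for the sketch
            break
    return running_reward


if __name__ == "__main__":
    main()
```

With this layout, importing the module defines `run_episode` and `main` without starting the loop; the loop runs only when the file is executed as a script.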

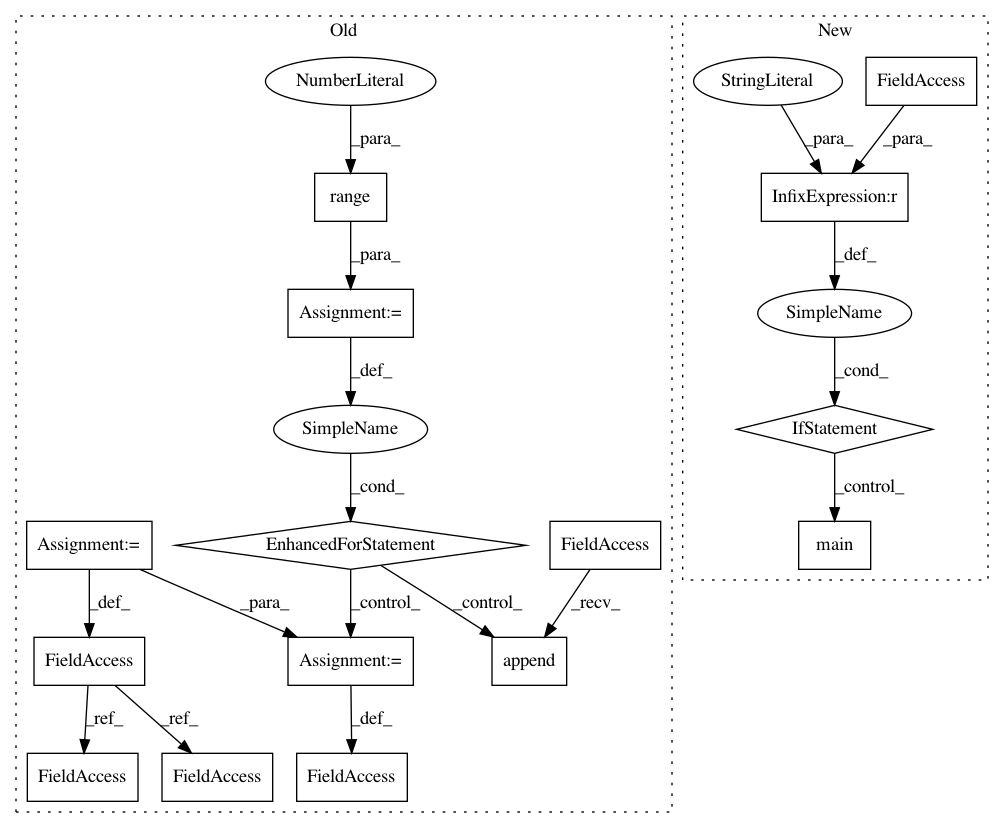

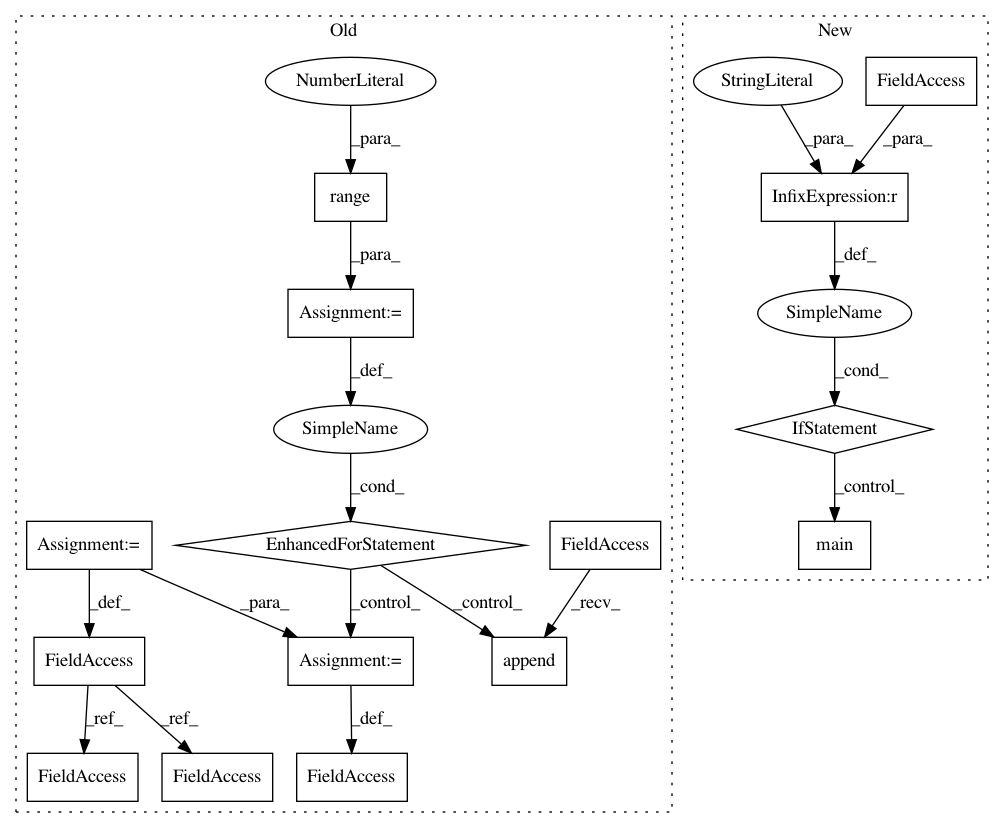

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 15

Instances

Project Name: pytorch/examples

Commit Name: ca907342507c1139696f542de0a3351d7a382eee

Time: 2017-12-04

Author: sgross@fb.com

File Name: reinforcement_learning/actor_critic.py

Class Name:

Method Name:

Project Name: ray-project/ray

Commit Name: be647b69abd7450df6ca2a34a4de25c27d46c0b3

Time: 2020-06-30

Author: xmo@berkeley.edu

File Name: python/ray/serve/benchmarks/noop_latency.py

Class Name:

Method Name:

Project Name: pytorch/examples

Commit Name: ca907342507c1139696f542de0a3351d7a382eee

Time: 2017-12-04

Author: sgross@fb.com

File Name: reinforcement_learning/reinforce.py

Class Name:

Method Name: