2f2cfbf4cccfa0f9b1aaf114baa3c1be3c777f5e,spynnaker/pyNN/models/common/eieio_spike_recorder.py,EIEIOSpikeRecorder,get_spikes,#EIEIOSpikeRecorder#Any#Any#Any#Any#Any#Any#Any#Any#,39

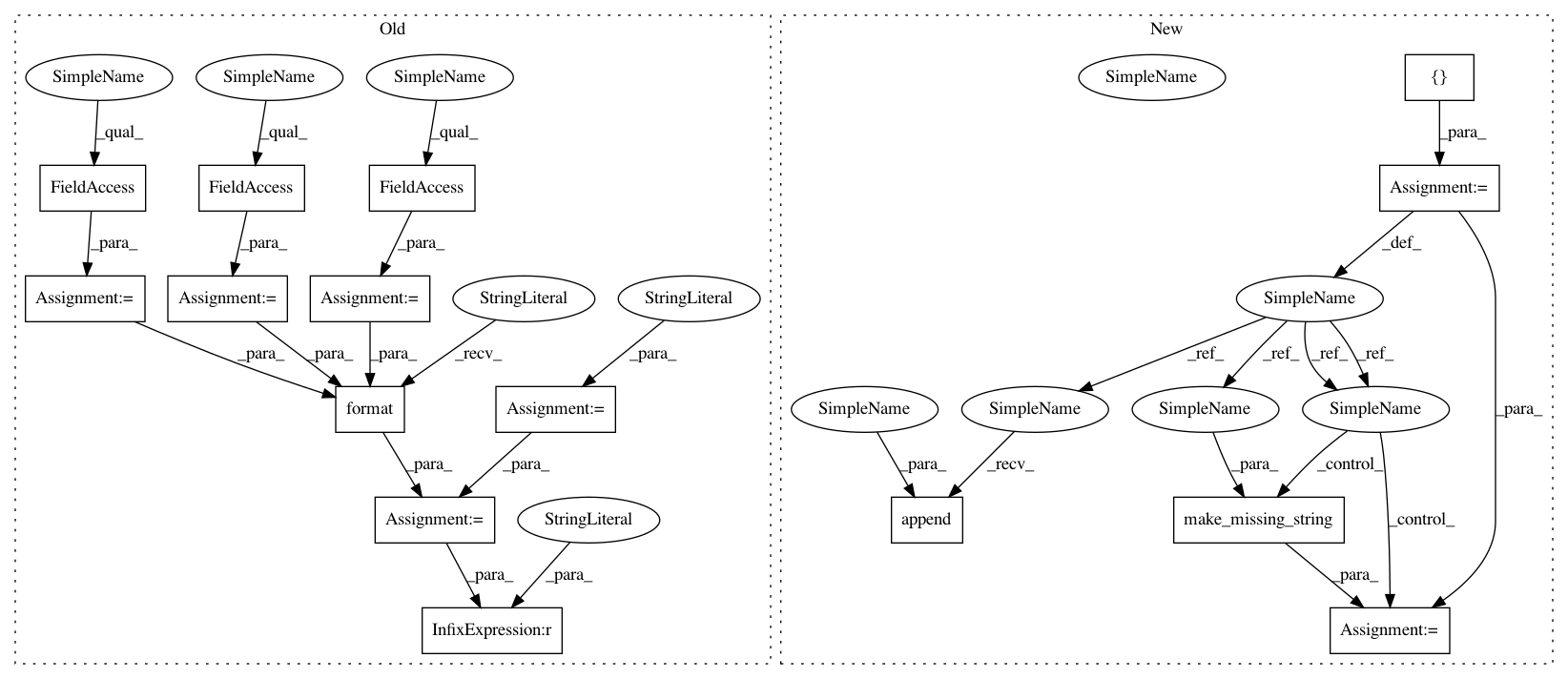

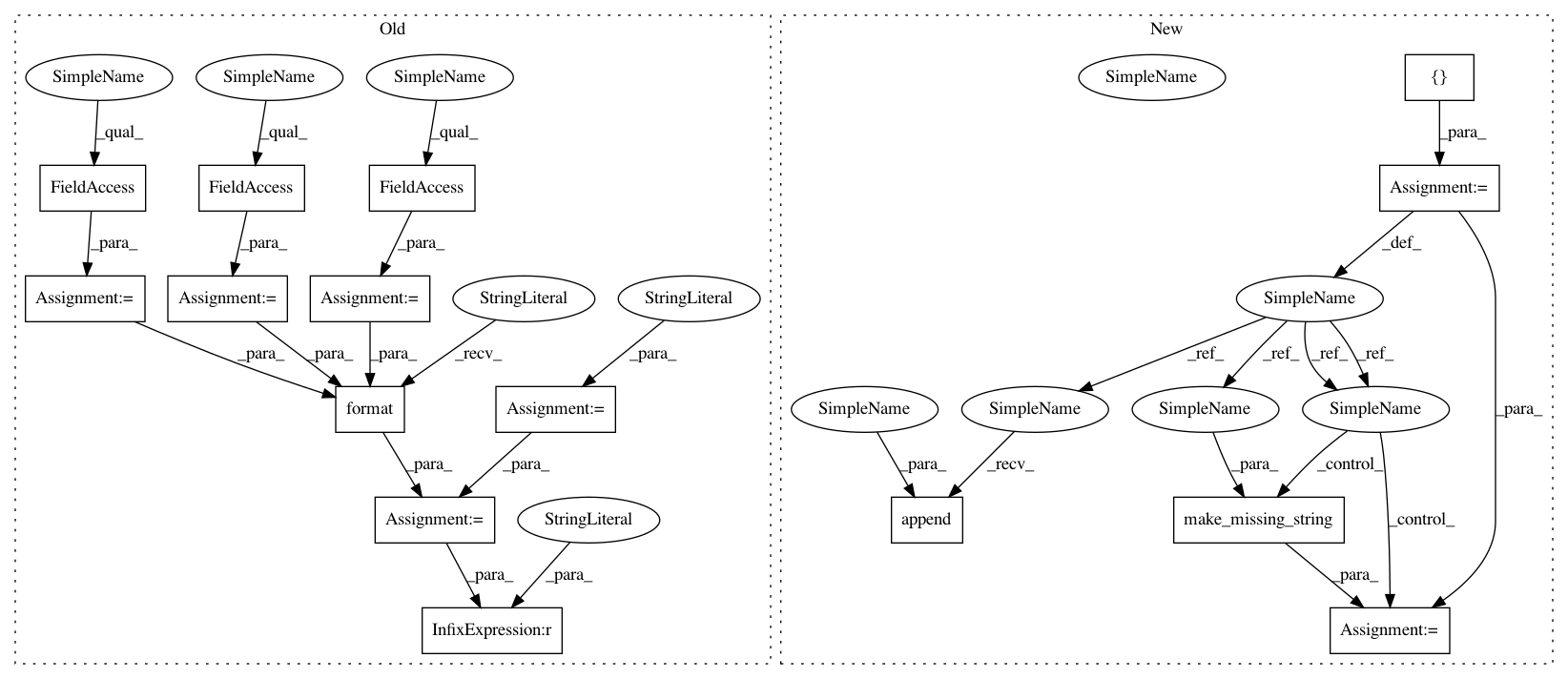

Before Change

            placements, graph_mapper, application_vertex,
            base_key_function, machine_time_step):
        results = list()
        missing_str = ""
        ms_per_tick = machine_time_step / 1000.0
        vertices = graph_mapper.get_machine_vertices(application_vertex)
        progress = ProgressBar(vertices,
                               "Getting spikes for {}".format(label))
        for vertex in progress.over(vertices):
            placement = placements.get_placement_of_vertex(vertex)
            vertex_slice = graph_mapper.get_slice(vertex)
            x = placement.x
            y = placement.y
            p = placement.p
            # Read the spikes
            raw_spike_data, data_missing = \
                buffer_manager.get_data_for_vertex(placement, region)
            if data_missing:
                missing_str += "({}, {}, {}); ".format(x, y, p)
            spike_data = str(raw_spike_data.read_all())
            number_of_bytes_written = len(spike_data)
            offset = 0
            while offset < number_of_bytes_written:
                length = _ONE_WORD.unpack_from(spike_data, offset)[0]
                time = _ONE_WORD.unpack_from(spike_data, offset + 4)[0]
                time *= ms_per_tick
                data_offset = offset + 8
                eieio_header = EIEIODataHeader.from_bytestring(
                    spike_data, data_offset)
                if eieio_header.eieio_type.payload_bytes > 0:
                    raise Exception("Can only read spikes as keys")
                data_offset += eieio_header.size
                timestamps = numpy.repeat([time], eieio_header.count)
                key_bytes = eieio_header.eieio_type.key_bytes
                keys = numpy.frombuffer(
                    spike_data, dtype="<u{}".format(key_bytes),
                    count=eieio_header.count, offset=data_offset)
                neuron_ids = ((keys - base_key_function(vertex)) +
                              vertex_slice.lo_atom)
                offset += length + 8
                results.append(numpy.dstack((neuron_ids, timestamps))[0])
        if missing_str != "":
            logger.warn(
                "Population %s is missing spike data in region %s from the"
                " following cores: %s", label, region, missing_str)
        if not results:
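
The inner `while` loop walks a buffer of length-prefixed records: one word for the payload length, one word for the timestep, then the keys. The sketch below illustrates that walk on a simplified layout. It assumes the payload holds only little-endian 32-bit keys and omits the EIEIO header that the real code decodes with `EIEIODataHeader`; `parse_records` and its parameters are hypothetical names, not part of sPyNNaker.

```python
import struct

import numpy

# One little-endian unsigned 32-bit word (assumed to match _ONE_WORD).
_ONE_WORD = struct.Struct("<I")


def parse_records(spike_data, base_key, lo_atom, ms_per_tick):
    """Hypothetical helper: decode [length][time][keys...] records.

    Simplification: the payload is assumed to contain only 4-byte keys,
    with no EIEIO header in front of them.
    """
    results = []
    offset = 0
    while offset < len(spike_data):
        # Two header words: payload length in bytes, then the timestep.
        length = _ONE_WORD.unpack_from(spike_data, offset)[0]
        time = _ONE_WORD.unpack_from(spike_data, offset + 4)[0] * ms_per_tick
        keys = numpy.frombuffer(
            spike_data, dtype="<u4", count=length // 4, offset=offset + 8)
        # Convert routing keys to neuron ids within the population.
        neuron_ids = (keys - base_key) + lo_atom
        timestamps = numpy.repeat([time], len(keys))
        results.append(numpy.dstack((neuron_ids, timestamps))[0])
        # Skip the two header words plus the payload.
        offset += length + 8
    return numpy.vstack(results) if results else numpy.zeros((0, 2))


# Two records: timestep 2 with two keys, timestep 5 with one key.
data = (struct.pack("<II", 8, 2) + struct.pack("<II", 0x100, 0x102) +
        struct.pack("<II", 4, 5) + struct.pack("<I", 0x101))
spikes = parse_records(data, base_key=0x100, lo_atom=10, ms_per_tick=1.0)
```

Each row of `spikes` is a `(neuron_id, time_in_ms)` pair, which is the shape produced by the `numpy.dstack(...)[0]` call in the method.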

After Change

            base_key_function, machine_time_step):
        # pylint: disable=too-many-arguments
        results = list()
        missing = []
        ms_per_tick = machine_time_step / 1000.0
        vertices = graph_mapper.get_machine_vertices(application_vertex)
        progress = ProgressBar(vertices,
                               "Getting spikes for {}".format(label))
        for vertex in progress.over(vertices):
            placement = placements.get_placement_of_vertex(vertex)
            vertex_slice = graph_mapper.get_slice(vertex)
            # Read the spikes
            raw_spike_data, data_missing = \
                buffer_manager.get_data_for_vertex(placement, region)
            if data_missing:
                missing.append(placement)
            spike_data = str(raw_spike_data.read_all())
            number_of_bytes_written = len(spike_data)
            offset = 0
            while offset < number_of_bytes_written:
                length = _ONE_WORD.unpack_from(spike_data, offset)[0]
                time = _ONE_WORD.unpack_from(spike_data, offset + 4)[0]
                time *= ms_per_tick
                data_offset = offset + 8
                eieio_header = EIEIODataHeader.from_bytestring(
                    spike_data, data_offset)
                if eieio_header.eieio_type.payload_bytes > 0:
                    raise Exception("Can only read spikes as keys")
                data_offset += eieio_header.size
                timestamps = numpy.repeat([time], eieio_header.count)
                key_bytes = eieio_header.eieio_type.key_bytes
                keys = numpy.frombuffer(
                    spike_data, dtype="<u{}".format(key_bytes),
                    count=eieio_header.count, offset=data_offset)
                neuron_ids = ((keys - base_key_function(vertex)) +
                              vertex_slice.lo_atom)
                offset += length + 8
                results.append(numpy.dstack((neuron_ids, timestamps))[0])
        if missing:
            missing_str = recording_utils.make_missing_string(missing)
            logger.warn(
                "Population %s is missing spike data in region %s from the"
                " following cores: %s", label, region, missing_str)
        if not results:
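
The change collects the placements with missing data in a list and defers formatting to `recording_utils.make_missing_string`, whose body is not shown in this record. The sketch below is one plausible shape for such a helper, assuming it reproduces the `(x, y, p)` format from the earlier inline code. The `Placement` tuple is a hypothetical stand-in for the real placement object, and the actual helper may differ.

```python
from collections import namedtuple

# Hypothetical stand-in for a placement; only x, y and p are assumed.
Placement = namedtuple("Placement", ["x", "y", "p"])


def make_missing_string(missing):
    """Assumed behaviour: format placements as "(x, y, p); (x, y, p)"."""
    return "; ".join(
        "({}, {}, {})".format(placement.x, placement.y, placement.p)
        for placement in missing)


missing = [Placement(0, 0, 1), Placement(1, 0, 3)]
missing_str = make_missing_string(missing)
```

Using `"; ".join(...)` avoids the trailing separator that the earlier `missing_str += "...; "` accumulation left at the end of the string, and testing `if missing:` on a list replaces the `!= ""` string comparison.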

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 15

Instances

Project Name: SpiNNakerManchester/sPyNNaker

Commit Name: 2f2cfbf4cccfa0f9b1aaf114baa3c1be3c777f5e

Time: 2017-12-18

Author: donal.k.fellows@manchester.ac.uk

File Name: spynnaker/pyNN/models/common/eieio_spike_recorder.py

Class Name: EIEIOSpikeRecorder

Method Name: get_spikes

Project Name: SpiNNakerManchester/sPyNNaker

Commit Name: 2f2cfbf4cccfa0f9b1aaf114baa3c1be3c777f5e

Time: 2017-12-18

Author: donal.k.fellows@manchester.ac.uk

File Name: spynnaker/pyNN/models/common/spike_recorder.py

Class Name: SpikeRecorder

Method Name: get_spikes

Project Name: SpiNNakerManchester/sPyNNaker

Commit Name: 2f2cfbf4cccfa0f9b1aaf114baa3c1be3c777f5e

Time: 2017-12-18

Author: donal.k.fellows@manchester.ac.uk

File Name: spynnaker/pyNN/models/common/multi_spike_recorder.py

Class Name: MultiSpikeRecorder

Method Name: get_spikes

Project Name: SpiNNakerManchester/sPyNNaker

Commit Name: 2f2cfbf4cccfa0f9b1aaf114baa3c1be3c777f5e

Time: 2017-12-18

Author: donal.k.fellows@manchester.ac.uk

File Name: spynnaker/pyNN/models/common/abstract_uint32_recorder.py

Class Name: AbstractUInt32Recorder

Method Name: get_data