69e3319c4bffaa78823be2267f036d3d3fdf286e,tensorlayer/layers/activation.py,PRelu6,forward,#PRelu6#Any#,151

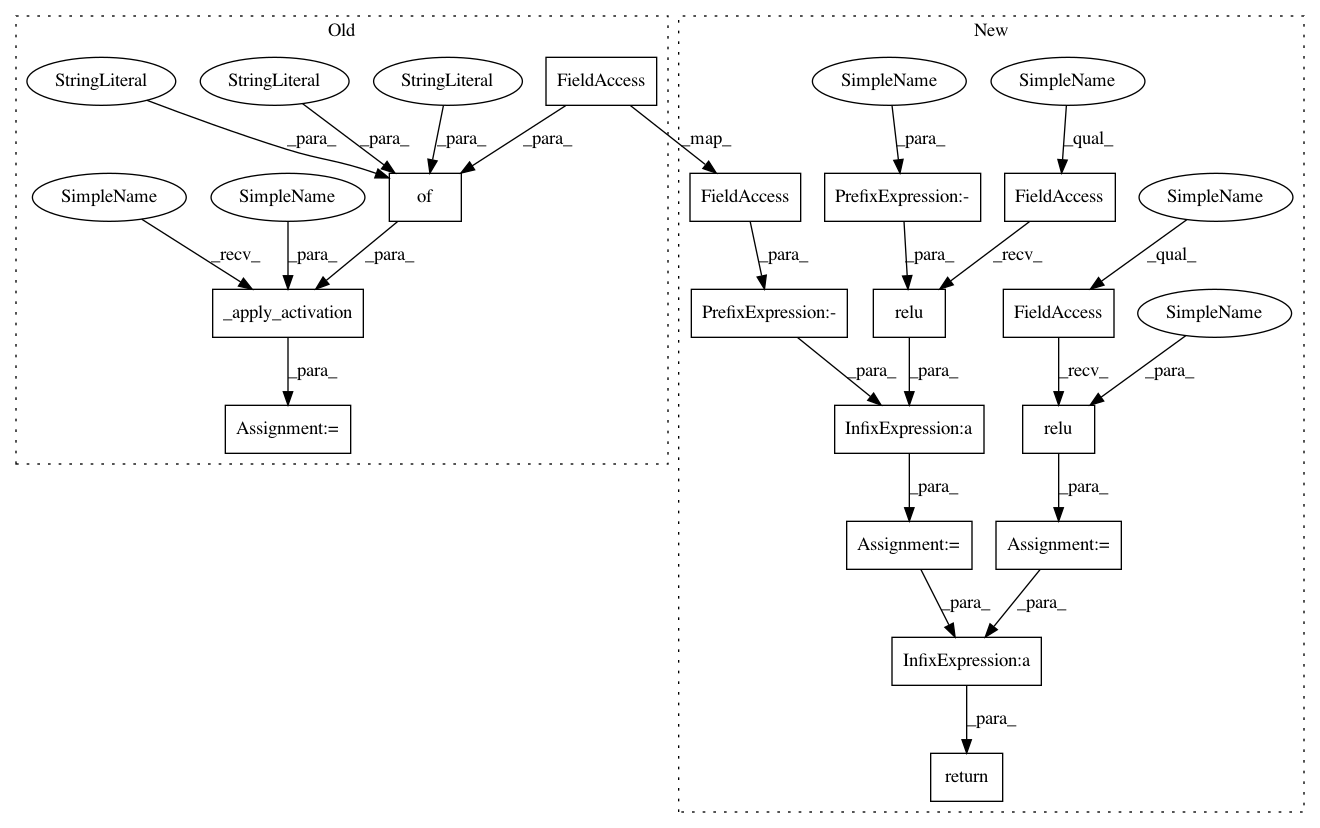

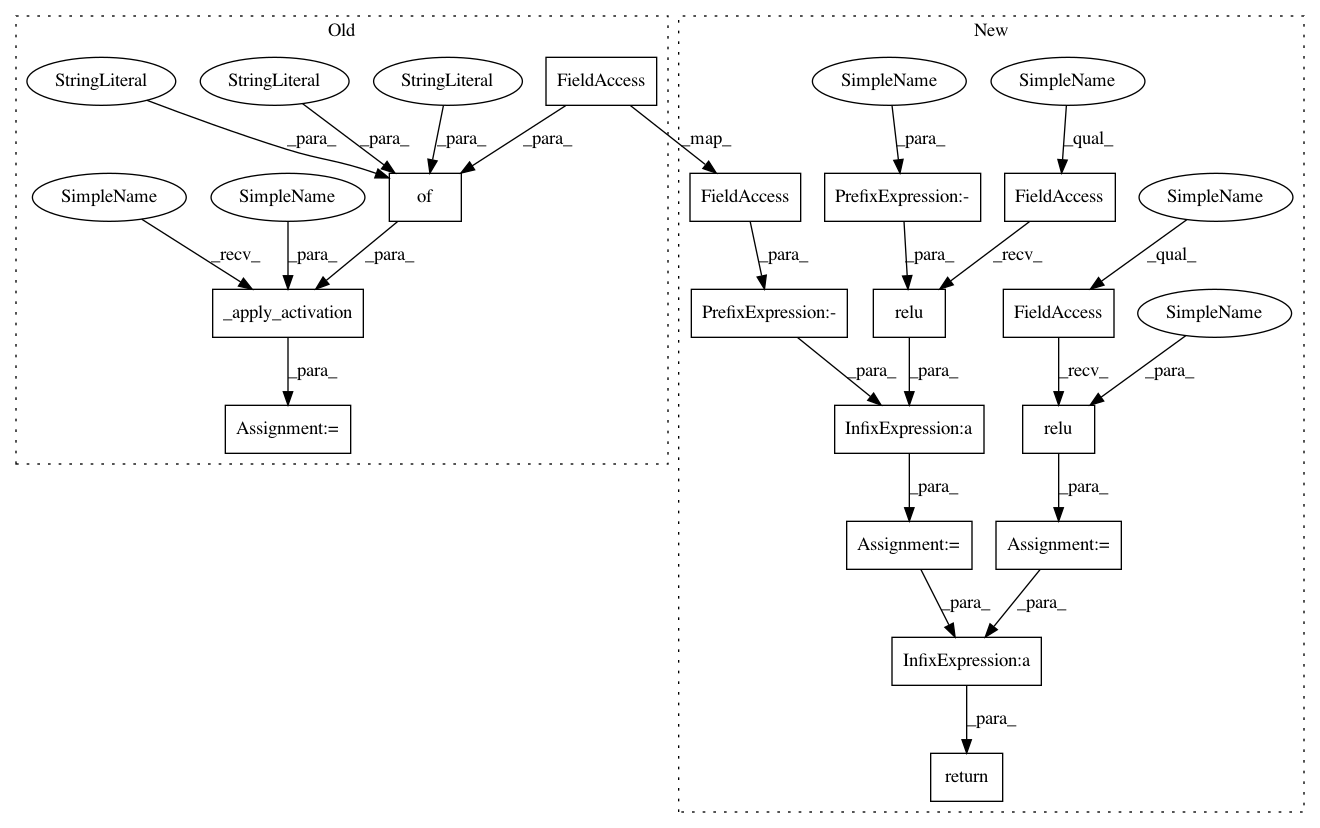

Before Change

```python
        # self.add_weights(self.alpha_var)

    def forward(self, inputs):
        outputs = self._apply_activation(inputs, **{"alpha": self.alpha_var_constrained, "name": "prelu6_activation"})
        return outputs


class PTRelu6(Layer):
```

After Change

```python
        self.alpha_var_constrained = tf.nn.sigmoid(self.alpha_var, name="constraining_alpha_var_in_0_1")

    def forward(self, inputs):
        pos = tf.nn.relu(inputs)
        pos_6 = -tf.nn.relu(inputs - 6)
        neg = -self.alpha_var_constrained * tf.nn.relu(-inputs)
        return pos + pos_6 + neg


class PTRelu6(Layer):
```
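The after-change `forward` replaces the `_apply_activation` call with an explicit sum of three ReLU terms. The sketch below, in plain Python on scalars rather than TensorFlow tensors, checks that this sum matches the piecewise PReLU6 definition: slope `alpha` below 0, identity on [0, 6], and clipped at 6 above that. The helper names `prelu6_decomposed` and `prelu6_piecewise` are hypothetical, and the local `sigmoid` only stands in for `tf.nn.sigmoid`.

```python
import math


def sigmoid(x: float) -> float:
    # Squashes an unconstrained parameter into (0, 1), like tf.nn.sigmoid.
    return 1.0 / (1.0 + math.exp(-x))


def relu(x: float) -> float:
    return max(x, 0.0)


def prelu6_decomposed(x: float, alpha_var: float) -> float:
    # Same three terms as the after-change forward(), applied to a scalar.
    alpha = sigmoid(alpha_var)   # learned slope, constrained to (0, 1)
    pos = relu(x)                # contributes x when x > 0
    pos_6 = -relu(x - 6)         # removes the part of x above 6
    neg = -alpha * relu(-x)      # contributes alpha * x when x < 0
    return pos + pos_6 + neg


def prelu6_piecewise(x: float, alpha_var: float) -> float:
    # Reference definition: alpha * x below 0, identity on [0, 6], 6 above.
    alpha = sigmoid(alpha_var)
    return alpha * x if x < 0 else min(x, 6.0)


# alpha_var = 0.0 gives alpha = sigmoid(0) = 0.5,
# so the outputs are -1.0, 3.0 and 6.0 respectively.
for value in (-2.0, 3.0, 8.0):
    print(value, prelu6_decomposed(value, 0.0))
```

Writing the activation as a sum of ReLUs keeps every term a standard op, and the sigmoid keeps the negative-side slope strictly between 0 and 1 however `alpha_var` is updated.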

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 16

Instances

Project Name: tensorlayer/tensorlayer

Commit Name: 69e3319c4bffaa78823be2267f036d3d3fdf286e

Time: 2019-03-19

Author: jingqing.zhang15@imperial.ac.uk

File Name: tensorlayer/layers/activation.py

Class Name: PRelu6

Method Name: forward

Project Name: tensorlayer/tensorlayer

Commit Name: 69e3319c4bffaa78823be2267f036d3d3fdf286e

Time: 2019-03-19

Author: jingqing.zhang15@imperial.ac.uk

File Name: tensorlayer/layers/activation.py

Class Name: PTRelu6

Method Name: forward

Project Name: tensorlayer/tensorlayer

Commit Name: 96596d3f9277ca11279c0ff4f7ae556ec5e7388f

Time: 2019-03-10

Author: jingqing.zhang15@imperial.ac.uk

File Name: tensorlayer/layers/activation.py

Class Name: PRelu

Method Name: forward
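The last instance is the unbounded `PRelu` layer from an earlier commit; its code is not reproduced in this record. As a point of comparison only, the standard PReLU can be written in the same ReLU-sum style by dropping the clip-at-6 term. This is a sketch under that assumption, not the layer's actual code, and the helper names are hypothetical.

```python
def relu(x: float) -> float:
    return max(x, 0.0)


def prelu_decomposed(x: float, alpha: float) -> float:
    # Unbounded PReLU: relu(x) minus alpha times relu(-x).
    return relu(x) - alpha * relu(-x)


def prelu_piecewise(x: float, alpha: float) -> float:
    # Reference definition: x for x >= 0, alpha * x for x < 0.
    return x if x >= 0 else alpha * x


print(prelu_decomposed(-4.0, 0.25), prelu_decomposed(5.0, 0.25))
```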