f11d4fd116c3f4d92bb65f496211b27a1d6f1bac,examples/images/inception_resnet_v2.py,,block35,#Any#Any#Any#,30

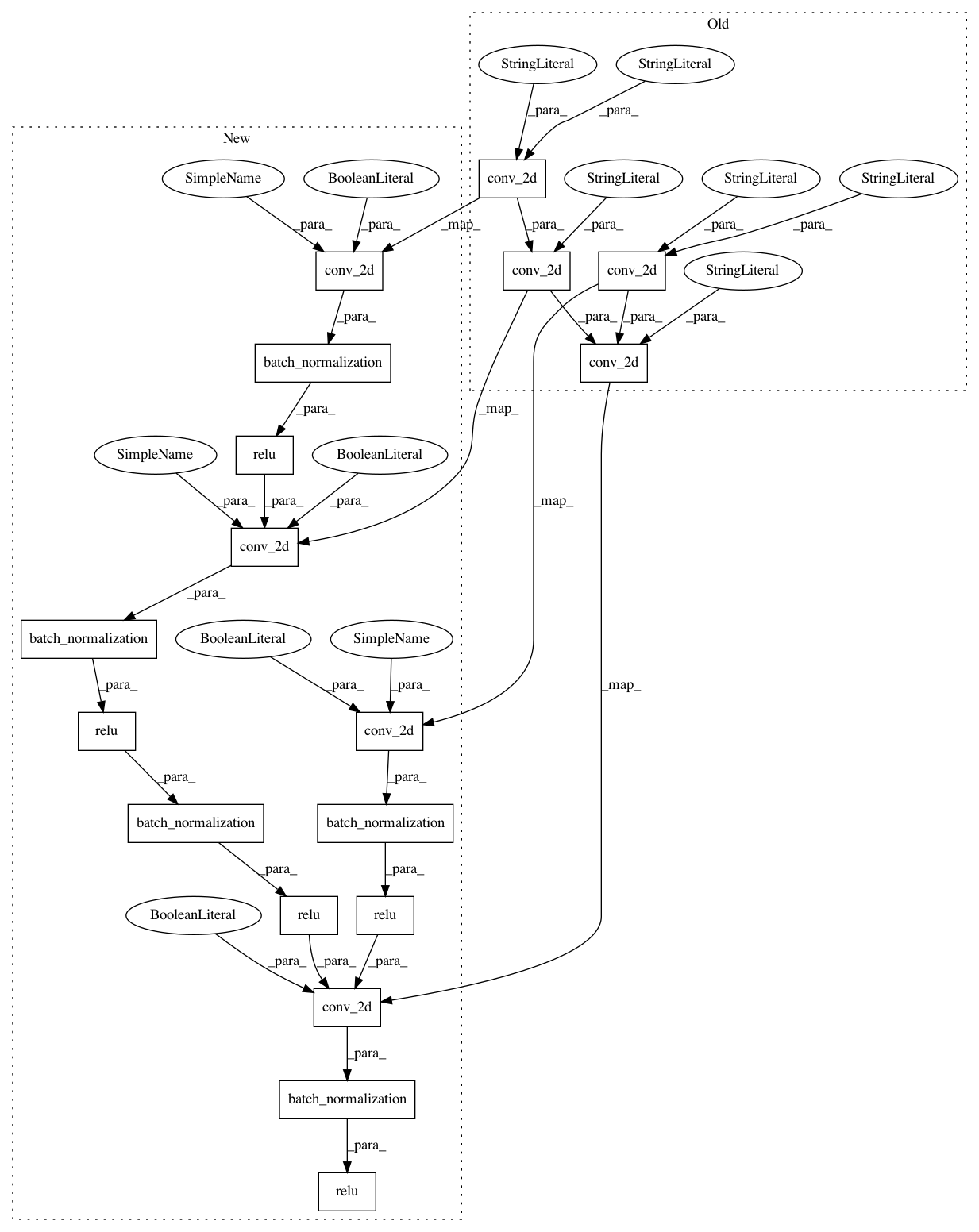

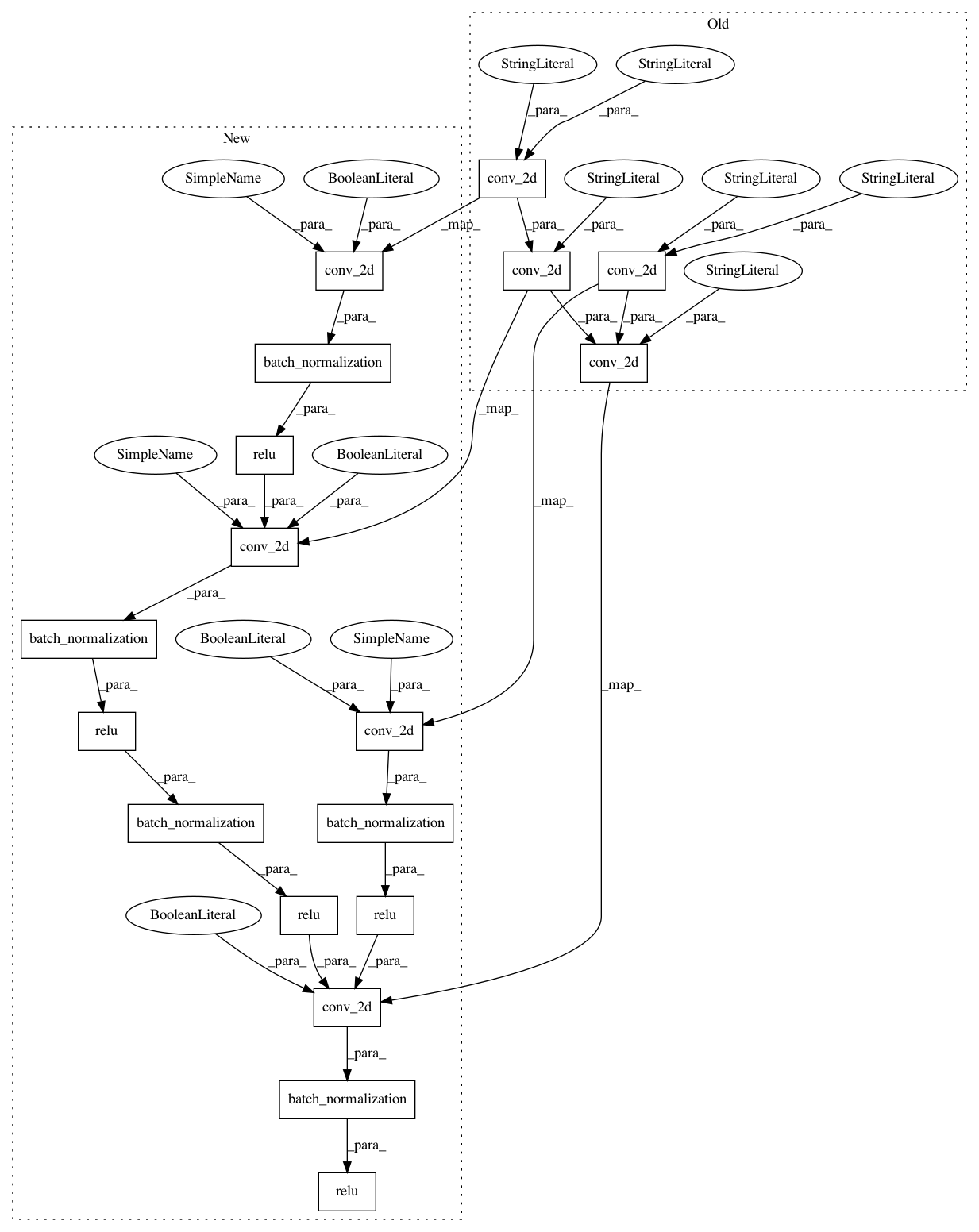

Before Change

import tflearn.datasets.oxflower17 as oxflower17

def block35(net, scale=1.0, activation="relu"):
    tower_conv = conv_2d(net, 32, 1, normalizer_fn="batch_normalization", activation="relu", name="Conv2d_1x1")
    tower_conv1_0 = conv_2d(net, 32, 1, normalizer_fn="batch_normalization", activation="relu",name="Conv2d_0a_1x1")
    tower_conv1_1 = conv_2d(tower_conv1_0, 32, 3, normalizer_fn="batch_normalization", activation="relu",name="Conv2d_0b_3x3")
    tower_conv2_0 = conv_2d(net, 32, 1, normalizer_fn="batch_normalization", activation="relu", name="Conv2d_0a_1x1")
    tower_conv2_1 = conv_2d(tower_conv2_0, 48,3, normalizer_fn="batch_normalization", activation="relu", name="Conv2d_0b_3x3")
    tower_conv2_2 = conv_2d(tower_conv2_1, 64,3, normalizer_fn="batch_normalization", activation="relu", name="Conv2d_0c_3x3")
    tower_mixed = merge([tower_conv, tower_conv1_1, tower_conv2_2], mode="concat", axis=3)
    tower_out = conv_2d(tower_mixed, net.get_shape()[3], 1, normalizer_fn="batch_normalization", activation=None, name="Conv2d_1x1")
    net += scale * tower_out
    if activation:
        if isinstance(activation, str):
            net = activations.get(activation)(net)
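
Both versions of `block35` end with the same residual update: the projected branch output `tower_out` is scaled by `scale`, added back to the input `net`, and then an activation looked up by name is applied. The following is a minimal pure-Python sketch of that arithmetic only, treating tensors as flat lists of floats. The `ACTIVATIONS` registry and the `residual_update` helper are hypothetical stand-ins, not tflearn's API, and only the string-activation branch visible in the excerpt is modelled.

```python
# Hypothetical stand-in for a name -> function activation registry
# (plays the role of tflearn's activations.get in this sketch only).
ACTIVATIONS = {
    "relu": lambda xs: [max(0.0, x) for x in xs],
    "linear": lambda xs: list(xs),
}


def residual_update(net, tower_out, scale=1.0, activation="relu"):
    """Return activation(net + scale * tower_out), element-wise.

    net and tower_out are flat lists standing in for tensors of the same
    shape; in block35 the final 1x1 conv_2d restores net's channel count
    so that the addition is well defined.
    """
    if len(net) != len(tower_out):
        raise ValueError("residual branch must match the input shape")
    # Scaled residual connection: net += scale * tower_out
    out = [n + scale * t for n, t in zip(net, tower_out)]
    # Optional activation, looked up by name as in the block above
    if activation and isinstance(activation, str):
        out = ACTIVATIONS[activation](out)
    return out


print(residual_update([1.0, -2.0, 0.5], [2.0, 1.0, -4.0], scale=0.5))
# → [2.0, 0.0, 0.0]
```

Passing `activation=None` skips the final nonlinearity and returns the raw scaled sum.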

After Change

def block35(net, scale=1.0, activation="relu"):
    tower_conv = relu(batch_normalization(conv_2d(net, 32, 1, bias=False, activation=None, name="Conv2d_1x1")))
    tower_conv1_0 = relu(batch_normalization(conv_2d(net, 32, 1, bias=False, activation=None,name="Conv2d_0a_1x1")))
    tower_conv1_1 = relu(batch_normalization(conv_2d(tower_conv1_0, 32, 3, bias=False, activation=None,name="Conv2d_0b_3x3")))
    tower_conv2_0 = relu(batch_normalization(conv_2d(net, 32, 1, bias=False, activation=None, name="Conv2d_0a_1x1")))
    tower_conv2_1 = relu(batch_normalization(conv_2d(tower_conv2_0, 48,3, bias=False, activation=None, name="Conv2d_0b_3x3")))
    tower_conv2_2 = relu(batch_normalization(conv_2d(tower_conv2_1, 64,3, bias=False, activation=None, name="Conv2d_0c_3x3")))
    tower_mixed = merge([tower_conv, tower_conv1_1, tower_conv2_2], mode="concat", axis=3)
    tower_out = relu(batch_normalization(conv_2d(tower_mixed, net.get_shape()[3], 1, bias=False, activation=None, name="Conv2d_1x1")))
    net += scale * tower_out
    if activation:
        if isinstance(activation, str):
            net = activations.get(activation)(net)
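
The After Change version disables the convolution bias (`bias=False`, `activation=None`) and applies `batch_normalization` and `relu` as separate layers. Dropping the bias loses nothing: batch normalization subtracts the per-channel batch mean, so a constant bias added before it cancels, and the normalization's learned offset (beta) takes over that role. The sketch below illustrates this cancellation for a single channel in pure Python. It is a minimal illustration under stated assumptions (batch statistics only, no moving averages, gamma=1 and beta=0), and `batch_norm` and `relu` here are hypothetical helpers, not tflearn's implementation.

```python
import math


def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one channel over the batch using batch statistics only."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in xs]


def relu(xs):
    return [max(0.0, x) for x in xs]


# Pre-activation conv outputs for one channel across a batch of 4.
conv_out = [0.3, -1.2, 2.5, 0.4]
bias = 7.0  # a constant per-channel bias, as a biased convolution would add

without_bias = relu(batch_norm(conv_out))
with_bias = relu(batch_norm([x + bias for x in conv_out]))

# Equal up to floating-point rounding: the mean subtraction cancels the bias.
print(all(abs(a - b) < 1e-9 for a, b in zip(without_bias, with_bias)))
# → True
```

Because the bias would be cancelled anyway, omitting it saves one parameter per output channel without changing what the conv, batch normalization, and ReLU stack can represent.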

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 18

Instances

Project Name: tflearn/tflearn

Commit Name: f11d4fd116c3f4d92bb65f496211b27a1d6f1bac

Time: 2016-11-21

Author: dss_1990@sina.com

File Name: examples/images/inception_resnet_v2.py

Class Name:

Method Name: block35

Project Name: tflearn/tflearn

Commit Name: f11d4fd116c3f4d92bb65f496211b27a1d6f1bac

Time: 2016-11-21

Author: dss_1990@sina.com

File Name: examples/images/inception_resnet_v2.py

Class Name:

Method Name: block17

Project Name: tflearn/tflearn

Commit Name: f11d4fd116c3f4d92bb65f496211b27a1d6f1bac

Time: 2016-11-21

Author: dss_1990@sina.com

File Name: examples/images/inception_resnet_v2.py

Class Name:

Method Name: block8