0b47129713e8cd63c49a0c53202f2b3deac941cc,softlearning/policies/latent_space_policy.py,LatentSpacePolicy,get_action,#LatentSpacePolicy#Any#Any#Any#,175

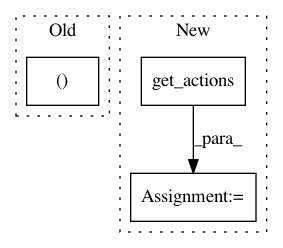

Before Change

best_action_index = np.argmax(q_values)

return action_candidates[best_action_index], {}

return self.get_actions(observation[None])[0], {}

def get_actions(self, observations):

"""Sample batch of actions based on the observations"""

After Change

assert not with_log_pis, "No log pi for deterministic action"

if with_raw_actions:

action_candidates, raw_action_candidates = self.get_actions(
    observations, with_raw_actions=with_raw_actions)

q_values = self._q_function.eval(observations, action_candidates)

best_action_index = np.argmax(q_values)
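
The fragments above suggest that `get_action` samples several candidate actions for a single observation, scores them with the Q-function, and returns the candidate with the highest Q-value. The following is a minimal standalone sketch of that selection step, not the repository's implementation. The names `select_best_action`, `sample_actions`, `q_function`, `n_candidates`, and the toy stand-ins are hypothetical; `sample_actions` plays the role of `self.get_actions` and `q_function` the role of `self._q_function.eval`. Raw-action and log-pi handling are omitted.

```python
import numpy as np


def select_best_action(observation, sample_actions, q_function, n_candidates=8):
    """Return the candidate action with the highest Q-value for one observation.

    `sample_actions` and `q_function` are hypothetical callables standing in
    for the policy's `get_actions` and the Q-function's `eval`.
    """
    # Repeat the single observation once per candidate:
    # shape (obs_dim,) -> (n_candidates, obs_dim).
    observations = np.tile(observation[None], reps=(n_candidates, 1))

    # One candidate action per repeated observation.
    action_candidates = sample_actions(observations)

    # One scalar Q-value per (observation, candidate) pair.
    q_values = q_function(observations, action_candidates)

    best_action_index = np.argmax(q_values)
    # Mirror the (action, info_dict) return shape seen in the fragments.
    return action_candidates[best_action_index], {}


# Toy stand-ins (assumptions for illustration only).
rng = np.random.default_rng(0)


def toy_sample_actions(observations):
    # Uniform random 2-D actions, one per row of `observations`.
    return rng.uniform(-1.0, 1.0, size=(observations.shape[0], 2))


def toy_q_function(observations, actions):
    # Scores actions higher the closer they are to the origin.
    return -np.sum(actions ** 2, axis=1)


action, info = select_best_action(
    np.zeros(3), toy_sample_actions, toy_q_function)
```

Passing the sampler and the Q-function in as callables keeps the sketch independent of any particular policy class, so the selection logic can be checked in isolation.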

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: rail-berkeley/softlearning

Commit Name: 0b47129713e8cd63c49a0c53202f2b3deac941cc

Time: 2018-07-09

Author: azhou42@berkeley.edu

File Name: softlearning/policies/latent_space_policy.py

Class Name: LatentSpacePolicy

Method Name: get_action

Project Name: rlworkgroup/garage

Commit Name: e4b6611cb73ef7658f028831be1aa6bd85ecbed0

Time: 2020-08-14

Author: 38871737+avnishn@users.noreply.github.com

File Name: src/garage/tf/policies/gaussian_mlp_task_embedding_policy.py

Class Name: GaussianMLPTaskEmbeddingPolicy

Method Name: get_action

Project Name: rlworkgroup/garage

Commit Name: e4b6611cb73ef7658f028831be1aa6bd85ecbed0

Time: 2020-08-14

Author: 38871737+avnishn@users.noreply.github.com

File Name: src/garage/torch/policies/deterministic_mlp_policy.py

Class Name: DeterministicMLPPolicy

Method Name: get_action