ea0e73d5531f1481848df657ccfcc8d98757ff20,texar/core/optimization.py,,get_optimizer,#Any#Any#Any#Any#,353

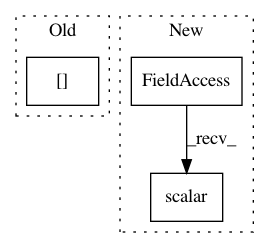

Before Change

```python
opt_argspec = utils.get_default_arg_values(optimizer_class.__init__)
learning_rate = opt_argspec.get("learning_rate", None)
grad_clip_fn = get_gradient_clip_fn(hparams["gradient_clip"])
lr_decay_fn = get_learning_rate_decay_fn(hparams["learning_rate_decay"])
if lr_decay_fn is not None:
```

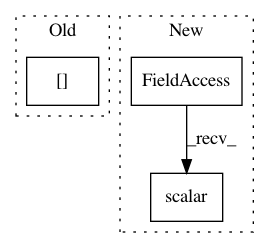

After Change

```python
    global_step=global_step)
else:
    lr = learning_rate
tf.summary.scalar("learning_rate", lr)
optimizer = optimizer_fn(learning_rate=lr)
return optimizer
```
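The "After Change" fragment appears to pick a decayed learning rate when a decay function is configured, fall back to the static rate otherwise, log the result, and then build the optimizer. The following is a minimal, framework-free sketch of that control flow, not the texar implementation. The names `resolve_learning_rate`, `exponential_decay`, `build_optimizer`, and `log_fn` are hypothetical, and `log_fn` merely stands in for a summary call such as `tf.summary.scalar`.

```python
from typing import Any, Callable, Optional


def resolve_learning_rate(
    learning_rate: float,
    global_step: int,
    lr_decay_fn: Optional[Callable[..., float]] = None,
) -> float:
    """Return the decayed rate if a decay function is given, else the static rate."""
    if lr_decay_fn is not None:
        return lr_decay_fn(learning_rate=learning_rate, global_step=global_step)
    return learning_rate


def exponential_decay(
    learning_rate: float,
    global_step: int,
    decay_steps: int = 100,
    decay_rate: float = 0.5,
) -> float:
    """Hypothetical stand-in schedule: multiply by decay_rate every decay_steps steps."""
    return learning_rate * decay_rate ** (global_step / decay_steps)


def build_optimizer(
    optimizer_fn: Callable[..., Any],
    learning_rate: float,
    global_step: int,
    lr_decay_fn: Optional[Callable[..., float]] = None,
    log_fn: Optional[Callable[[str, float], None]] = None,
) -> Any:
    """Resolve the rate, log it once for either branch, then build the optimizer."""
    lr = resolve_learning_rate(learning_rate, global_step, lr_decay_fn)
    if log_fn is not None:
        # Assumed placeholder for a summary call like tf.summary.scalar.
        log_fn("learning_rate", lr)
    return optimizer_fn(learning_rate=lr)
```

Logging after the branch rather than inside it means the recorded value reflects whichever rate is actually passed to the optimizer.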

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: asyml/texar

Commit Name: ea0e73d5531f1481848df657ccfcc8d98757ff20

Time: 2018-12-20

Author: haoranshi97@gmail.com

File Name: texar/core/optimization.py

Class Name:

Method Name: get_optimizer

Project Name: arnomoonens/yarll

Commit Name: 86ce5d52134a56806112ff8664e4034338e0e05a

Time: 2019-03-21

Author: arno.moonens@gmail.com

File Name: yarll/agents/ppo/ppo.py

Class Name: PPO

Method Name: learn

Project Name: galeone/dynamic-training-bench

Commit Name: f302eba7375d8da3477816a705c7ce628f9a55a8

Time: 2017-05-12

Author: nessuno@nerdz.eu

File Name: dytb/trainer/Trainer.py

Class Name: Trainer

Method Name: train