1f720ee453871b2ab764f608926281716ef7bf81,tools/train_pl.py,,,#,340

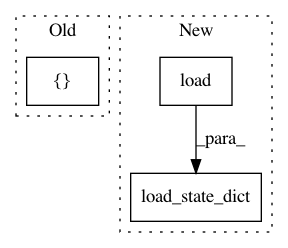

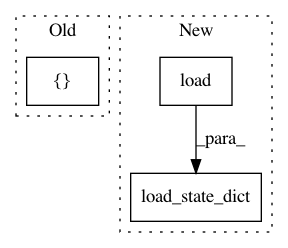

Before Change

lit = LitModel(opt)

# Warning: grad_clip_mode is ignored.

trainer = pl.Trainer(

callbacks=[OnEpochStartCallback()],

default_root_dir=opt.checkpoint_path,

resume_from_checkpoint=resume_from,

distributed_backend="ddp",

After Change

)

if os.getenv("EVALUATE", "0") == "1":

lit.load_state_dict(torch.load(resume_from)["state_dict"])

# Because DDP can't work with test

trainer = pl.Trainer(

default_root_dir=opt.checkpoint_path,

resume_from_checkpoint=resume_from,
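The change above branches on the `EVALUATE` environment variable and builds a second trainer without the DDP backend. A minimal sketch of that branching follows. It uses plain dictionaries rather than `pl.Trainer`, so it runs without PyTorch Lightning installed. The helper names `trainer_kwargs` and `evaluate_requested` and the `"checkpoints/"` path are hypothetical and do not appear in the commit. The keyword names are the ones shown in the snippet; later Lightning releases may not accept them. The callbacks from the training trainer are omitted because the snippet is truncated before showing whether the evaluation trainer keeps them.

```python
import os


def evaluate_requested(environ=None):
    """Return True when EVALUATE is set to "1", mirroring the check in the snippet."""
    environ = os.environ if environ is None else environ
    return environ.get("EVALUATE", "0") == "1"


def trainer_kwargs(checkpoint_path, resume_from, evaluate):
    """Build trainer keyword arguments (hypothetical helper, not from the commit)."""
    kwargs = {
        "default_root_dir": checkpoint_path,
        "resume_from_checkpoint": resume_from,
    }
    if not evaluate:
        # Training keeps the distributed backend used before the change.
        kwargs["distributed_backend"] = "ddp"
    # Evaluation leaves the backend unset, since the snippet notes
    # that DDP does not work with test.
    return kwargs


if __name__ == "__main__":
    # "checkpoints/" is a placeholder path for illustration only.
    print(trainer_kwargs("checkpoints/", None, evaluate_requested()))
```

Keeping the argument construction in one function means the training and evaluation paths share the checkpoint settings and differ only in the backend.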

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: ruotianluo/ImageCaptioning.pytorch

Commit Name: 1f720ee453871b2ab764f608926281716ef7bf81

Time: 2020-07-05

Author: rluo@ttic.edu

File Name: tools/train_pl.py

Class Name:

Method Name:

Project Name: jindongwang/transferlearning

Commit Name: 2733bef356c53286d475a67476d88d4840923830

Time: 2020-09-30

Author: jindongwang@outlook.com

File Name: code/deep/finetune_AlexNet_ResNet/finetune_office31.py

Class Name:

Method Name: finetune