f2328f664ba2ad19868d6e0eaff08721d96c506b,tensorforce/models/pg_model.py,PGModel,tf_reward_estimation,#PGModel#Any#Any#Any#Any#Any#,161

Before Change

)
# Normalize advantage.
mean, variance = tf.nn.moments(x=advantage, axes=[0], keep_dims=True)
advantage = (advantage - mean) / tf.sqrt(x=tf.maximum(x=variance, y=util.epsilon))
return advantage
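The normalization step in the "Before Change" snippet standardizes a batch of advantages: it subtracts the batch mean and divides by the standard deviation, clamping the variance from below so that a constant batch does not cause a division by zero. The plain-Python sketch below mirrors that arithmetic so it can be checked without TensorFlow. It assumes that `tf.nn.moments` over axis 0 of a 1-D batch corresponds to the population mean and variance. It also uses a hypothetical `epsilon=1e-6` in place of Tensorforce's `util.epsilon`, whose actual value is not shown here.

```python
import math
from statistics import fmean, pvariance


def normalize_advantage(advantage: list[float], epsilon: float = 1e-6) -> list[float]:
    """Standardize a 1-D batch of advantages to zero mean and unit variance.

    Mirrors the TF snippet: population mean and variance over the batch,
    with the variance clamped below by epsilon before the square root.
    epsilon=1e-6 is an assumed value, not necessarily Tensorforce's util.epsilon.
    """
    mean = fmean(advantage)
    # Population variance (divide by N), as tf.nn.moments computes it.
    variance = pvariance(advantage, mu=mean)
    std = math.sqrt(max(variance, epsilon))
    return [(a - mean) / std for a in advantage]
```

For example, `normalize_advantage([1.0, 2.0, 3.0, 4.0])` returns values with mean approximately 0 and variance approximately 1. A constant batch such as `[5.0, 5.0, 5.0]` maps to all zeros instead of raising a division error.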

After Change

    update=update
)
state_value = self.baseline.predict(
    states=tf.stop_gradient(input=embedding),
    internals=internals,
    update=update
)
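In the "After Change" snippet, the baseline predicts a state value from `tf.stop_gradient(input=embedding)`, so gradients computed through `state_value` do not flow back into `embedding`. The fragment stops at the prediction. The sketch below assumes the prediction is then used in the conventional way, with V(s) subtracted from the per-step reward estimate to form an advantage; the source does not show this step. The function name `baseline_advantage` is hypothetical. Gradient stopping has no counterpart in plain Python, so only the arithmetic is shown.

```python
from typing import Sequence


def baseline_advantage(rewards: Sequence[float], state_values: Sequence[float]) -> list[float]:
    """Subtract a baseline estimate V(s) from per-step reward estimates.

    Assumption: advantage = R - V(s), the usual baseline construction.
    The original fragment only shows where V(s) is predicted.
    """
    if len(rewards) != len(state_values):
        raise ValueError("rewards and state_values must have the same length")
    # Element-wise difference; in the TF model, V(s) would come from the
    # baseline applied to a gradient-stopped embedding.
    return [r - v for r, v in zip(rewards, state_values)]
```

For example, `baseline_advantage([1.0, 2.0], [0.5, 2.5])` returns `[0.5, -0.5]`.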

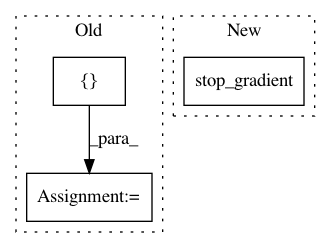

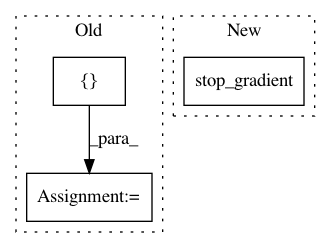

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 3

Instances

Project Name: reinforceio/tensorforce

Commit Name: f2328f664ba2ad19868d6e0eaff08721d96c506b

Time: 2018-01-27

Author: aok25@cl.cam.ac.uk

File Name: tensorforce/models/pg_model.py

Class Name: PGModel

Method Name: tf_reward_estimation

Project Name: arnomoonens/yarll

Commit Name: db33f675df19cd20001554b3a92f8c9d7f013c39

Time: 2018-10-25

Author: arno.moonens@gmail.com

File Name: agents/sac.py

Class Name: SAC

Method Name: __init__

Project Name: tensorflow/ranking

Commit Name: 6bf3f51cd0a312da842157665663c2dad9983248

Time: 2021-01-29

Author: xuanhui@google.com

File Name: tensorflow_ranking/python/losses_impl.py

Class Name: ClickEMLoss

Method Name: _compute_latent_prob

Project Name: MorvanZhou/tutorials

Commit Name: f1632a7d8a1c95f8a1b37c9b08d948f1adc7af92

Time: 2017-04-11

Author: morvanzhou@gmail.com

File Name: Reinforcement_learning_TUT/experiments/Solve_BipedalWalker/A3C.py

Class Name: ACNet

Method Name: _build_net