60dfcf812eca79017dced46e1189245c050a3fd6,dl/callbacks.py,OptimizerCallback,on_batch_end,#OptimizerCallback#Any#,393

Before Change

self.accumulation_counter = 0

else:

state.model.zero_grad()

if len(state._optimizer) > 0:

assert len(state._optimizer) == 1, \

"fp16 mode works only with one optimizer for now"

After Change

optimizer = state.get_key(

key="optimizer",

inner_key=self.optimizer_key)

loss = state.get_key(

key="loss",

inner_key=self.loss_key)

loss.backward()

if (self.accumulation_counter + 1) % self.accumulation_steps == 0:

self.grad_step(

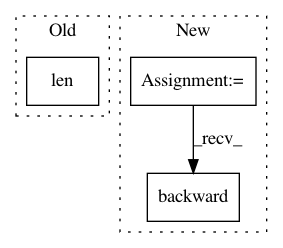

optimizer=optimizer,

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances Project Name: Scitator/catalyst

Commit Name: 60dfcf812eca79017dced46e1189245c050a3fd6

Time: 2018-12-10

Author: scitator@gmail.com

File Name: dl/callbacks.py

Class Name: OptimizerCallback

Method Name: on_batch_end

Project Name: dpressel/mead-baseline

Commit Name: 3d9e51d5034e89bcec3a04eff3e646c70b45edb2

Time: 2017-03-16

Author: dpressel@gmail.com

File Name: classify/python/tf/train.py

Class Name: Trainer

Method Name: train

Project Name: pytorch/examples

Commit Name: 9faf2c65f74e3ee9bdaeedf9f3e5856727f0afe7

Time: 2017-12-04

Author: design@kaixhin.com

File Name: reinforcement_learning/actor_critic.py

Class Name:

Method Name: finish_episode
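In the Catalyst instance, the Before Change code inspects `state._optimizer` directly and asserts a single optimizer for fp16. The After Change code fetches the optimizer and loss by name through `state.get_key(key=..., inner_key=...)`, calls `loss.backward()` on every batch, and only steps once every `accumulation_steps` batches (gradient accumulation). The sketch below illustrates that pattern in plain Python so it runs without PyTorch. Everything in it is hypothetical: `Param`, `SGD`, `SquaredError`, and this `State.get_key` are illustrative stand-ins, not Catalyst's API. The fragment's truncated `self.grad_step(...)` call is replaced here by a direct `optimizer.step()`.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


class Param:
    """Hypothetical scalar parameter with a .grad slot (stand-in for a tensor)."""

    def __init__(self, value: float) -> None:
        self.value = value
        self.grad = 0.0


class SGD:
    """Hypothetical plain SGD optimizer over Param objects."""

    def __init__(self, params: List[Param], lr: float) -> None:
        self.params = list(params)
        self.lr = lr

    def step(self) -> None:
        # Apply whatever gradient has been accumulated so far.
        for p in self.params:
            p.value -= self.lr * p.grad

    def zero_grad(self) -> None:
        for p in self.params:
            p.grad = 0.0


class SquaredError:
    """Hypothetical loss (w * x - t) ** 2 for a one-weight linear model."""

    def __init__(self, w: Param, x: float, t: float) -> None:
        self.w, self.x, self.t = w, x, t
        self.value = (w.value * x - t) ** 2

    def backward(self) -> None:
        # Like autograd, add d(loss)/dw into w.grad instead of overwriting it.
        self.w.grad += 2.0 * (self.w.value * self.x - self.t) * self.x


@dataclass
class State:
    """Hypothetical runner state holding optimizers and losses by name."""

    optimizer: Dict[str, Any] = field(default_factory=dict)
    loss: Dict[str, Any] = field(default_factory=dict)

    def get_key(self, key: str, inner_key: Optional[str] = None) -> Any:
        # Return the whole attribute, or one named entry of it.
        value = getattr(self, key)
        return value if inner_key is None else value[inner_key]


class OptimizerCallback:
    def __init__(self, optimizer_key: Optional[str] = None,
                 loss_key: Optional[str] = None,
                 accumulation_steps: int = 1) -> None:
        self.optimizer_key = optimizer_key
        self.loss_key = loss_key
        self.accumulation_steps = accumulation_steps
        self.accumulation_counter = 0

    def on_batch_end(self, state: State) -> None:
        optimizer = state.get_key(key="optimizer", inner_key=self.optimizer_key)
        loss = state.get_key(key="loss", inner_key=self.loss_key)
        loss.backward()  # gradients keep adding up across batches
        if (self.accumulation_counter + 1) % self.accumulation_steps == 0:
            # Enough batches seen: step once, then clear the accumulated grads.
            optimizer.step()
            optimizer.zero_grad()
            self.accumulation_counter = 0
        else:
            self.accumulation_counter += 1


if __name__ == "__main__":
    w = Param(0.0)
    state = State(optimizer={"main": SGD([w], lr=0.1)})
    callback = OptimizerCallback(optimizer_key="main", loss_key="main",
                                 accumulation_steps=2)
    for x, t in [(1.0, 1.0), (1.0, 1.0)]:
        state.loss["main"] = SquaredError(w, x, t)
        callback.on_batch_end(state)
    print(w.value)  # prints 0.4: one step with the summed gradient -4.0
```

With `accumulation_steps=2`, the two batches' gradients (-2.0 each) are summed before a single update, so the effective batch is twice as large. Many implementations also divide the loss by `accumulation_steps` to keep the gradient scale comparable to a single large batch; this sketch omits that.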