ad44e9a2219e4508a68ba3e831d36b9ca6a324ee,examples/grid_world.py,,experiment,#,13

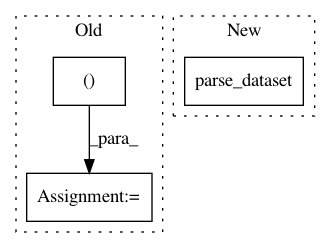

Before Change

np.random.seed()

# MDP

mdp = GridWorld(height=3, width=3, goal=(2, 2))

# Policy

epsilon = Parameter(value=1)

discrete_actions = mdp.action_space.values

pi = EpsGreedy(epsilon=epsilon, discrete_actions=discrete_actions)

# Approximator

approximator_params = dict(shape=(3, 3, mdp.action_space.n))
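The snippet above configures an epsilon-greedy policy with `epsilon` set to 1 over a table of shape `(3, 3, n_actions)`. As a rough illustration of what that setting implies, here is a minimal NumPy sketch of epsilon-greedy selection on such a table. It is not Mushroom's `EpsGreedy` implementation; the function name `eps_greedy_action`, the choice of 4 actions, and the `(row, col)` state encoding are assumptions made only for this example.

```python
import numpy as np


def eps_greedy_action(q_table, state, epsilon, rng):
    """Pick an action for `state` from a tabular Q function.

    `state` is a (row, col) tuple indexing the first two axes of `q_table`
    (an assumed encoding, chosen to match the (3, 3, n_actions) shape).
    """
    n_actions = q_table.shape[-1]
    # Explore with probability epsilon: uniform random action.
    if rng.random() < epsilon:
        return int(rng.integers(n_actions))
    # Otherwise exploit: action with the highest Q value in this state.
    return int(np.argmax(q_table[state]))


rng = np.random.default_rng(0)
q_table = np.zeros((3, 3, 4))  # assumption: 4 actions on a 3x3 grid
q_table[0, 0, 2] = 1.0         # make action 2 the greedy choice in (0, 0)

# With epsilon = 0 the choice is purely greedy.
greedy = eps_greedy_action(q_table, (0, 0), epsilon=0.0, rng=rng)

# With epsilon = 1, as in the snippet above, every choice is random.
random_actions = [
    eps_greedy_action(q_table, (0, 0), epsilon=1.0, rng=rng)
    for _ in range(20)
]
```

With `epsilon` fixed at 1 the learned values never influence action selection, so the agent explores uniformly for the whole run.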

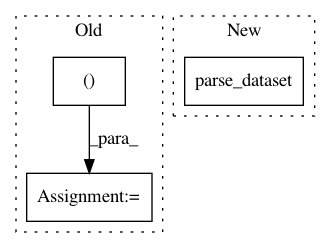

After Change

    core.learn(n_iterations=1, how_many=500000, n_fit_steps=1,
               iterate_over="samples")

    _, _, reward, _, _, _ = parse_dataset(core.get_dataset(),
                                          mdp.observation_space.dim,
                                          mdp.action_space.dim)

    return reward

if __name__ == "__main__":

    n_experiment = 2

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: AIRLab-POLIMI/mushroom

Commit Name: ad44e9a2219e4508a68ba3e831d36b9ca6a324ee

Time: 2017-06-02

Author: carlo.deramo@gmail.com

File Name: examples/grid_world.py

Class Name:

Method Name: experiment

Project Name: AIRLab-POLIMI/mushroom

Commit Name: b2aad723220e31bc8e950c112b557732b608b97a

Time: 2017-06-04

Author: carlo.deramo@gmail.com

File Name: PyPi/algorithms/td.py

Class Name: DoubleQLearning

Method Name: fit

Project Name: AIRLab-POLIMI/mushroom

Commit Name: b2aad723220e31bc8e950c112b557732b608b97a

Time: 2017-06-04

Author: carlo.deramo@gmail.com

File Name: PyPi/algorithms/td.py

Class Name: TD

Method Name: fit