563207ae6bd9a8565243b04dcd92af9c33bac524,deepchem/models/keras_model.py,KerasModel,fit_generator,#KerasModel#Any#Any#Any#Any#Any#Any#Any#,246

Before Change

vars = variables
grads = tape.gradient(batch_loss, vars)
self._tf_optimizer.apply_gradients(zip(grads, vars))
self._global_step.assign_add(1)
current_step = self._global_step.numpy()
avg_loss += batch_loss

After Change

if loss is None:
    loss = self._loss_fn
var_key = None
if variables is not None:
    var_key = tuple(v.experimental_ref() for v in variables)

    # The optimizer creates internal variables the first time apply_gradients()
    # is called for a new set of variables. If that happens inside a function
    # annotated with tf.function it throws an exception, so call it once here.
    zero_grads = [tf.zeros(v.shape) for v in variables]
    self._tf_optimizer.apply_gradients(zip(zero_grads, variables))
if var_key not in self._gradient_fn_for_vars:
    self._gradient_fn_for_vars[var_key] = self._create_gradient_fn(variables)
apply_gradient_for_batch = self._gradient_fn_for_vars[var_key]
time1 = time.time()
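
The change above combines two ideas: a one-time warm-up call to `apply_gradients()` with zero gradients, and a cache of gradient functions keyed by the set of variables. The following is a minimal pure-Python sketch of that pattern, not the DeepChem implementation. `ToyOptimizer`, `ToyModel`, and `get_gradient_fn` are hypothetical stand-ins, variables are modelled as one-element lists, and `id(v)` stands in for `v.experimental_ref()` as a hashable identity.

```python
class ToyOptimizer:
    """Hypothetical optimizer that lazily creates per-variable state."""

    def __init__(self, learning_rate=0.1):
        self.learning_rate = learning_rate
        self.slots = {}  # id(variable) -> internal state, created on first use

    def apply_gradients(self, grads_and_vars):
        for grad, var in grads_and_vars:
            self.slots.setdefault(id(var), 0.0)  # lazy state creation
            var[0] -= self.learning_rate * grad  # plain SGD step


class ToyModel:
    """Hypothetical model that caches one gradient function per variable set."""

    def __init__(self):
        self.optimizer = ToyOptimizer()
        self.trainable_variables = [[0.0]]
        self.gradient_fn_for_vars = {}
        self.builds = 0  # counts how many gradient functions were created

    def _create_gradient_fn(self, variables):
        self.builds += 1
        target = self.trainable_variables if variables is None else variables

        def apply_gradient_for_batch(grads):
            self.optimizer.apply_gradients(zip(grads, target))

        return apply_gradient_for_batch

    def get_gradient_fn(self, variables=None):
        var_key = None  # None is the cache key for "all trainable variables"
        if variables is not None:
            var_key = tuple(id(v) for v in variables)
            # Warm-up: zero gradients create the optimizer state up front
            # without changing any variable values.
            zero_grads = [0.0 for _ in variables]
            self.optimizer.apply_gradients(zip(zero_grads, variables))
        if var_key not in self.gradient_fn_for_vars:
            self.gradient_fn_for_vars[var_key] = self._create_gradient_fn(variables)
        return self.gradient_fn_for_vars[var_key]
```

Requesting the function twice for the same variables returns the cached object, so in this sketch the (potentially expensive) creation step runs once per distinct variable set.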

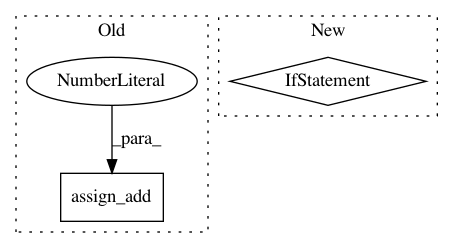

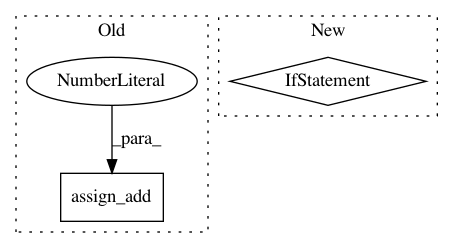

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 2

Instances

Project Name: deepchem/deepchem

Commit Name: 563207ae6bd9a8565243b04dcd92af9c33bac524

Time: 2020-02-12

Author: peastman@stanford.edu

File Name: deepchem/models/keras_model.py

Class Name: KerasModel

Method Name: fit_generator

Project Name: reinforceio/tensorforce

Commit Name: 04693aef014b8249e40a53e02e9ee293c0116913

Time: 2017-10-06

Author: aok25@cl.cam.ac.uk

File Name: tensorforce/models/model.py

Class Name: Model

Method Name: __init__

Project Name: tensorflow/kfac

Commit Name: a0ce9c776719da042d58022a31db5e99755588ad

Time: 2019-02-01

Author: jamesmartens@google.com

File Name: kfac/python/ops/kfac_utils/periodic_inv_cov_update_kfac_opt.py

Class Name: PeriodicInvCovUpdateKfacOpt

Method Name: kfac_update_ops