cea292769af4ac688649573a11b20f4d69024e3d,tests/unit_test/processor_units/test_processor_units.py,,test_bert_tokenizer_unit,#,145

Before Change

]

with tempfile.NamedTemporaryFile(delete=False) as vocab_writer:

    vocab_writer.write("".join(

        [x + "\n" for x in vocab_tokens]).encode("utf-8"))

    vocab_file = vocab_writer.name

After Change

clean_unit = units.BertClean()

cleaned_text = clean_unit.transform(raw_text)

chinese_tokenize_unit = units.ChineseTokenize()

chinese_tokenized_text = chinese_tokenize_unit.transform(cleaned_text)

basic_tokenize_unit = units.BasicTokenize()

basic_tokens = basic_tokenize_unit.transform(chinese_tokenized_text)

wordpiece_unit = units.WordPieceTokenize(vocab_dict)

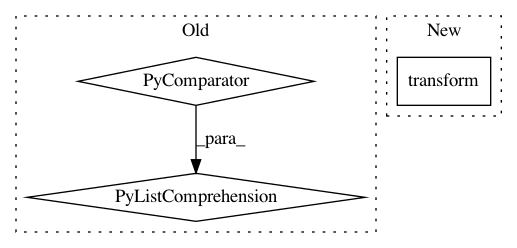

wordpiece_tokens = wordpiece_unit.transform(basic_tokens)

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: NTMC-Community/MatchZoo

Commit Name: cea292769af4ac688649573a11b20f4d69024e3d

Time: 2019-05-15

Author: 469413628@qq.com

File Name: tests/unit_test/processor_units/test_processor_units.py

Class Name:

Method Name: test_bert_tokenizer_unit

Project Name: apache/incubator-tvm

Commit Name: d164aac058eff0101a043690ca24f374b3900e7e

Time: 2020-11-05

Author: tristan.konolige@gmail.com

File Name: python/tvm/script/parser.py

Class Name: TVMScriptParser

Method Name: parse_arg_list

Project Name: GPflow/GPflow

Commit Name: ae11db5d25194cae8ef8998adbfbd96443a10fd6

Time: 2017-07-04

Author: alexggmatthews@googlemail.com

File Name: testing/test_transforms.py

Class Name: TransformTests

Method Name: setUp