78a7f7de24c34235d0784a5781f46de34d2336eb,python/eight_mile/tf/layers.py,MultiHeadedAttention,__init__,#MultiHeadedAttention#Any#Any#Any#Any#,1026

Before Change

:param dropout (``float``): The amount of dropout to use

:param attn_fn: A function to apply attention, defaults to SDP

super(MultiHeadedAttention, self).__init__()

assert d_model % h == 0

self.d_k = d_model // h

self.h = h

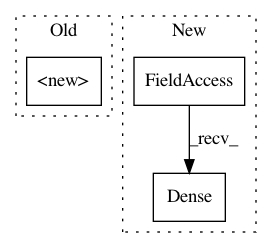

self.w_Q = TimeDistributedProjection(d_model)

After Change

self.d_k = d_model // num_heads

self.h = num_heads

self.w_Q = tf.keras.layers.Dense(units=d_model)

self.w_K = tf.keras.layers.Dense(units=d_model)

self.w_V = tf.keras.layers.Dense(units=d_model)

self.w_O = tf.keras.layers.Dense(units=d_model)

self.attn_fn = self._scaled_dot_product_attention if scale else self._dot_product_attention

self.attn = None

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3
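The after-change `__init__` replaces the custom `TimeDistributedProjection` with four `tf.keras.layers.Dense(units=d_model)` projections and uses a `scale` flag to choose between scaled and unscaled dot-product attention, i.e. softmax(QKᵀ/√d_k)V versus softmax(QKᵀ)V. This is a minimal NumPy sketch of that structure, not the mead-baseline implementation. `MultiHeadedAttentionSketch`, the `seed` parameter, and the random weight initialisation are hypothetical. Each `Dense` layer is modelled as a plain weight/bias pair. The sketch also assumes that `TimeDistributedProjection` applied the same linear map at every time step.

```python
import numpy as np


class MultiHeadedAttentionSketch:
    """Hypothetical NumPy stand-in for the after-change MultiHeadedAttention.

    Assumption: each Dense(units=d_model) layer is modelled as a (weight, bias)
    pair applied to the last axis of a [batch, time, d_model] tensor.
    """

    def __init__(self, num_heads, d_model, scale=True, seed=0):
        # Same constraint as the before-change assert: heads must split d_model evenly.
        assert d_model % num_heads == 0
        self.d_k = d_model // num_heads
        self.h = num_heads
        rng = np.random.default_rng(seed)
        # One (weight, bias) pair per projection, standing in for w_Q, w_K, w_V, w_O.
        self.w_Q, self.w_K, self.w_V, self.w_O = [
            (rng.standard_normal((d_model, d_model)) / np.sqrt(d_model), np.zeros(d_model))
            for _ in range(4)
        ]
        # Mirrors: self.attn_fn = scaled if scale else unscaled dot-product attention.
        self.attn_fn = self._scaled_dot_product_attention if scale else self._dot_product_attention

    @staticmethod
    def _dense(x, params):
        w, b = params
        # `@` contracts the last axis of x, so a [B, T, D] input is projected
        # at every time step with the same weights (a time-distributed projection).
        return x @ w + b

    def _split_heads(self, x):
        b, t, _ = x.shape
        # [B, T, d_model] -> [B, h, T, d_k]
        return x.reshape(b, t, self.h, self.d_k).transpose(0, 2, 1, 3)

    @staticmethod
    def _softmax(x):
        # Subtract the row max for numerical stability before exponentiating.
        e = np.exp(x - x.max(axis=-1, keepdims=True))
        return e / e.sum(axis=-1, keepdims=True)

    def _dot_product_attention(self, q, k, v):
        # softmax(Q K^T) V, computed per head.
        return self._softmax(q @ k.transpose(0, 1, 3, 2)) @ v

    def _scaled_dot_product_attention(self, q, k, v):
        # softmax(Q K^T / sqrt(d_k)) V, computed per head.
        return self._softmax(q @ k.transpose(0, 1, 3, 2) / np.sqrt(self.d_k)) @ v

    def __call__(self, query, key, value):
        q = self._split_heads(self._dense(query, self.w_Q))
        k = self._split_heads(self._dense(key, self.w_K))
        v = self._split_heads(self._dense(value, self.w_V))
        out = self.attn_fn(q, k, v)  # [B, h, T_q, d_k]
        b, _, t, _ = out.shape
        # Merge heads back: [B, h, T_q, d_k] -> [B, T_q, d_model]
        out = out.transpose(0, 2, 1, 3).reshape(b, t, self.h * self.d_k)
        return self._dense(out, self.w_O)


mha = MultiHeadedAttentionSketch(num_heads=4, d_model=16, scale=True)
x = np.random.default_rng(1).standard_normal((2, 5, 16))
y = mha(x, x, x)
print(y.shape)  # (2, 5, 16)
```

The swap is plausible as a behaviour-preserving change because Keras `Dense`, given an input of rank greater than 2, computes the dot product along the last axis of the input. It therefore already applies the same projection at each time step, which is what the `@` in `_dense` shows.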

Instances Project Name: dpressel/mead-baseline

Commit Name: 78a7f7de24c34235d0784a5781f46de34d2336eb

Time: 2019-10-29

Author: dpressel@gmail.com

File Name: python/eight_mile/tf/layers.py

Class Name: MultiHeadedAttention

Method Name: __init__

Project Name: google-research/google-research

Commit Name: 92d5725053bbafbe3b4932fc19a891466bd95447

Time: 2020-12-20

Author: rinap@google.com

File Name: graph_compression/compression_lib/examples/mnist_eager_mode/mnist_compression.py

Class Name: CompressedModel

Method Name: __init__

Project Name: dpressel/mead-baseline

Commit Name: 78a7f7de24c34235d0784a5781f46de34d2336eb

Time: 2019-10-29

Author: dpressel@gmail.com

File Name: python/eight_mile/tf/layers.py

Class Name: FFN

Method Name: __init__