5028ed1b6bedd526dee27ea731284f43e87303f0,fairseq/trainer.py,Trainer,train_step,#Trainer#Any#Any#,271

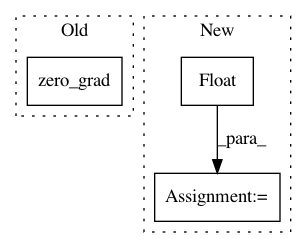

Before Change

```python
metrics.log_scalar("oom", ooms, len(samples), priority=600, round=3)
if ooms == self.args.distributed_world_size * len(samples):
    logger.warning("OOM in all workers, skipping update")
    self.zero_grad()
    return None

try:
    # normalize grads by sample size
```
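
The control flow of the Before Change fragment can be illustrated with a small, framework-free sketch. It assumes that `ooms` is the number of out-of-memory failures summed over all workers and samples, so the update is skipped only when every one of the `world_size * num_samples` passes failed; otherwise the summed gradients are divided by the sample size. The function `train_step_sketch` and the list-of-floats gradient representation are hypothetical stand-ins, not fairseq's API.

```python
from typing import List, Optional


def train_step_sketch(
    ooms: int,
    world_size: int,
    num_samples: int,
    grads: List[float],
    sample_size: float,
) -> Optional[List[float]]:
    """Hypothetical stand-in for the OOM check and gradient normalization.

    Returns None when every worker ran out of memory on every sample
    (mirroring the "skipping update" branch); otherwise returns the
    gradients divided by the sample size.
    """
    # Skip the update only if all world_size * num_samples passes hit OOM.
    if ooms == world_size * num_samples:
        return None
    # Normalize the summed gradients by the sample size.
    return [g / sample_size for g in grads]


# Usage: 2 workers x 3 samples each = 6 passes in total.
print(train_step_sketch(6, 2, 3, [4.0, 8.0], 4.0))  # None
print(train_step_sketch(1, 2, 3, [4.0, 8.0], 4.0))  # [1.0, 2.0]
```

A single OOM is not enough to skip the step in this sketch; only a total failure across all workers is.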

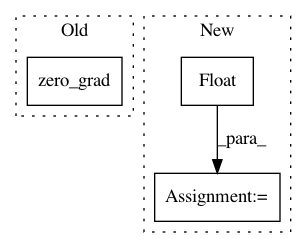

After Change

```python
if torch.is_tensor(sample_size):
    sample_size = sample_size.float()
else:
    sample_size = float(sample_size)

# gather logging outputs from all replicas
if self._sync_stats():
    logging_outputs, (sample_size, ooms) = self._aggregate_logging_outputs(
```
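
The After Change fragment coerces `sample_size` to a float (`.float()` on a torch tensor, `float()` otherwise) and then, when stats are synchronized, gathers the logging outputs and the `(sample_size, ooms)` pair from all replicas. The sketch below illustrates only the aggregate-then-coerce idea in plain Python. It assumes each replica's stats arrive as a dict and sums them locally instead of using a distributed all-reduce; `aggregate_stats` and its dict keys are hypothetical and are not the signature of fairseq's `_aggregate_logging_outputs`. The torch tensor branch is omitted to keep the example dependency-free.

```python
from typing import Dict, List, Tuple, Union

Number = Union[int, float]


def aggregate_stats(
    replica_outputs: List[Dict[str, Number]],
) -> Tuple[List[Dict[str, Number]], Tuple[float, int]]:
    """Hypothetical aggregation of per-replica stats.

    Sums "sample_size" and "ooms" across replicas and returns the total
    sample size as a float, mirroring the float() coercion in the fragment.
    """
    sample_size = sum(out["sample_size"] for out in replica_outputs)
    ooms = sum(int(out["ooms"]) for out in replica_outputs)
    # Coerce to float so the result has one numeric type regardless of inputs.
    return replica_outputs, (float(sample_size), ooms)


outputs = [{"sample_size": 3, "ooms": 0}, {"sample_size": 5, "ooms": 1}]
logging_outputs, (sample_size, ooms) = aggregate_stats(outputs)
print(sample_size, ooms)  # 8.0 1
```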

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: pytorch/fairseq

Commit Name: 5028ed1b6bedd526dee27ea731284f43e87303f0

Time: 2020-03-11

Author: myleott@fb.com

File Name: fairseq/trainer.py

Class Name: Trainer

Method Name: train_step

Project Name: facebookresearch/Horizon

Commit Name: 4d68a1e4435dfeb5884093aa91a33e1b34a909cc

Time: 2019-02-13

Author: kittipat@fb.com

File Name: ml/rl/training/_dqn_trainer.py

Class Name: _DQNTrainer

Method Name: train

Project Name: facebookresearch/Horizon

Commit Name: 69061e67d62a067c2a8a5c6a440f7b9605c111d6

Time: 2020-08-28

Author: badri@fb.com

File Name: reagent/training/reinforce.py

Class Name: Reinforce

Method Name: train