c19ac6b5692e2e8b5dec2f60ff306ecc5976e112,snips_nlu/tokenization.py,,tokenize,#Any#,16

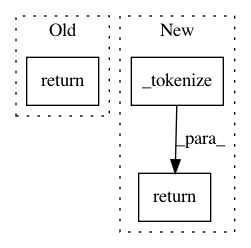

Before Change

    def tokenize(string):
        return _tokenize(string, [WORD_REGEX, SYMBOL_REGEX])

    def _tokenize(string, regexes):
        non_overlapping_tokens = []

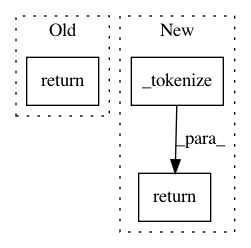

After Change

    def tokenize(string):
        return _tokenize(string, REGEXES_LIST)

    def _tokenize(string, regexes):
        non_overlapping_tokens = []

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 3

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| snipsco/snips-nlu | c19ac6b5692e2e8b5dec2f60ff306ecc5976e112 | 2017-05-31 | clement.doumouro@snips.ai | snips_nlu/tokenization.py | | tokenize |
| snipsco/snips-nlu | d264e82050700d9aaed31c11dbd65f9dbd03e4d9 | 2017-04-25 | adrien.ball@snips.net | snips_nlu/tokenization.py | | tokenize |
| snipsco/snips-nlu | d3954738b162fb166ef6591edfd9bfb083544073 | 2017-07-20 | adrien.ball@snips.net | snips_nlu/tokenization.py | | tokenize |
| allenai/allennlp | bb1b1c6e27b273a66135d02a04232835d5bfc3ca | 2019-11-19 | max.del.edu@gmail.com | allennlp/data/tokenizers/pretrained_transformer_tokenizer.py | PretrainedTransformerTokenizer | tokenize |