0cfdc391714cc2eea9502aa7e0019d24bae19192,algo/ppo.py,PPO,update,#PPO#Any#,34

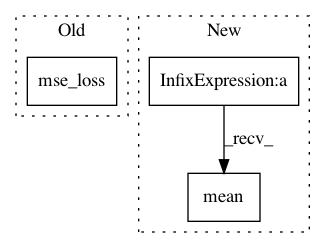

Before Change

        value_losses_clipped = (value_pred_clipped - return_batch).pow(2)
        value_loss = .5 * torch.max(value_losses, value_losses_clipped).mean()
    else:
        value_loss = 0.5 * F.mse_loss(return_batch, values)

    self.optimizer.zero_grad()
    (value_loss * self.value_loss_coef + action_loss -
     dist_entropy * self.entropy_coef).backward()

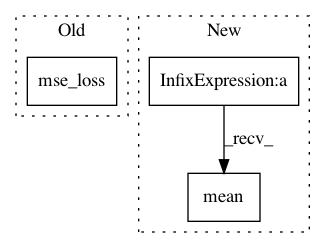

After Change

        value_losses_clipped = (value_pred_clipped - return_batch).pow(2)
        value_loss = 0.5 * torch.max(value_losses, value_losses_clipped).mean()
    else:
        value_loss = 0.5 * (return_batch - values).pow(2).mean()

    self.optimizer.zero_grad()
    (value_loss * self.value_loss_coef + action_loss -
     dist_entropy * self.entropy_coef).backward()
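
The record does not say why `F.mse_loss` was replaced. With its default mean reduction and equally shaped inputs, `F.mse_loss(return_batch, values)` is the mean of the squared differences, so both versions of the `else` branch should produce the same number. The sketch below reproduces that arithmetic, and the elementwise maximum of the clipped branch, in plain Python rather than PyTorch so that it runs without dependencies. The function names and sample numbers are hypothetical and are not taken from the commit.

```python
def half_mse(returns, values):
    """0.5 * mean((return - value)^2): the arithmetic of the `else` branch."""
    squared = [(r - v) ** 2 for r, v in zip(returns, values)]
    return 0.5 * sum(squared) / len(squared)


def half_clipped_mse(returns, values, clipped_values):
    """0.5 * mean of the per-element max of unclipped and clipped squared error."""
    worst = [max((v - r) ** 2, (c - r) ** 2)
             for r, v, c in zip(returns, values, clipped_values)]
    return 0.5 * sum(worst) / len(worst)


# Made-up sample data (assumption: all three sequences have the same length).
returns = [1.0, 2.0, 4.0, 3.0]
values = [0.0, 2.0, 2.0, 3.0]
clipped = [-0.5, 2.0, 3.0, 3.0]  # stands in for value_pred_clipped

print(half_mse(returns, values))                   # 0.625
print(half_clipped_mse(returns, values, clipped))  # 0.78125
```

Because the difference is squared, swapping the argument order (`return_batch - values` versus `values - return_batch`) does not change the result.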

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: ikostrikov/pytorch-a2c-ppo-acktr

Commit Name: 0cfdc391714cc2eea9502aa7e0019d24bae19192

Time: 2018-11-25

Author: ikostrikov@gmail.com

File Name: algo/ppo.py

Class Name: PPO

Method Name: update

Project Name: ikostrikov/pytorch-a2c-ppo-acktr

Commit Name: 79ec8f5009d71a891176f23af20fc077f058713a

Time: 2018-09-25

Author: ikostrikov@gmail.com

File Name: algo/ppo.py

Class Name: PPO

Method Name: update

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 8d52bd0b09152b02e0a5504d33593d0c290b88c7

Time: 2018-02-05

Author: max.lapan@gmail.com

File Name: ch14/06_train_d4pg.py

Class Name:

Method Name: