bed0a0ae26451c9897cf1ee0f7302e42eba9b42c,transformer/Models.py,,get_attn_subsequent_mask,#Any#,31

Before Change

assert seq.dim() == 2

attn_shape = (seq.size(0), seq.size(1), seq.size(1))

subsequent_mask = np.triu(np.ones(attn_shape), k=1).astype("uint8")

subsequent_mask = torch.from_numpy(subsequent_mask)

if seq.is_cuda:

    subsequent_mask = subsequent_mask.cuda()

After Change

subsequent_mask = torch.ones((len_s, len_s), device=seq.device, dtype=torch.uint8)

subsequent_mask = torch.triu(subsequent_mask, diagonal=1)

subsequent_mask = subsequent_mask.unsqueeze(0).expand(sz_b, len_s, len_s)

return subsequent_mask

class Encoder(nn.Module):

    """ A encoder model with self attention mechanism. """
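The change replaces a NumPy mask that is built on the CPU and then moved with `.cuda()` by a tensor allocated directly on `seq.device`. A minimal runnable sketch of the "After Change" version is given here. The function name `get_subsequent_mask` and the line that unpacks `sz_b, len_s` from `seq.size()` are assumptions: the excerpt uses both variables without showing where they are defined.

```python
import torch


def get_subsequent_mask(seq):
    """Return a (batch, len, len) mask with 1s strictly above the diagonal."""
    assert seq.dim() == 2
    # Assumption: batch size and sequence length are read from the input shape;
    # the excerpt does not show this line.
    sz_b, len_s = seq.size()

    # Allocate on the same device as the input, so no NumPy round trip
    # and no explicit .cuda() call are needed.
    subsequent_mask = torch.ones((len_s, len_s), device=seq.device, dtype=torch.uint8)
    subsequent_mask = torch.triu(subsequent_mask, diagonal=1)

    # expand() broadcasts one (len, len) mask over the batch without copying it.
    subsequent_mask = subsequent_mask.unsqueeze(0).expand(sz_b, len_s, len_s)
    return subsequent_mask


seq = torch.zeros(2, 3, dtype=torch.long)  # hypothetical batch: 2 sequences of length 3
mask = get_subsequent_mask(seq)
print(mask[0].tolist())  # [[0, 1, 1], [0, 0, 1], [0, 0, 0]]
```

Position `i` is masked from every later position `j > i`, and each batch element shares the same mask. Because `expand` returns a view, the result should be treated as read-only; call `.clone()` before any in-place modification.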

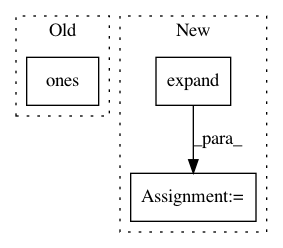

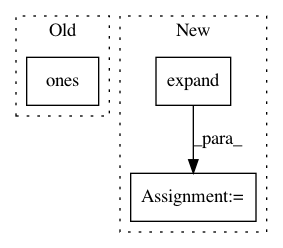

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: jadore801120/attention-is-all-you-need-pytorch

Commit Name: bed0a0ae26451c9897cf1ee0f7302e42eba9b42c

Time: 2018-08-23

Author: yhhuang@nlg.csie.ntu.edu.tw

File Name: transformer/Models.py

Class Name:

Method Name: get_attn_subsequent_mask

Project Name: facebookresearch/ParlAI

Commit Name: 1a6f95dc2d715783b358f4bca267d099efd3ac31

Time: 2020-06-09

Author: roller@fb.com

File Name: parlai/core/torch_generator_agent.py

Class Name: TorchGeneratorAgent

Method Name: _dummy_batch

Project Name: pytorch/pytorch

Commit Name: 093aca082e2878f3a28defe9075e7334dfceac70

Time: 2021-01-05

Author: fritz.obermeyer@gmail.com

File Name: test/distributions/test_distributions.py

Class Name: TestDistributionShapes

Method Name: test_one_hot_categorical_shape