16b7d06575fb72f3a0c9d09f38efc17066daf473,elephas/optimizers.py,Adadelta,get_updates,#Adadelta#Any#Any#Any#,154

Before Change

def get_updates(self, params, constraints, grads):
    accumulators = [shared_zeros(p.get_value().shape) for p in params]
    delta_accumulators = [shared_zeros(p.get_value().shape) for p in params]
    self.updates = []

    for p, g, a, d_a, c in zip(params, grads, accumulators,

After Change

        # update delta_accumulator
        new_d_a = self.rho * d_a + (1 - self.rho) * update ** 2
        new_weights.append(new_p)

    return new_weights

def get_config(self):

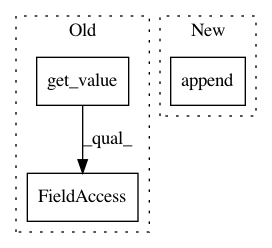

    return {"name": self.__class__.__name__,

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances:

Project Name: maxpumperla/elephas
Commit Name: 16b7d06575fb72f3a0c9d09f38efc17066daf473
Time: 2015-11-16
Author: max.pumperla@googlemail.com
File Name: elephas/optimizers.py
Class Name: Adadelta
Method Name: get_updates

Project Name: keras-team/keras
Commit Name: defa1283c44653c29b81403f9d9f19fb3df0a27d
Time: 2016-07-21
Author: francois.chollet@gmail.com
File Name: keras/optimizers.py
Class Name: Optimizer
Method Name: set_weights

Project Name: maxpumperla/elephas
Commit Name: 13e233ce7b50e463d557ec7964c11e4db5a5cb1f
Time: 2015-11-16
Author: max.pumperla@googlemail.com
File Name: elephas/optimizers.py
Class Name: Adagrad
Method Name: get_updates
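To illustrate the shape of the Adadelta `get_updates` change recorded in this entry (Theano `shared_zeros` accumulators and `self.updates` replaced by a method that computes and returns `new_weights`), here is a minimal sketch over plain NumPy arrays. It is a hypothetical class, not the elephas source: the default `lr`, `rho` and `epsilon` values, keeping the accumulators on the instance between calls, the extra `get_config` keys, and treating each entry of `constraints` as a callable applied to the updated weight array are all assumptions.

```python
import numpy as np


class Adadelta:
    """Hypothetical sketch: Adadelta whose get_updates returns new weights.

    Assumptions (not taken from elephas): default hyperparameters,
    accumulators persisted on the instance, constraints are callables.
    """

    def __init__(self, lr=1.0, rho=0.95, epsilon=1e-6):
        self.lr = lr                    # assumed default learning rate
        self.rho = rho                  # decay rate of both running averages
        self.epsilon = epsilon          # numerical-stability term
        self.accumulators = None        # running average of g ** 2
        self.delta_accumulators = None  # running average of update ** 2

    def get_updates(self, params, constraints, grads):
        # Lazily create zero accumulators shaped like each parameter.
        if self.accumulators is None:
            self.accumulators = [np.zeros_like(p) for p in params]
            self.delta_accumulators = [np.zeros_like(p) for p in params]

        new_weights = []
        for i, (p, g, c) in enumerate(zip(params, grads, constraints)):
            a = self.accumulators[i]
            d_a = self.delta_accumulators[i]
            # update accumulator of squared gradients
            new_a = self.rho * a + (1 - self.rho) * g ** 2
            # use the new accumulator and the *old* delta_accumulator
            update = g * np.sqrt(d_a + self.epsilon) / np.sqrt(new_a + self.epsilon)
            new_p = p - self.lr * update
            # update delta_accumulator
            new_d_a = self.rho * d_a + (1 - self.rho) * update ** 2
            # store the running averages for the next call
            self.accumulators[i] = new_a
            self.delta_accumulators[i] = new_d_a
            # apply the (assumed callable) constraint to the new weights
            new_weights.append(c(new_p))
        return new_weights

    def get_config(self):
        return {"name": self.__class__.__name__,
                "lr": self.lr, "rho": self.rho, "epsilon": self.epsilon}


opt = Adadelta()
params = [np.array([1.0, -2.0])]
grads = [np.array([0.5, -0.5])]
new_params = opt.get_updates(params, [lambda x: x], grads)
```

Because `delta_accumulators` start at zero, the first step is small (roughly `sqrt(epsilon)` times `g / RMS(g)`); each weight still moves against its gradient, and later calls grow the step as the running average of squared updates builds up.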