16a31e2c9fedc654e9117b42b8144adf1d0e4900,examples/reinforcement_learning/tutorial_A3C.py,,,#,259

Before Change

```python
import matplotlib.pyplot as plt

plt.plot(GLOBAL_RUNNING_R)
plt.xlabel("episode")
plt.ylabel("global running reward")
plt.savefig("a3c.png")
plt.show()
GLOBAL_AC.save_ckpt()
```
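The before-change snippet plots `GLOBAL_RUNNING_R`, which the script fills during training. A common way to build such a "global running reward" curve is an exponential moving average of per-episode returns. The sketch below assumes that form. The function name `running_rewards` and the default smoothing factor of 0.95 are hypothetical and are not taken from tutorial_A3C.py.

```python
from typing import List, Sequence


def running_rewards(episode_rewards: Sequence[float], smoothing: float = 0.95) -> List[float]:
    """Exponential moving average of per-episode returns.

    Hypothetical helper: smoothing=0.95 is an assumed default, not the
    value used in tutorial_A3C.py. The first episode seeds the average;
    each later entry is smoothing * previous + (1 - smoothing) * current.
    """
    history: List[float] = []
    for r in episode_rewards:
        if not history:
            history.append(float(r))
        else:
            history.append(smoothing * history[-1] + (1.0 - smoothing) * r)
    return history


if __name__ == "__main__":
    # With smoothing=0.5 each point is the mean of the previous average and the new return.
    print(running_rewards([10, 20, 30], smoothing=0.5))
```

The returned list has one entry per episode. It can be passed directly to `plt.plot`, as the before-change code does with `GLOBAL_RUNNING_R`.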

After Change

```python
# args, env, GLOBAL_AC, TEST_EPISODES, T0 and time are defined elsewhere in tutorial_A3C.py
if args.test:
    # ============================= EVALUATION =============================
    GLOBAL_AC.load()
    for episode in range(TEST_EPISODES):
        s = env.reset()
        episode_reward = 0
        while True:
            env.render()
            s = s.astype("float32")  # double to float
            a = GLOBAL_AC.get_action(s, greedy=True)
            s, r, d, _ = env.step(a)
            episode_reward += r
            if d:
                break
        print(
            "Testing | Episode: {}/{} | Episode Reward: {:.4f} | Running Time: {:.4f}".format(
                episode + 1, TEST_EPISODES, episode_reward,
                time.time() - T0))
```
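The after-change code runs a greedy evaluation loop when the script is started in test mode. The self-contained sketch below mirrors that loop under several assumptions. `ToyEnv` and `ToyPolicy` are hypothetical stand-ins for the Gym environment and `GLOBAL_AC`. The environment follows the older Gym API, in which `step` returns a 4-tuple `(obs, reward, done, info)`, as the snippet expects. The `--test` flag is only assumed to be declared with `argparse` in this way. Observations here are plain Python lists, so the NumPy `astype("float32")` cast from the original is omitted.

```python
import argparse
import random
import time
from typing import List, Tuple


class ToyEnv:
    """Hypothetical stand-in for a Gym environment (old 4-tuple step API).

    The agent walks along a line: action 1 moves right, action 0 moves left.
    The episode ends when the position reaches `goal` or after `max_steps` steps.
    """

    def __init__(self, goal: int = 3, max_steps: int = 10):
        self.goal = goal
        self.max_steps = max_steps
        self.pos = 0
        self.steps = 0

    def reset(self) -> List[float]:
        self.pos = 0
        self.steps = 0
        return [float(self.pos)]

    def step(self, action: int) -> Tuple[List[float], float, bool, dict]:
        self.pos += 1 if action == 1 else -1
        self.steps += 1
        reached = self.pos >= self.goal
        reward = 1.0 if reached else -0.25  # small penalty per non-goal step
        done = reached or self.steps >= self.max_steps
        return [float(self.pos)], reward, done, {}


class ToyPolicy:
    """Hypothetical policy with fixed action probabilities."""

    def __init__(self, probs: List[float]):
        self.probs = probs

    def get_action(self, state: List[float], greedy: bool = False) -> int:
        actions = range(len(self.probs))
        if greedy:
            # Deterministic evaluation: take the most probable action.
            return max(actions, key=lambda i: self.probs[i])
        # Training-style behaviour: sample an action from the distribution.
        return random.choices(actions, weights=self.probs)[0]


def evaluate(env, policy, episodes: int) -> List[float]:
    """Run `episodes` greedy episodes and return each episode's total reward."""
    t0 = time.time()
    returns = []
    for episode in range(episodes):
        s = env.reset()
        episode_reward = 0.0
        while True:
            a = policy.get_action(s, greedy=True)
            s, r, d, _ = env.step(a)
            episode_reward += r
            if d:
                break
        returns.append(episode_reward)
        print("Testing | Episode: {}/{} | Episode Reward: {:.4f} | Running Time: {:.4f}".format(
            episode + 1, episodes, episode_reward, time.time() - t0))
    return returns


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--test", action="store_true", help="run greedy evaluation")
    args = parser.parse_args()
    if args.test:
        evaluate(ToyEnv(), ToyPolicy([0.2, 0.8]), episodes=2)
```

Passing `greedy=True` makes evaluation deterministic because the policy always takes its most probable action. Training, by contrast, typically samples from the policy so the agent keeps exploring.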

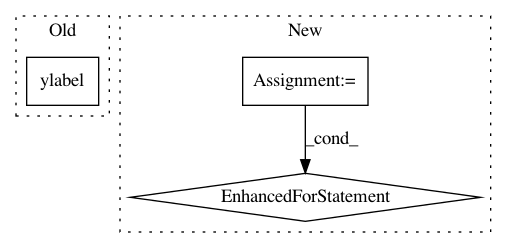

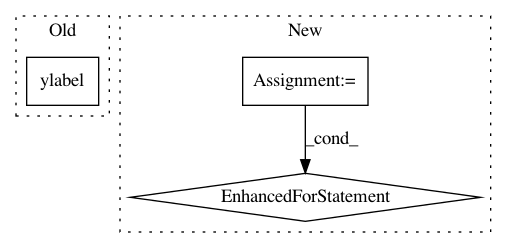

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| tensorlayer/tensorlayer | 16a31e2c9fedc654e9117b42b8144adf1d0e4900 | 2020-02-03 | 34995488+Tokarev-TT-33@users.noreply.github.com | examples/reinforcement_learning/tutorial_A3C.py | | |
| ScottfreeLLC/AlphaPy | 7a7d0e7b35cbee6b3409ac30ff4bf4edfe525af2 | 2019-11-17 | Mark.R.Conway@gmail.com | alphapy/plots.py | | plot_confusion_matrix |
| has2k1/plotnine | db31f8b4639b478cd77660d682bb0c27364aa3f1 | 2017-09-29 | has2k1@gmail.com | plotnine/qplot.py | | qplot |