e91a9941d0ee6d1198e4b05bbd39ce16b5c0d569,ml/rl/training/discrete_action_trainer.py,DiscreteActionTrainer,get_max_q_values,#DiscreteActionTrainer#Any#Any#Any#,137

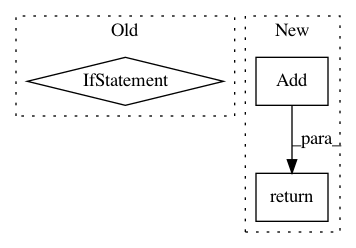

Before Change

            next_states, use_target_network
        )
        if possible_next_actions is not None:
            mask = np.multiply(
                np.logical_not(possible_next_actions),
                self.ACTION_NOT_POSSIBLE_VAL
            )
            q_values += mask
        return np.max(q_values, axis=1, keepdims=True)

    def get_q_values(
        self, states: np.ndarray, actions: np.ndarray
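The "Before Change" snippet masks out impossible actions by adding a large negative constant to their Q-values and then taking the row-wise maximum. The following is a minimal standalone sketch of that idea, not the project's implementation. The function name `max_q_with_mask`, the sample arrays, and the value `-1e9` for `ACTION_NOT_POSSIBLE_VAL` are assumptions made for illustration.

```python
import numpy as np

# Assumed value for illustration; the snippet only shows that the constant
# is read from self.ACTION_NOT_POSSIBLE_VAL.
ACTION_NOT_POSSIBLE_VAL = -1e9


def max_q_with_mask(q_values, possible_next_actions=None):
    """Row-wise max Q-value, ignoring actions marked as not possible.

    q_values: (batch, num_actions) array of Q-values.
    possible_next_actions: optional (batch, num_actions) 0/1 array,
        where 1 means the action is allowed.
    """
    q_values = np.asarray(q_values, dtype=np.float64)
    if possible_next_actions is not None:
        # Disallowed actions receive a large negative offset so they can
        # never win the max; allowed actions receive an offset of zero.
        mask = np.logical_not(possible_next_actions) * ACTION_NOT_POSSIBLE_VAL
        # Build a new array instead of using +=, so the caller's
        # q_values are left unmodified.
        q_values = q_values + mask
    return np.max(q_values, axis=1, keepdims=True)


q = np.array([[1.0, 5.0, 3.0],
              [2.0, 0.5, 4.0]])
pna = np.array([[1, 0, 1],
                [1, 1, 0]])

result = max_q_with_mask(q, pna)
print(result)  # column vector of shape (2, 1)
```

Unlike the original `q_values += mask`, this sketch does not mutate its input, which avoids surprising the caller when the same Q-value array is reused.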

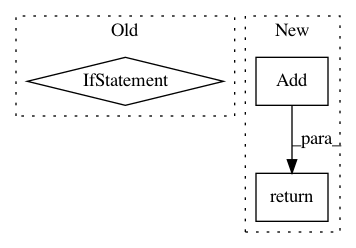

After Change

            self.ACTION_NOT_POSSIBLE_VAL,
            broadcast=1,
        )
        q_values = C2.Add(q_values, inverse_pna)
        q_values_max = C2.ReduceBackMax(
            q_values,
            num_reduce_dims=1,
        )
        return C2.ExpandDims(q_values_max, dims=[1])

    def get_q_values_all_actions(
        self,
        states: str,
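The "After Change" snippet replaces `np.max(..., axis=1, keepdims=True)` with a two-step reduction: `ReduceBackMax` followed by `ExpandDims`. The sketch below emulates those two steps in NumPy to show why the result has the same shape and values for a 2-D input. The helpers `reduce_back_max` and `expand_dims` are hypothetical stand-ins written for this example, based on the assumption that `ReduceBackMax` takes the max over the last `num_reduce_dims` axes and that `ExpandDims` inserts size-1 axes at the given positions. They are not the Caffe2 operators themselves.

```python
import numpy as np


def reduce_back_max(x, num_reduce_dims=1):
    # Hypothetical NumPy stand-in: max over the trailing num_reduce_dims axes.
    axes = tuple(range(x.ndim - num_reduce_dims, x.ndim))
    return np.max(x, axis=axes)


def expand_dims(x, dims):
    # Hypothetical NumPy stand-in: insert a size-1 axis at each listed position.
    for d in sorted(dims):
        x = np.expand_dims(x, axis=d)
    return x


# Q-values after the mask has been added (sample data for illustration).
q_masked = np.array([[1.0, -1e9, 3.0],
                     [2.0, 0.5, -1e9]])

# Two-step form mirroring the "After Change" snippet.
two_step = expand_dims(reduce_back_max(q_masked, num_reduce_dims=1), dims=[1])

# One-step form mirroring the "Before Change" snippet.
one_step = np.max(q_masked, axis=1, keepdims=True)

print(two_step.shape, np.array_equal(two_step, one_step))
```

Under these assumptions both forms yield a `(batch, 1)` column of maxima, so callers that relied on the `keepdims=True` shape should see no difference.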

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: facebookresearch/Horizon

Commit Name: e91a9941d0ee6d1198e4b05bbd39ce16b5c0d569

Time: 2018-02-27

Author: jjg@fb.com

File Name: ml/rl/training/discrete_action_trainer.py

Class Name: DiscreteActionTrainer

Method Name: get_max_q_values

Project Name: sympy/sympy

Commit Name: 55f138cf41ded9877f52d0d636a5e291a107c027

Time: 2020-07-13

Author: sachinagarwal0499@gmail.com

File Name: sympy/functions/elementary/trigonometric.py

Class Name:

Method Name: _peeloff_pi

Project Name: SheffieldML/GPy

Commit Name: 6637eb7ac808851f9902db6ad817e56ca44d0690

Time: 2014-03-18

Author: ibinbei@gmail.com

File Name: GPy/kern/_src/kern.py

Class Name: Kern

Method Name: add