447885e15243dd18d906e2e35ac34ec6dcf9a600,Reinforcement_learning_TUT/7_Policy_gradient/RL_brain.py,PolicyGradient,_build_net,#PolicyGradient#,129

Before Change

with tf.variable_scope("eval_net"):
    self.q_eval = self._build_layers(self.s, self.n_actions, trainable=True)

with tf.name_scope("loss"):
    self.loss = tf.reduce_sum(tf.square(self.q_target - self.q_eval))

with tf.name_scope("train"):
    self._train_op = tf.train.RMSPropOptimizer(self.lr).minimize(self.loss)
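The "Before Change" loss sums the squared gap between target and evaluated Q-values over every element of the batch. The following is a minimal pure-Python sketch of that reduction. It assumes `q_target` and `q_eval` are same-shaped nested lists of shape [batch, n_actions]; the helper name `squared_td_loss` is hypothetical and does not come from the repository.

```python
from typing import List

Matrix = List[List[float]]


def squared_td_loss(q_target: Matrix, q_eval: Matrix) -> float:
    """Mirror tf.reduce_sum(tf.square(q_target - q_eval)): sum over batch and actions."""
    if len(q_target) != len(q_eval):
        raise ValueError("batch sizes differ")
    total = 0.0
    for row_t, row_e in zip(q_target, q_eval):
        if len(row_t) != len(row_e):
            raise ValueError("action dimensions differ")
        # accumulate the squared error of every (sample, action) entry
        total += sum((t - e) ** 2 for t, e in zip(row_t, row_e))
    return total


if __name__ == "__main__":
    target = [[1.0, 2.0], [0.5, 0.0]]
    evaluated = [[0.5, 2.0], [0.0, 1.0]]
    print(squared_td_loss(target, evaluated))  # 1.5
```

Because this reduction is a sum rather than a mean, the loss scale grows with batch size; the "After Change" loss uses `tf.reduce_mean` instead.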

After Change

l1 = self._add_layer("hidden0", self.x_inputs, self.n_features, 10, tf.nn.relu)  # hidden layer 1
self.prediction = self._add_layer("output", l1, 10, 1, tf.nn.sigmoid)  # predicted probability of action 0

with tf.name_scope("loss"):
    # Bernoulli log-likelihood of the action that was taken
    loglik = self.fake_targets * tf.log(self.prediction) + (1 - self.fake_targets) * tf.log(1 - self.prediction)
    self.loss = -tf.reduce_mean(loglik * self.advantages)

with tf.name_scope("train"):
    self._train_op = tf.train.RMSPropOptimizer(self.lr).minimize(self.loss)
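The "After Change" loss treats the single sigmoid output as a Bernoulli policy: `loglik` is the log-probability of the action actually taken, and negating its advantage-weighted mean gives a REINFORCE-style policy-gradient surrogate. Minimizing this surrogate raises the probability of actions with positive advantage. The sketch below reproduces that loss in pure Python, together with its analytic gradient with respect to the pre-sigmoid logit z. For p = sigmoid(z) that gradient is -(y - p) * A / N. It assumes `fake_targets` holds 1 when action 0 was taken and 0 otherwise, and that the logit is available. The function names are hypothetical. One deliberate swap: the original applies `tf.log` to the sigmoid output directly, which yields -inf once the output saturates at 0 or 1. This sketch instead uses the mathematically equivalent log-sigmoid form, log p = -softplus(-z) and log(1 - p) = -softplus(z).

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Logistic function, branched to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus(x: float) -> float:
    """Stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pg_loss(logits: List[float], fake_targets: List[int], advantages: List[float]) -> float:
    """-mean(loglik * advantage), loglik = y*log(p) + (1-y)*log(1-p), p = sigmoid(logit)."""
    n = len(logits)
    total = 0.0
    for z, y, a in zip(logits, fake_targets, advantages):
        log_p = -softplus(-z)          # log(sigmoid(z))
        log_1m_p = -softplus(z)        # log(1 - sigmoid(z))
        loglik = y * log_p + (1 - y) * log_1m_p
        total += loglik * a
    return -total / n


def pg_loss_grad(logits: List[float], fake_targets: List[int], advantages: List[float]) -> List[float]:
    """Analytic d(loss)/d(logit_i) = -(y_i - p_i) * A_i / N for a sigmoid output."""
    n = len(logits)
    return [-(y - sigmoid(z)) * a / n for z, y, a in zip(logits, fake_targets, advantages)]


if __name__ == "__main__":
    # one step: action 0 taken (y = 1) with p = 0.5 and advantage 2, so loss = 2*ln(2)
    print(pg_loss([0.0], [1], [2.0]))
    print(pg_loss_grad([0.0], [1], [2.0]))  # [-1.0]
```

A negative gradient on the logit of a taken action with positive advantage means that a descent step increases that logit. Action 0 therefore becomes more likely, which is the intended effect of weighting the log-likelihood by the advantage.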

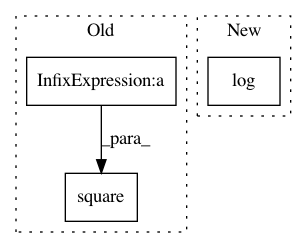

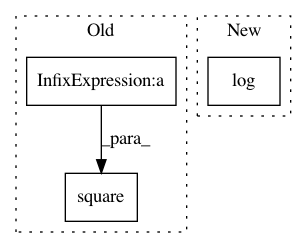

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: MorvanZhou/tutorials

Commit Name: 447885e15243dd18d906e2e35ac34ec6dcf9a600

Time: 2016-12-30

Author: morvanzhou@hotmail.com

File Name: Reinforcement_learning_TUT/7_Policy_gradient/RL_brain.py

Class Name: PolicyGradient

Method Name: _build_net

Project Name: reinforceio/tensorforce

Commit Name: 208e5d91d7f88cc1ab10ab1a4bfdd856b2691671

Time: 2017-05-22

Author: aok25@cl.cam.ac.uk

File Name: tensorforce/core/distributions/gaussian.py

Class Name: Gaussian

Method Name: log_probability

Project Name: jostmey/rwa

Commit Name: 6c2c4e18d28032ac547aa27806d581bc5691b090

Time: 2017-03-18

Author: hima.p.mehta@gmail.com

File Name: adding_problem_100/rwa_model/train.py

Class Name:

Method Name: