ca907342507c1139696f542de0a3351d7a382eee,reinforcement_learning/actor_critic.py,,finish_episode,#,65

Before Change

```python
rewards = (rewards - rewards.mean()) / (rewards.std() + np.finfo(np.float32).eps)
for (log_prob, value), r in zip(saved_actions, rewards):
    reward = r - value.data[0, 0]
    policy_loss -= (log_prob * reward).sum()
    value_loss += F.smooth_l1_loss(value, Variable(torch.Tensor([r])))
optimizer.zero_grad()
(policy_loss + value_loss).backward()
optimizer.step()
del model.rewards[:]
del model.saved_actions[:]
```
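The Before Change loop does three things. It standardizes the episode's returns, subtracts the critic's value estimate to get an advantage, and accumulates the policy and value losses. Below is a minimal vectorized sketch of those steps in current PyTorch. It assumes hypothetical sample tensors for a three-step episode. `torch.finfo` replaces `np.finfo` and gives the same float32 epsilon. `.detach()` stands in for `value.data[0, 0]`, since `Variable` was merged into `Tensor` in PyTorch 0.4. Summing Smooth L1 over all elements with `reduction="sum"` is assumed to match the snippet's per-step sum of one-element losses.

```python
import torch
import torch.nn.functional as F

# Hypothetical data for one 3-step episode (not from the original example).
returns = torch.tensor([3.0, 2.0, 1.0])                                  # discounted returns
values = torch.tensor([[2.5], [1.5], [1.2]], requires_grad=True)         # critic, shape (T, 1)
log_probs = torch.log(torch.tensor([0.5, 0.25, 0.8])).requires_grad_()  # actor log-probs

# Standardize returns; Tensor.std() uses the sample (n - 1) estimator by default.
eps = torch.finfo(torch.float32).eps
returns = (returns - returns.mean()) / (returns.std() + eps)

# Advantage = return minus critic estimate. detach() plays the role of
# value.data[0, 0]: the policy loss sends no gradient into the critic.
advantages = returns - values.squeeze(1).detach()

policy_loss = -(log_probs * advantages).sum()
# Summing element-wise Smooth L1 equals summing one-element losses step by step.
value_loss = F.smooth_l1_loss(values.squeeze(1), returns, reduction="sum")
```

Because the advantage is detached, `policy_loss.backward()` updates only the actor's log-probabilities. The critic is trained through `value_loss` alone.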

After Change

```python
rewards = (rewards - rewards.mean()) / (rewards.std() + np.finfo(np.float32).eps)
for (log_prob, value), r in zip(saved_actions, rewards):
    reward = r - value.data[0, 0]
    policy_losses.append(-log_prob * reward)
    value_losses.append(F.smooth_l1_loss(value, Variable(torch.Tensor([r]))))
optimizer.zero_grad()
loss = torch.cat(policy_losses).sum() + torch.cat(value_losses).sum()
loss.backward()
```
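The After Change snippet appends one policy term and one value term per step to `policy_losses` and `value_losses`. It then reduces both lists once before a single `backward()`. Below is a minimal sketch of that pattern in current PyTorch, with a Before-style running-total version included for comparison. The sketch assumes every per-step loss is a zero-dimensional tensor, so it reduces with `torch.stack`. The snippet instead uses `torch.cat`, because it predates zero-dimensional tensors (introduced in PyTorch 0.4), and `torch.cat` rejects zero-dimensional inputs. The functions `actor_critic_loss`, `incremental_loss`, and `make_inputs` and all sample data are hypothetical. `.detach()` stands in for `value.data[0, 0]`.

```python
import torch
import torch.nn.functional as F


def actor_critic_loss(log_probs, values, returns):
    """After-style: collect per-step losses in lists, reduce once at the end.

    log_probs and values are sequences of 0-dim tensors (one per step);
    returns is a 1-D tensor of normalized returns.
    """
    policy_losses, value_losses = [], []
    for log_prob, value, r in zip(log_probs, values, returns):
        advantage = r - value.detach()  # no policy gradient into the critic
        policy_losses.append(-log_prob * advantage)
        value_losses.append(F.smooth_l1_loss(value, r))
    # torch.stack, not torch.cat: torch.cat rejects 0-dim tensors.
    return torch.stack(policy_losses).sum() + torch.stack(value_losses).sum()


def incremental_loss(log_probs, values, returns):
    """Before-style: accumulate into running totals inside the loop."""
    policy_loss, value_loss = 0.0, 0.0
    for log_prob, value, r in zip(log_probs, values, returns):
        advantage = r - value.detach()
        policy_loss = policy_loss - log_prob * advantage
        value_loss = value_loss + F.smooth_l1_loss(value, r)
    return policy_loss + value_loss


def make_inputs(seed=0, steps=4):
    """Hypothetical sample episode: actor logits, critic values, returns."""
    g = torch.Generator().manual_seed(seed)
    logits = torch.randn(steps, generator=g, requires_grad=True)
    critic_values = torch.randn(steps, generator=g, requires_grad=True)
    returns = torch.linspace(1.0, -1.0, steps)
    return logits, critic_values, returns


# Usage: one backward pass over the whole episode's loss.
logits, critic_values, returns = make_inputs()
# Stand-in log-probabilities; iterating a tensor yields 0-dim views that keep gradients.
log_probs = list(torch.log_softmax(logits, dim=0))
loss = actor_critic_loss(log_probs, list(critic_values), returns)
loss.backward()
```

Both functions compute the same sum, up to floating-point summation order, so they yield the same loss and gradients. The list-based form differs in one respect: the individual per-step terms remain available until the final reduction.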

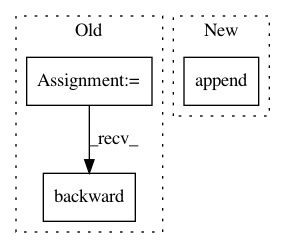

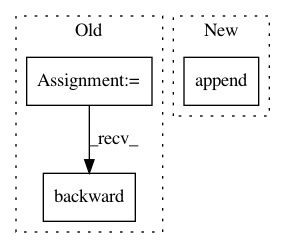

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

| Project | Commit | Date | Author | File | Class | Method |
|---|---|---|---|---|---|---|
| pytorch/examples | ca907342507c1139696f542de0a3351d7a382eee | 2017-12-04 | sgross@fb.com | reinforcement_learning/actor_critic.py | | finish_episode |
| facebookresearch/Horizon | 69061e67d62a067c2a8a5c6a440f7b9605c111d6 | 2020-08-28 | badri@fb.com | reagent/training/reinforce.py | Reinforce | train |
| adobe/NLP-Cube | be3b9d766660704a3008ba76b991c797425f1188 | 2018-03-30 | tiberiu44@gmail.com | cube/generic_networks/taggers.py | BDRNNTagger | learn |