e3fcbb639e115e8afe9600bd06aee81acfda6704,reagent/training/world_model/seq2reward_trainer.py,Seq2RewardTrainer,train,#Seq2RewardTrainer#Any#,38

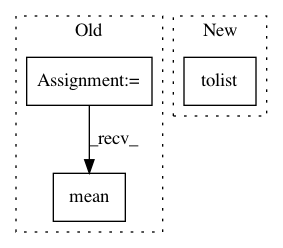

Before Change

    self.optimizer.step()
    detached_loss = loss.cpu().detach().item()
    q_values = (
        self.get_Q(
            training_batch,
            training_batch.batch_size(),
            self.params.multi_steps,

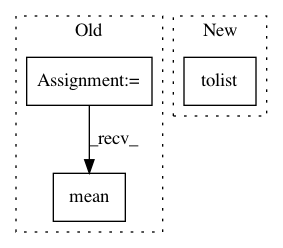

After Change

    detached_loss = loss.cpu().detach().item()
    if self.view_q_value:
        q_values = (
            get_Q(self.seq2reward_network, training_batch, self.all_permut)
            .cpu()
            .mean(0)
            .tolist()
        )
    else:
        q_values = [0] * len(self.params.action_names)
    logger.info(f"Seq2Reward trainer output: {(detached_loss, q_values)}")
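
The change above appears to gate the Q-value computation behind a flag and to fall back to a zero-filled list with one entry per action when the flag is off. The following is a minimal, framework-free sketch of that gating pattern, not the ReAgent implementation: `summarize_q_values` and its `compute_q` callback are hypothetical names introduced for illustration, and plain Python lists stand in for tensors.

```python
from typing import Callable, List, Sequence


def summarize_q_values(
    view_q_value: bool,
    action_names: Sequence[str],
    compute_q: Callable[[], List[List[float]]],
) -> List[float]:
    """Return per-action mean Q-values, or zeros when the diagnostic is off.

    Hypothetical helper: `compute_q` stands in for a call such as
    get_Q(...) and is assumed to return one row per batch element
    and one column per action.
    """
    if not view_q_value:
        # Skip the (assumed expensive) computation but keep the output
        # length stable so downstream logging code needs no special case.
        return [0.0] * len(action_names)

    rows = compute_q()
    n_rows = len(rows)
    # Average over the batch dimension, analogous to .mean(0) on a tensor.
    return [sum(column) / n_rows for column in zip(*rows)]
```

With the flag disabled, `compute_q` is never called, which is the point of the guard; with it enabled, the result has one averaged value per action.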

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: facebookresearch/Horizon

Commit Name: e3fcbb639e115e8afe9600bd06aee81acfda6704

Time: 2020-10-13

Author: czxttkl@fb.com

File Name: reagent/training/world_model/seq2reward_trainer.py

Class Name: Seq2RewardTrainer

Method Name: train

Project Name: masa-su/pixyz

Commit Name: 5273b035b2305458813de1932aecd43f0c3cf8f0

Time: 2018-12-11

Author: masa@weblab.t.u-tokyo.ac.jp

File Name: pixyz/models/ml.py

Class Name: ML

Method Name: __init__

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 155e770cb912f0ac89f862d29ae14b720ceef589

Time: 2018-02-28

Author: max.lapan@gmail.com

File Name: ch17/02_imag.py

Class Name:

Method Name: