67d13b4ba91b23ad29f660aae68a01ddbd809530,chainerrl/agents/ppo.py,PPO,act_and_train,#PPO#Any#Any#,407

Before Change

        self._update(dataset_iter, target_model, mean_advs, std_advs)

    def act_and_train(self, obs, reward):

        if hasattr(self.model, "obs_filter"):
            xp = self.xp
            b_state = self.batch_states([obs], xp, self.phi)
            self.model.obs_filter.experience(b_state)

        action, v = self._act(obs)

        # Update stats
        self.average_v += (

After Change

        b_state = self.obs_normalizer(b_state, update=False)

        # action_distrib will be recomputed when computing gradients
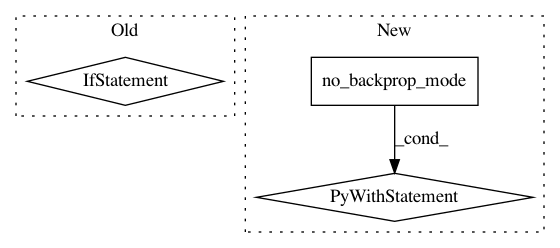

        with chainer.using_config("train", False), chainer.no_backprop_mode():
            action_distrib, value = self.model(b_state)
            action = chainer.cuda.to_cpu(action_distrib.sample().data)[0]
            self.entropy_record.append(float(action_distrib.entropy.data))

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances Project Name: chainer/chainerrl

Commit Name: 67d13b4ba91b23ad29f660aae68a01ddbd809530

Time: 2018-10-16

Author: muupan@gmail.com

File Name: chainerrl/agents/ppo.py

Class Name: PPO

Method Name: act_and_train

Project Name: chainer/chainerrl

Commit Name: 4e008e79147043207a5d0032aa771d7f811af0e1

Time: 2017-01-05

Author: muupan@gmail.com

File Name: chainerrl/agents/dqn.py

Class Name: DQN

Method Name: _compute_y_and_t

Project Name: chainer/chainerrl

Commit Name: 7cebf32b75ef71ea8e367579c71fea84deaac91b

Time: 2017-01-15

Author: muupan@gmail.com

File Name: chainerrl/agents/dpp.py

Class Name: AbstractDPP

Method Name: _compute_y_and_t
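In the Before Change code, each observation is passed to `self.model.obs_filter.experience`. In the After Change code, the batched observation is normalized with `update=False` and an action is then sampled from the policy distribution under `chainer.no_backprop_mode()`. The following is a minimal pure-Python sketch of that act step. It assumes a per-dimension running mean/variance normalizer (Welford's online algorithm) and a discrete action space. `RunningNormalizer`, `act_sketch`, and `policy_logits_fn` are hypothetical names, not chainerrl APIs. The snippet does not show where chainerrl updates its normalizer statistics, so the sketch exposes an explicit `experience()` call instead.

```python
import math
import random


class RunningNormalizer:
    """Hypothetical per-dimension observation normalizer (not a chainerrl class).

    Tracks a running mean and variance with Welford's online algorithm.
    """

    def __init__(self, size, eps=1e-8):
        self.count = 0
        self.mean = [0.0] * size
        self.m2 = [0.0] * size  # running sum of squared deviations per dimension
        self.eps = eps  # keeps the denominator away from zero

    def experience(self, x):
        # Fold one observation into the running statistics.
        self.count += 1
        for i, xi in enumerate(x):
            delta = xi - self.mean[i]
            self.mean[i] += delta / self.count
            self.m2[i] += delta * (xi - self.mean[i])

    def __call__(self, x, update=True):
        # update=False normalizes with the current statistics and leaves them unchanged.
        if update:
            self.experience(x)
        out = []
        for i, xi in enumerate(x):
            var = self.m2[i] / self.count if self.count > 0 else 1.0
            out.append((xi - self.mean[i]) / math.sqrt(var + self.eps))
        return out


def softmax(logits):
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    # Shannon entropy (in nats) of a categorical distribution.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def act_sketch(obs, normalizer, policy_logits_fn, entropy_record, rng):
    """Hypothetical act step for a discrete action space."""
    # Normalize with frozen statistics, mirroring update=False in the snippet.
    x = normalizer(obs, update=False)
    probs = softmax(policy_logits_fn(x))
    # Draw a single action index from the categorical distribution.
    action = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    # Record the policy entropy, as the snippet does with entropy_record.
    entropy_record.append(entropy(probs))
    return action
```

Plain Python has no autograd, so the sketch has nothing to disable. In the Chainer code, `no_backprop_mode()` fills that role. The original comment says the distribution is recomputed later when gradients are needed, which is why the sketch returns only the sampled action and not the distribution.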